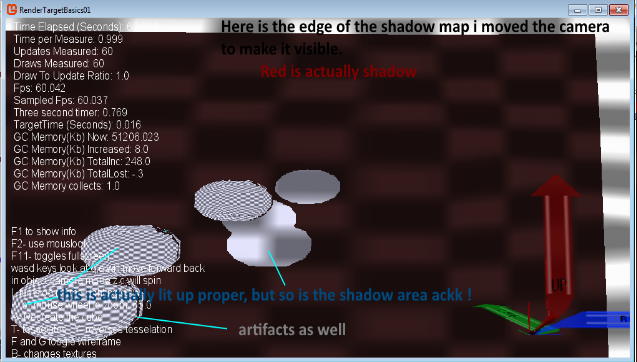

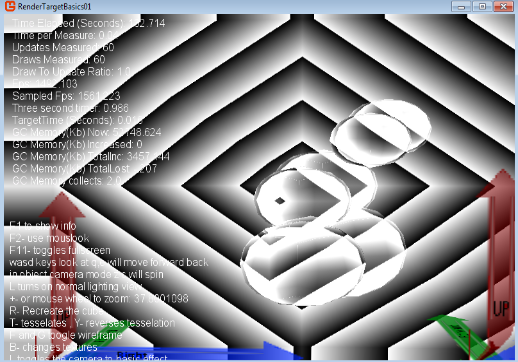

Well, the result is the opposite of what I would expect.

I'll post a pic in a second.

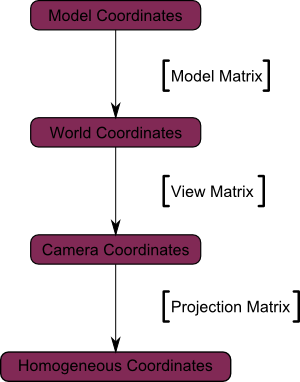

This part makes the shadow depth map, simple enough:

//_____________________________

//>>>>>>>>>shadowmap<<<<<<<<<<<

//_____________________________

//_____________________________

struct SMapVertexInput

{

float4 Position : POSITION0;

float2 TexCoords : TEXCOORD0;

};

struct SMapVertexToPixel

{

float4 Position : SV_Position;

float4 Position2D : TEXCOORD0;

};

struct SMapPixelToFrame

{

float4 Color : COLOR0;

};

float4 EncodeFloatRGBA(float v)

{

float4 kEncodeMul = float4(1.0, 255.0, 65025.0, 16581375.0); // 1, 255, 255^2, 255^3

float kEncodeBit = 1.0 / 255.0;

float4 enc = kEncodeMul * v;

enc = frac(enc);

enc -= enc.yzww * kEncodeBit;

return enc;

}

SMapVertexToPixel ShadowMapVertexShader(SMapVertexInput input)//(float4 inPos : POSITION)

{

SMapVertexToPixel Output;// = (SMapVertexToPixel)0;

Output.Position = mul(input.Position, lightsPovWorldViewProjection);

Output.Position2D = Output.Position;

return Output;

}

SMapPixelToFrame ShadowMapPixelShader(SMapVertexToPixel PSIn)

{

SMapPixelToFrame Output;// = (SMapPixelToFrame)0;

//Output.Color = PSIn.Position2D.z / PSIn.Position2D.w;

Output.Color = EncodeFloatRGBA(PSIn.Position2D.z / PSIn.Position2D.w);

return Output;

}

technique ShadowMap

{

pass Pass0

{

VertexShader = compile VS_SHADERMODEL ShadowMapVertexShader();

PixelShader = compile PS_SHADERMODEL ShadowMapPixelShader();

}

}
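The lighting shader below calls `DecodeFloatRGBA`, which isn't shown in this post. For reference, the usual counterpart to the encode above looks roughly like this. Treat it as a sketch under that assumption, not necessarily the exact function in my effect file. It has to be declared before the pixel shader that uses it.

```hlsl
// Hypothetical counterpart to EncodeFloatRGBA; the real DecodeFloatRGBA
// from the effect file is not included in this post.
float DecodeFloatRGBA(float4 rgba)
{
    // Weights are the reciprocals of the encode multipliers: 1, 255, 255^2, 255^3.
    float4 kDecodeDot = float4(1.0, 1.0 / 255.0, 1.0 / 65025.0, 1.0 / 16581375.0);

    // Summing the weighted channels rebuilds the original value in [0, 1).
    return dot(rgba, kDecodeDot);
}
```

This packing only round-trips values in the range [0, 1) and assumes all four channels of the target survive the write and the read.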

Second part, the lighting and shadow test:

//_______________________________________________________________

// >>>> LightingShadowPixelShader <<<

//_______________________________________________________________

struct VertexShaderLightingShadowInput

{

float4 Position : POSITION0;

float3 Normal : NORMAL0;

float4 Color : COLOR0;

float2 TexureCoordinateA : TEXCOORD0;

};

struct VertexShaderLightingShadowOutput

{

float4 Position : SV_Position;

float3 Normal : NORMAL0;

float4 Color : COLOR0;

float2 TexureCoordinateA : TEXCOORD0;

float4 Pos2DAsSeenByLight : TEXCOORD1;

float4 Position3D : TEXCOORD2;

};

struct PixelShaderLightingShadowOutput

{

float4 Color : COLOR0;

};

VertexShaderLightingShadowOutput VertexShaderLightingShadow(VertexShaderLightingShadowInput input)

{

VertexShaderLightingShadowOutput output;

output.Position = mul(input.Position, gworldviewprojection);

output.Pos2DAsSeenByLight = mul(input.Position, lightsPovWorldViewProjection);

output.Position3D = mul(input.Position, gworld);

output.Color = input.Color;

output.TexureCoordinateA = input.TexureCoordinateA;

output.Normal = input.Normal;

//output.Normal = normalize(mul(input.Normal, (float3x3)gworld));

return output;

}

PixelShaderLightingShadowOutput PixelShaderLightingShadow(VertexShaderLightingShadowOutput input)

{

PixelShaderLightingShadowOutput output;

float4 result = tex2D(TextureSamplerA, input.TexureCoordinateA);

// positional projection on depth map

float2 ProjectedTexCoords;

ProjectedTexCoords[0] = input.Pos2DAsSeenByLight.x / input.Pos2DAsSeenByLight.w / 2.0f + 0.5f;

ProjectedTexCoords[1] = -input.Pos2DAsSeenByLight.y / input.Pos2DAsSeenByLight.w / 2.0f + 0.5f;

// shadows depth map value

float depthStoredInShadowMap = DecodeFloatRGBA(tex2D(TextureSamplerB, ProjectedTexCoords));

// the real light distance

float realLightDepth = input.Pos2DAsSeenByLight.z / input.Pos2DAsSeenByLight.w;

// for testing

float4 inLightOrShadow = float4(.99f, .99f, .99f, 1.0f);

// if in bounds of the depth map

if ((saturate(ProjectedTexCoords).x == ProjectedTexCoords.x) && (saturate(ProjectedTexCoords).y == ProjectedTexCoords.y))

{

// in bounds slightly off color

inLightOrShadow = float4(.89f, .89f, .99f, 1.0f);

if ((realLightDepth - .1f) > depthStoredInShadowMap)

{

// shadow

inLightOrShadow = float4(0.20f, 0.10f, 0.10f, 1.0f);

}

}

// finalize

result *= inLightOrShadow;

result.a = 1.0f;

output.Color = result;

return output;

}

technique LightingShadowPixelShader

{

pass

{

VertexShader = compile VS_SHADERMODEL VertexShaderLightingShadow();

PixelShader = compile PS_SHADERMODEL PixelShaderLightingShadow();

}

}

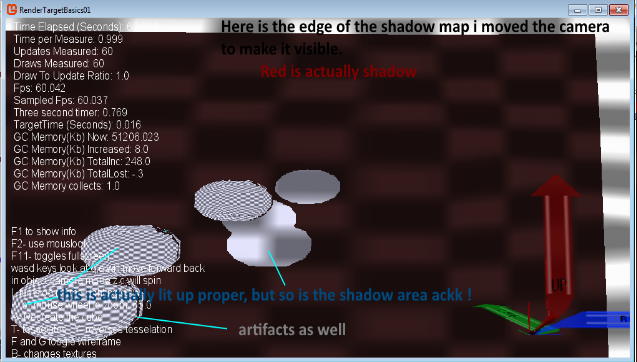

I dunno, I make my render target like this:

renderTargetDepth = new RenderTarget2D(GraphicsDevice, pp.BackBufferWidth, pp.BackBufferHeight, false, SurfaceFormat.Single , DepthFormat.Depth24);
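Since that target is `SurfaceFormat.Single`, it stores one 32-bit float channel (red). A variant that skips the RGBA packing, along the lines of the commented-out line in `ShadowMapPixelShader`, might look roughly like this. The two function names are made up for the sketch. The structs and `TextureSamplerB` are the ones from the shaders above. I'm not claiming this is the cause of the problem.

```hlsl
// Sketch only: hypothetical variant for a single-channel float target.
SMapPixelToFrame ShadowMapPixelShaderSingle(SMapVertexToPixel PSIn)
{
    SMapPixelToFrame Output;

    // Depth as seen by the light, after the perspective divide.
    float depth = PSIn.Position2D.z / PSIn.Position2D.w;

    // Only the red channel matters for a Single target; the rest is filler.
    Output.Color = float4(depth, 0.0, 0.0, 1.0);
    return Output;
}

// Matching read on the lighting side (hypothetical helper).
float SampleShadowDepthSingle(float2 uv)
{
    return tex2D(TextureSamplerB, uv).r;
}
```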

My depth buffer is turned on.

protected override void Draw(GameTime gameTime)

{

GraphicsDevice.SetRenderTarget(renderTargetDepth);

//SetToDrawToRenderTarget(renderTarget1);

GraphicsDevice.Clear(ClearOptions.Target | ClearOptions.DepthBuffer, Color.Black, 1.0f, 0);

GraphicsDevice.DepthStencilState = ds_depthtest_lessthanequals;

GraphicsDevice.RasterizerState = selected_RS_State2;

DrawSphereCreateShadow(currentSkyBoxTexture, obj_sphere02, vertices_skybox, indices_skybox);

DrawSphereCreateShadow(currentSkyBoxTexture, obj_sphere03, vertices_skybox, indices_skybox);

DrawSphereCreateShadow(currentSkyBoxTexture, obj_sphere04, vertices_skybox, indices_skybox);

GraphicsDevice.SetRenderTarget(null);

GraphicsDevice.Clear(ClearOptions.Target | ClearOptions.DepthBuffer, Color.Black, 1.0f, 0);

GraphicsDevice.RasterizerState = rs_solid_ccw;

GraphicsDevice.DepthStencilState = ds_depthtest_lessthanequals;

DrawSphereAndShadow(skyBoxImageA, renderTargetDepth, obj_sky, vertices_skybox, indices_skybox);

GraphicsDevice.RasterizerState = rs_solid_cw;

DrawSphereAndShadow(skyBoxImageA, renderTargetDepth, obj_sphere02, vertices_skybox, indices_skybox);

DrawSphereAndShadow(skyBoxImageB, renderTargetDepth, obj_sphere03, vertices_skybox, indices_skybox);

DrawSphereAndShadow(skyBoxImageC, renderTargetDepth, obj_sphere04, vertices_skybox, indices_skybox);

GraphicsDevice.RasterizerState = RasterizerState.CullNone;

GraphicsDevice.BlendState = BlendState.AlphaBlend;

DrawOrientArrows(obj_CompassArrow);

DrawOrientArrows(obj_Light);

GraphicsDevice.BlendState = BlendState.Opaque;

//GraphicsDevice.RasterizerState = rs_solid_nocull;

//DrawScreenQuad((Texture2D)renderTarget1);

DrawText(gameTime);

base.Draw(gameTime);

}

My far plane is set at something like 500, though.