Hi,

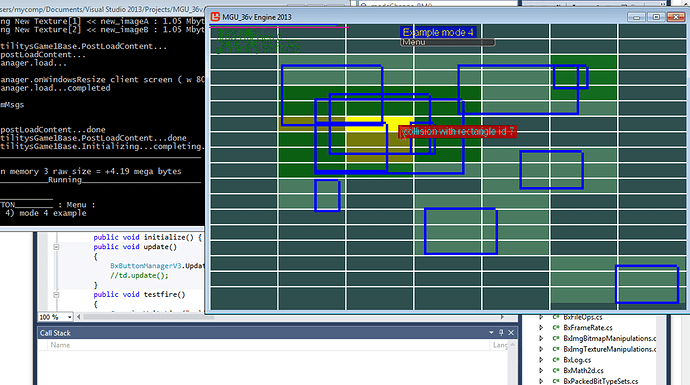

The question is in the title: I'm wondering whether it is useful or useless to sort objects by their distance to the camera when doing hardware instancing, or does the GPU do its own sorting with its z-buffer?


Thanks to anyone shedding some light on this.

I don't think the GPU ever sorts anything by default.

The draw order depends on the instanceVertexBuffer: instances are processed in the order their transformations are stored there.
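A minimal sketch of the CPU-side sort, in Python for brevity (in MonoGame this would be C# code that runs before the instance data is uploaded). It assumes each instance is just an (x, y, z) position; `sort_back_to_front` is a hypothetical helper, not a MonoGame API. Farthest-first is the order alpha blending needs, and squared distance is enough to compare distances.

```python
def sort_back_to_front(positions, camera_pos):
    """Return (x, y, z) instance positions ordered farthest-first from the camera."""
    cx, cy, cz = camera_pos

    def dist_sq(p):
        # Squared distance preserves the ordering, so no sqrt is needed
        dx, dy, dz = p[0] - cx, p[1] - cy, p[2] - cz
        return dx * dx + dy * dy + dz * dz

    return sorted(positions, key=dist_sq, reverse=True)


particles = [(0.0, 0.0, 5.0), (0.0, 0.0, 20.0), (1.0, 0.0, 10.0)]
ordered = sort_back_to_front(particles, camera_pos=(0.0, 0.0, 0.0))
print(ordered)  # [(0.0, 0.0, 20.0), (1.0, 0.0, 10.0), (0.0, 0.0, 5.0)]
```

Sorting alone changes nothing on screen: the sorted array still has to be copied into the instance buffer (for example with `SetData`) before the draw call.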

Hmm, so if I sort the positions relative to the eye and send them as the instance data, it should help the GPU.

But I don't see much of an FPS increase: when sorting 10,000 billboard positions, for example, the sort only lowers the FPS. I don't know how to profile the GPU without Windows 10, as I'm on Windows 7 and there is no GPU profiler in Visual Studio.


I will give Intel's GPA a try.

Is there a way to increase the speed when a lot of smoke particles overlap, for example? As the camera gets closer, the FPS drops because the billboards get larger. The drop is smaller when the particles use blend.add, but blend.alphablend is too slow, even with a render target 4x smaller.


Why would sorting alpha-blended textures increase FPS? They are all fully drawn anyway.

By the way, sorting 10,000 particles on the CPU is anything but free; it could be a bottleneck. Try different sort algorithms.
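One thing worth trying (a suggestion, not something measured in this thread): particles and camera usually move only a little between frames, so last frame's order is already nearly sorted. Insertion sort runs in O(n + k) for k out-of-order pairs, which can beat a general-purpose O(n log n) sort on such input. A Python sketch, with a hypothetical `insertion_sort_by_key` helper:

```python
def insertion_sort_by_key(items, key):
    """Sort `items` in place, largest key first (farthest particle first).

    Cost is O(n + k), where k is the number of out-of-order pairs, so it is
    cheap when `items` is already nearly sorted from the previous frame.
    """
    keys = [key(it) for it in items]
    for i in range(1, len(items)):
        item, k = items[i], keys[i]
        j = i - 1
        # Shift nearer entries one slot right until the slot for `item` is found
        while j >= 0 and keys[j] < k:
            items[j + 1], keys[j + 1] = items[j], keys[j]
            j -= 1
        items[j + 1], keys[j + 1] = item, k
    return items


# Per-particle camera distances this frame; the index list is last frame's order
depths = [50.0, 40.0, 41.0, 30.0, 10.0]
order = [0, 1, 2, 3, 4]
insertion_sort_by_key(order, key=lambda i: depths[i])
print(order)  # [0, 2, 1, 3, 4]
```

It degrades to O(n²) after a big change, such as the camera turning around, so falling back to a full sort in that case would be sensible.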


I was wondering whether, when alpha blending, the GPU keeps track of the alpha value and skips pixels whose z value is behind.

Something like: if the accumulated alpha for the current pixel reaches full opacity, skip any pixels that are farther away.

But that is not the case.
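What is described above is essentially front-to-back compositing with early termination, as used in software volume rendering. Fixed-function GPU blending does not do this: every fragment is shaded and blended regardless of how opaque the pixel already is. A Python sketch of the idea for a single pixel, assuming fragments arrive nearest-first as (color, alpha) pairs with straight (non-premultiplied) color; the function name and the 0.99 threshold are illustrative:

```python
def composite_front_to_back(fragments, opaque_threshold=0.99):
    """Composite (color, alpha) fragments sorted nearest-first.

    Uses the front-to-back "under" operator and stops once the accumulated
    alpha reaches `opaque_threshold`, skipping the remaining fragments.
    Returns (color, alpha, fragments_used).
    """
    color, alpha, used = 0.0, 0.0, 0
    for c, a in fragments:
        if alpha >= opaque_threshold:
            break  # pixel is effectively opaque; farther fragments are hidden
        color += (1.0 - alpha) * a * c
        alpha += (1.0 - alpha) * a
        used += 1
    return color, alpha, used


layers = [(1.0, 0.5)] * 20
print(composite_front_to_back(layers))  # (0.9921875, 0.9921875, 7)
```

With 20 layers at alpha 0.5, only 7 contribute before the pixel counts as opaque; the GPU would still blend all 20.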

Sorting 80,000 particles takes only 3 ms per frame on my i5 with a multithreaded sorting algorithm, and 10,000 is almost unnoticeable. So it may not be a problem for a single particle emitter.

Maybe it will get worse if I add 10 emitters… but I would sort the emitters beforehand.
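A sketch of the two-level idea in Python, assuming each emitter has a center and a list of particle positions (the dictionary layout and function names are hypothetical). It is only exact when emitters do not interpenetrate: if two emitters' volumes overlap, their particles interleave in depth and those emitters need a single merged sort.

```python
def dist_sq(p, cam):
    """Squared distance between two 3D points (enough for ordering)."""
    return sum((a - b) ** 2 for a, b in zip(p, cam))


def draw_order(emitters, cam):
    """Back-to-front particle order: emitters farthest-first, then particles within each.

    `emitters` maps a name to (emitter_center, [particle positions]).
    """
    ordered = []
    by_distance = sorted(emitters.values(), key=lambda e: dist_sq(e[0], cam), reverse=True)
    for _center, particles in by_distance:
        ordered.extend(sorted(particles, key=lambda p: dist_sq(p, cam), reverse=True))
    return ordered


emitters = {
    "near_dust": ((0.0, 0.0, 5.0), [(0.0, 0.0, 4.0), (0.0, 0.0, 6.0)]),
    "far_smoke": ((0.0, 0.0, 50.0), [(0.0, 0.0, 49.0), (0.0, 0.0, 51.0)]),
}
print(draw_order(emitters, (0.0, 0.0, 0.0)))
# [(0.0, 0.0, 51.0), (0.0, 0.0, 49.0), (0.0, 0.0, 6.0), (0.0, 0.0, 4.0)]
```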

The alpha blending happens after the fragment is fully processed.

But in the case of particles that wouldn’t even help, sadly, since you have to draw them back to front.

By the way, a big performance factor for blending is the render target's format: with fp16 or fp32 it's much, much more expensive.
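A back-of-the-envelope estimate of why the format matters, assuming a 1920×1080 target, an average overdraw of 20 full-screen layers (illustrative numbers, not measurements from this thread) and RGBA formats at 4, 8 and 16 bytes per pixel. Every blended fragment reads the destination pixel and writes it back, so traffic scales linearly with bytes per pixel; real GPUs add caches and compression, so treat these as ballpark upper bounds.

```python
# Bytes per pixel for some common RGBA render-target formats
BYTES_PER_PIXEL = {"RGBA8": 4, "RGBA16F": 8, "RGBA32F": 16}


def blend_traffic_bytes(width, height, overdraw, fmt):
    """Rough framebuffer traffic per frame for blended fragments.

    Each blended fragment reads the destination pixel and writes it back,
    i.e. 2 * bytes_per_pixel. Caches and compression are ignored.
    """
    return width * height * overdraw * 2 * BYTES_PER_PIXEL[fmt]


for fmt in BYTES_PER_PIXEL:
    mb = blend_traffic_bytes(1920, 1080, 20, fmt) / 1e6
    print(f"{fmt}: {mb:.0f} MB per frame")
# RGBA8: 332 MB per frame
# RGBA16F: 664 MB per frame
# RGBA32F: 1327 MB per frame
```

The fp32 target moves four times the data of an 8-bit one for exactly the same particles.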

The only way you are going to get that many particles rendering faster is to render smarter. In the worst case, with a 32-bit render target (8 bits per channel) and each texture consisting of a color value of (1, 1, 1, 1), you will reach maximum opacity with just 255 overlapping particles using Blend.Add. Rendering more overlapping particles in these areas is redundant. If you can work out where the particles are most densely packed, you can eliminate particles from the list without any reduction in visual quality.

The fastest polygon is the polygon you don’t draw.
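To make the worst case above concrete: with 8 bits per channel and additive blending, a channel saturates at 255, so any layers drawn after that cost fill rate without changing the pixel. A Python sketch for one channel, using byte-valued contributions (the function name is hypothetical):

```python
def additive_byte(channel_values):
    """Accumulate 8-bit contributions with saturating additive blending.

    Returns (final_value, layers_drawn_before_saturation). Once the channel
    hits 255, further layers cost fill rate but change nothing.
    """
    value, useful = 0, 0
    for v in channel_values:
        if value >= 255:
            break
        value = min(255, value + v)
        useful += 1
    return value, useful


print(additive_byte([1] * 400))   # (255, 255)
print(additive_byte([64] * 400))  # (255, 4)
```

Faint particles (byte value 1) need 255 layers to saturate; brighter ones saturate after a handful, which is why culling the densest areas costs little visually.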

Wouldn't you reach maximum opacity with 1 particle in that case, since it's already pure white with full alpha?

EDIT: Ignore me, I was thinking in shader terms (floats); you meant bytes.

Love this one.


Maybe I'll try an odd/even sorting algorithm on the GPU, with the particle positions stored as RGB in a 1D texture. Do 1D textures work in MonoGame? I remember reading something about this somewhere.

But to get started, I'll try pre-grouping billboards in a (sort of) map/reduce pattern, just as a proof of concept to see if it improves things.
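For reference, a Python sketch of odd-even transposition sort, running on the CPU here just to show the data flow. Within one phase all compare-and-swap pairs are disjoint, so a GPU version could handle a phase in one pass, with each texel comparing itself to its neighbour. One caveat: it needs n phases for n elements, so 10,000 particles would mean 10,000 passes; bitonic sort, which needs O(log² n) passes, is the variant usually chosen for GPU sorting.

```python
def odd_even_transposition_sort(values):
    """Sort ascending with odd-even transposition sort.

    Each phase compares disjoint neighbour pairs, so all comparisons in a
    phase are independent and could run in parallel. n phases suffice for
    n elements.
    """
    a = list(values)
    n = len(a)
    for phase in range(n):
        start = phase % 2  # even phase: pairs (0,1),(2,3)...; odd phase: (1,2),(3,4)...
        for i in range(start, n - 1, 2):
            if a[i] > a[i + 1]:
                a[i], a[i + 1] = a[i + 1], a[i]
    return a


print(odd_even_transposition_sort([5, 1, 4, 2, 3]))  # [1, 2, 3, 4, 5]
```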

Many games do this:

You can estimate screen coverage from particle size and distance to the camera, set a maximum for area × particle count, and then dynamically reduce the particle count (e.g. draw every second particle, then fewer, etc.) so that overdraw stays consistent.
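A Python sketch of that heuristic, assuming square billboards given as (world size, distance) pairs and a perspective camera with vertical field of view `fov_y`; the function names, the `budget_screens` parameter and the simple stride-based thinning are illustrative choices, not taken from any particular game:

```python
import math


def screen_coverage(size, distance, fov_y, aspect):
    """Approximate fraction of the screen covered by one size x size billboard."""
    view_height = 2.0 * distance * math.tan(fov_y / 2.0)  # world units visible vertically
    return min((size * size) / (aspect * view_height * view_height), 1.0)


def thin_to_budget(particles, fov_y, aspect, budget_screens):
    """Keep every k-th particle so total coverage stays close to the budget.

    `particles` is a list of (size, distance) pairs; `budget_screens` is the
    allowed overdraw in full screens (4.0 means four full-screen layers).
    """
    total = sum(screen_coverage(s, d, fov_y, aspect) for s, d in particles)
    if total <= budget_screens:
        return list(particles)
    stride = math.ceil(total / budget_screens)
    return particles[::stride]


cloud = [(4.0, 3.0)] * 1000  # 1000 billboards, 4 units wide, 3 units away
kept = thin_to_budget(cloud, math.radians(60), 16 / 9, budget_screens=8.0)
print(len(kept))  # 11
```

Here each billboard covers 75% of the screen, so the cloud adds up to about 750 screens of overdraw; with a budget of 8 screens every 94th particle is kept. Thinned particles would typically get a higher alpha so the cloud keeps its density.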

I actually did something similar for the dust in Bounty Road: the bigger it gets, the more “in-betweens” I simply remove from the list. So I have dense, small dust at the beginning and large smoke at the end, with more space between each instance.

I already have a LOD system similar to what UE4 does: the farther away, the fewer particles are drawn, using another, lower-resolution texture set. But it gets worse as the camera gets closer.

I'll see if I can try this. It is especially a problem when a player goes through a cloud of dust smoke.


On my NVIDIA 620 it falls to 3 fps.

On my 670, to 23 fps.

That is from a starting 135 fps when no particles are drawn. The last ~10 units before entering the smoke account for most of the FPS drop. It behaves a bit like the positive branch of 1/x: the FPS drops really quickly once the particles are about 1/4 of the screen in size with alpha blending.

It would have been better for my engine to do smoke/fog with compute shaders, in a global-illumination style.
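Some standard perspective-projection arithmetic helps explain the steep curve (an estimate, not a measurement from this thread). With vertical field of view θ, aspect ratio a, and a square billboard of world size s at distance d, the fraction of the screen the billboard covers, as long as it does not yet fill the screen, is

$$
f(d) = \frac{s^2}{a\,\bigl(2d\tan(\theta/2)\bigr)^2} \propto \frac{1}{d^2}
$$

and for N overlapping billboards on a W×H render target the blending work is roughly N·f(d)·W·H fragments. Halving the distance quadruples the fill cost per particle, until f reaches 1.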

My LOD system switched from one emitter to another with fewer, lower-resolution particles.

Now that I'm using instancing, I thought about removing vertices as suggested instead of switching emitters, which forces me to create one emitter for each LOD I want.

I've done something similar to what @kosmonautgames suggested, but as this is for rendering smoke and I did not want to lose the “opacity/thickness”, I did this:

When particles are “far” and their size reaches a certain threshold, I render a single quad in place of many, with a higher alpha to make it darker. (I still have to experiment to find the right mathematical function of the number of skipped quads, but there is no way to know how much they overlap. At a far distance I find it quite OK, almost unnoticeable.) I have gained about 10 fps on my NVIDIA 620: from 3 to 13 is not bad ^^

But I still have some work to do to make it faster. Maybe I should try something like MegaParticles (or is it called GigaParticles?).

I have read some articles about volumetric fog, but it seems that it is not viable without compute shaders.
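For the replacement quad described above, there is a closed form if the skipped quads overlap completely and all share the same alpha: with the standard “over” blending rule, n such layers reach an opacity of 1 - (1 - alpha)^n, so the single quad could use that alpha. A Python sketch comparing the closed form with layer-by-layer blending; it assumes full overlap, so with partial overlap it overestimates, and a smaller effective n would be needed:

```python
def merged_alpha(alpha, n):
    """Opacity of n identical, fully overlapping layers blended with 'over'."""
    return 1.0 - (1.0 - alpha) ** n


def composite_over(alpha, n):
    """Blend n layers one at a time and return the accumulated opacity."""
    acc = 0.0
    for _ in range(n):
        acc = alpha + (1.0 - alpha) * acc  # over: src_a + (1 - src_a) * dst_a
    return acc


print(round(merged_alpha(0.2, 5), 5))    # 0.67232
print(round(composite_over(0.2, 5), 5))  # 0.67232
```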

I don't think either instancing or sorting is the problem. From your description, it sounds like the cost actually comes from the amount and size of the overlap.

With each particle being, as you said, up to a quarter of the screen, that's a lot of work for the GPU. I think you're simply drawing over too large an area, over and over, on the same spots.

Hypothetically, this could be culled per pixel by increasing the alpha of a single previously drawn quad instead of adding alpha values iteratively per pixel.

I'd recommend:

A) Doing as little as possible in the pixel shader.

B) Calculating many smaller smoke quads and, instead of sorting them, placing them into a screen-based quadtree or spatial bucket.

Then, by process of elimination, find final drawn quad areas that completely overlap each other, with the goal of drawing one quad in that screen area at a higher opacity instead of drawing many times to the same area.

I can only try to convey this idea with a picture of something totally unrelated to smoke, as I have never attempted this with large smoke particles.

Essentially this is equivalent to combining all non-edge areas of smoke particles that fully overlap, to reduce the workload on the GPU, and drawing separately the smoke edges that border other smoke particles on only one side, possibly with varying alpha values for each quad vertex. This might also require calculating clipped UV coordinates.

I don't have an algorithm for this already, as I have never actually had to tackle this specific problem.

However, that is how I would approach it if smoke could, and had to, fill large areas of the screen and it was dropping framerates substantially.

That, or something similar to a screen-space smoke visibility grid linked to a large smoke texture that gets drawn to the screen in one shot through a render target. I do not know if there are existing algorithms or techniques for what I have attempted to describe, or what the proper search terms would be.
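One possible reading of suggestion B, sketched in Python with a uniform screen grid as the “spatial bucket”: count how many quads cover each grid cell completely, and for cells covered by at least two quads compute the alpha that a single merged draw would need (1 - (1 - alpha)^n for n identical, fully overlapping layers). It assumes axis-aligned screen rectangles in whole pixels and a uniform per-quad alpha; all names are hypothetical, and the hard part (clipping those interiors out of the original quads and fixing up their UVs) is not shown.

```python
from collections import defaultdict


def covering_counts(quads, cell_size):
    """Count, per grid cell, how many quads cover that cell completely.

    `quads` are screen rectangles (x0, y0, x1, y1) in whole pixels; cell
    (cx, cy) spans [cx*cell_size, (cx+1)*cell_size) on each axis.
    """
    counts = defaultdict(int)
    for x0, y0, x1, y1 in quads:
        # Only cells lying entirely inside the rectangle are counted
        first_x, first_y = -(-x0 // cell_size), -(-y0 // cell_size)  # ceiling division
        end_x, end_y = x1 // cell_size, y1 // cell_size              # floor division
        for cx in range(first_x, end_x):
            for cy in range(first_y, end_y):
                counts[(cx, cy)] += 1
    return counts


def merge_plan(quads, cell_size, alpha, min_layers=2):
    """Map each cell covered by >= min_layers quads to the alpha of one merged draw."""
    return {cell: 1.0 - (1.0 - alpha) ** n
            for cell, n in covering_counts(quads, cell_size).items()
            if n >= min_layers}


quads = [(0, 0, 64, 64), (0, 0, 64, 64), (32, 0, 96, 64)]
print(sorted(merge_plan(quads, cell_size=32, alpha=0.5).items()))
# [((0, 0), 0.75), ((0, 1), 0.75), ((1, 0), 0.875), ((1, 1), 0.875)]
```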

This is a nice idea, but it would only be possible if I were not using hardware instancing, by doing this on the CPU with multiple threads to maximize speed (or maybe not).

Using this technique with instancing would make me compute all these overlaps on the GPU in the vertex shader, and I am not sure it would be faster. I don't know if I can skip vertices once they have been sent to the GPU.

Maybe it would be possible with a geometry shader, using something like voxels to group particles that share a voxel far from the camera.

Would it be possible with 80,000 particles like this?

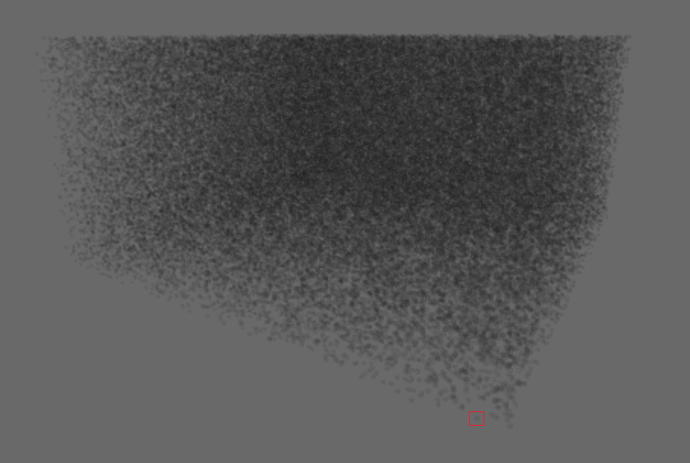

They are too small to overlap, but that case will come up when I use this to simulate space dust to give the player a feeling of movement.

In red is the quad of a single particle's billboard.

I'm grouping particles that are far from the camera and not too far from each other into a single particle. But this is done on the CPU before sending the positions to the GPU; then, through the vertex declaration, the GPU knows where each quad must be placed from the positions. If anyone knows a way to skip vertices once they are on the GPU (like a discard or clip(-1) for vertices), it would save more time and make the particle system faster.
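A Python sketch of that CPU-side grouping, assuming particles are (x, y, z) tuples and using a uniform voxel grid to decide what counts as “not too far from each other”; `group_far_particles`, `near_limit` and `voxel_size` are hypothetical names. Each group collapses to its average position plus a count, which could then drive the merged quad's size or opacity. A voxel size that grows with distance would be a natural refinement.

```python
import math
from collections import defaultdict


def group_far_particles(positions, camera, near_limit, voxel_size):
    """Merge particles beyond `near_limit` that share a voxel into one particle.

    Returns a list of (position, count). Near particles are kept unchanged
    with count 1; each far group becomes its average position and its size.
    """
    result = []
    buckets = defaultdict(list)
    for p in positions:
        if math.dist(p, camera) < near_limit:
            result.append((p, 1))
        else:
            key = tuple(math.floor(c / voxel_size) for c in p)  # voxel index
            buckets[key].append(p)
    for members in buckets.values():
        n = len(members)
        centroid = tuple(sum(axis) / n for axis in zip(*members))
        result.append((centroid, n))
    return result


points = [(0.0, 0.0, 2.0), (0.0, 0.0, 40.2), (0.0, 0.0, 40.8), (0.0, 0.0, 45.5)]
grouped = group_far_particles(points, (0.0, 0.0, 0.0), near_limit=10.0, voxel_size=1.0)
print([count for _, count in grouped])  # [1, 2, 1]
```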

Well, usually for billboards you just send the same position for all 4 vertices to the GPU and then expand them in the vertex shader. (This is done mainly so they always face the camera without any extra math on the CPU side.)

If you don't expand them, they cover no pixels.

Yes, but in the vertex shader there is no collision detection to know whether they overlap?
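A Python sketch of the per-vertex math described above, assuming each of the 4 vertices carries a corner offset in {-1, 1} × {-1, 1} and that the camera's unit right and up vectors are available (for example from the view matrix); in a real vertex shader this runs once per vertex. The `visible` flag is a hypothetical per-instance value: collapsing all corners onto the center gives zero-area triangles that rasterize no pixels, which is a common way to “skip” an instance in the vertex stage. A vertex still only sees its own data, so a decision like “this quad is hidden by its neighbours” has to be computed beforehand, for example on the CPU.

```python
def expand_billboard(center, right, up, half_size, visible=True):
    """Return the 4 world-space corners of a camera-facing quad.

    `right` and `up` are the camera's unit right/up vectors. When `visible`
    is False, every corner collapses onto `center`, producing zero-area
    triangles that cover no pixels.
    """
    s = half_size if visible else 0.0
    corners = []
    for ox, oy in ((-1, -1), (1, -1), (1, 1), (-1, 1)):  # per-vertex corner offsets
        corners.append(tuple(c + (ox * r + oy * u) * s
                             for c, r, u in zip(center, right, up)))
    return corners


quad = expand_billboard((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), 2.0)
print(quad)  # [(-2.0, -2.0, 0.0), (2.0, -2.0, 0.0), (2.0, 2.0, 0.0), (-2.0, 2.0, 0.0)]
```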

EDIT: I think I have found an even faster solution, similar to MegaParticles but without the “shower door” effect when the camera moves.

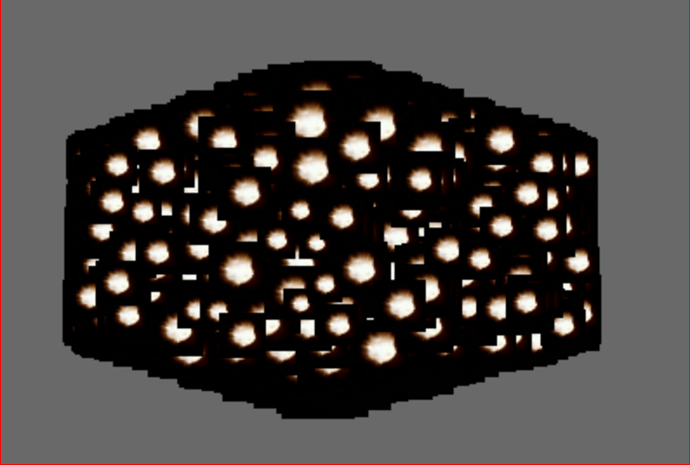

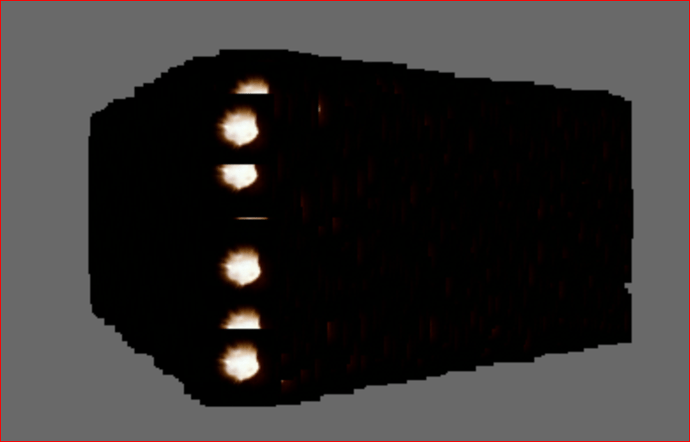

The problem is: even if I sort the positions (i.e. the center of each billboard, used in the vertex shader to calculate where the 2 triangles of one instance need to be) really fast, the GPU seems to ignore the order of the sequence.

I have to use DepthStencilState.Default to get the closest ones in front of the farthest ones; even if I sort them beforehand, the order seems to be ignored by the GPU.

DepthRead gives a result as if the particles were not sorted (and kills performance):

The expected result (Default):

I used an opaque texture to see them better.

EDIT: Give me a gun… I spent so much time on this, and in fact the sorted list was never used!!!

`instanceBuffer.SetData(instanceData);`

was missing after my sort, so the GPU kept drawing the old, unsorted instance data.

Oh, I don't think I understood what you were saying before.

I was under the impression that these particles were large and overlapping each other in the extreme, but this is a ton of small particles that don't even fully engulf each other.

That was also the impression I had, until I realized I was not calling SetData at the right place in the code.

I should manage to sleep more than 5 hours a night. Thank you, insomnia…

Now that I have a speedy sort algorithm for the closest quads only, and have validated that the particles are drawn in the right order, I can focus on improving the system: animation, fading, sprite sheets… It already supports vertex lighting and per-pixel lighting in the G-buffer.