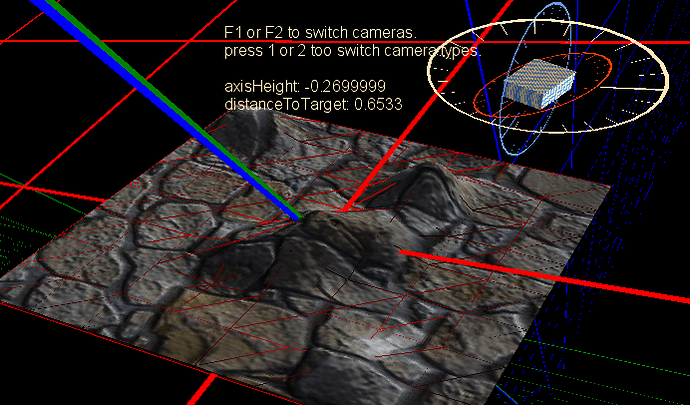

Yeah, when I got the shader working, the orientations were all screwed up.

Guess I'll just add tangents to the mesh and try to do it the regular way.

I don't like having the extra data in there, but oh well.
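If you go the "add tangents to the mesh" route, a per-triangle tangent can be derived from the positions and UVs. Here is a minimal C++ sketch of that, as a CPU-side stand-in for whatever builds the vertex buffer. `Vec2`, `Vec3` and `triangle_tangent` are hypothetical helpers, not MonoGame API.

```cpp
#include <cassert>
#include <cmath>

// Minimal stand-ins for Vector2/Vector3 (hypothetical CPU-side helpers).
struct Vec2 { float u, v; };
struct Vec3 { float x, y, z; };

Vec3 sub(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
Vec3 scale(Vec3 a, float s) { return {a.x * s, a.y * s, a.z * s}; }
float dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
Vec3 normalize(Vec3 a) { return scale(a, 1.0f / std::sqrt(dot(a, a))); }

// Tangent of one triangle: the object-space direction in which U grows.
// Solves e1 = du1*T + dv1*B and e2 = du2*T + dv2*B for T.
Vec3 triangle_tangent(Vec3 p0, Vec3 p1, Vec3 p2, Vec2 t0, Vec2 t1, Vec2 t2) {
    Vec3 e1 = sub(p1, p0);
    Vec3 e2 = sub(p2, p0);
    float du1 = t1.u - t0.u, dv1 = t1.v - t0.v;
    float du2 = t2.u - t0.u, dv2 = t2.v - t0.v;
    float det = du1 * dv2 - du2 * dv1;
    assert(std::fabs(det) > 1e-12f && "degenerate UV mapping");
    // T = (dv2*e1 - dv1*e2) / det
    return normalize(scale(sub(scale(e1, dv2), scale(e2, dv1)), 1.0f / det));
}
```

For per-vertex tangents you would typically sum this over the triangles sharing a vertex and then re-orthogonalize against the vertex normal. Note that this gives the U direction, while `PsNormMap` puts the tangent in `mat[1]` (the green/"up" row), so whichever texture direction you store has to match the row it ends up in.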

Edit:

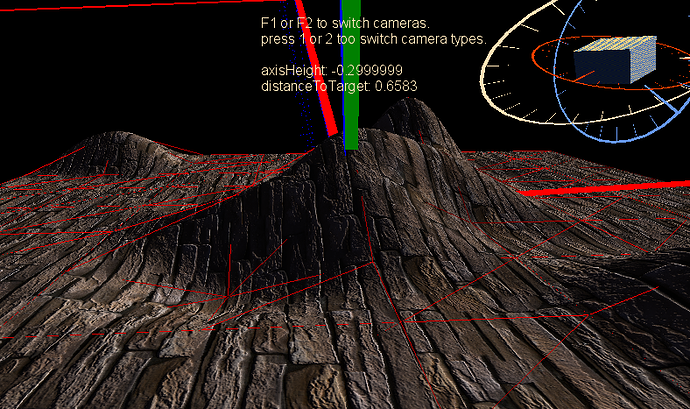

That's nice. It's one of Eric Metay's brick pictures from his shared images site.

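```hlsl
// Globals the effect expects (types assumed; the original snippet used them without declaring them).
// VS_SHADERMODEL / PS_SHADERMODEL come from the #if OPENGL block in MonoGame's default .fx template.
float4x4 World;
float4x4 View;
float4x4 Projection;
float3 LightPos;
float3 LightDir;
float4 LightColor;
float4 AmbientColor;

Texture2D SpriteTexture;
Texture2D NormalTexture;

sampler2D SpriteSampler = sampler_state
{
    Texture = <SpriteTexture>;
};

sampler2D NormalSampler = sampler_state
{
    Texture = <NormalTexture>;
};

//___________________________________

struct VsNormMapInput
{
    float4 Position : POSITION0;
    float3 Normal   : NORMAL0;
    float2 TexCoord : TEXCOORD0;
    // The tangent rides in a second NORMAL channel, so the vertex declaration
    // must use VertexElementUsage.Normal with usage index 1 for it.
    float3 Tangent  : NORMAL1;
};

struct VsNormMapOutput
{
    float4 Position      : SV_POSITION;
    float4 PositionWorld : TEXCOORD4;
    float2 TexCoord      : TEXCOORD0;
    float3 Normal        : TEXCOORD1;
    float3 Tangent       : TEXCOORD2;
};

VsNormMapOutput VsNormMap(VsNormMapInput input)
{
    VsNormMapOutput output;

    // Row-vector convention: object -> world -> view -> projection.
    float4x4 vp = mul(View, Projection);
    float4x4 wvp = mul(World, vp);
    output.Position = mul(input.Position, wvp);

    // World-space position, used for the light distance in the pixel shader.
    output.PositionWorld = mul(input.Position, World);

    // Normal and tangent stay in object space; the pixel shader moves them to world space.
    output.Normal = input.Normal;
    output.Tangent = input.Tangent;
    output.TexCoord = input.TexCoord;

    return output;
}
```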
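The vertex shader builds `mul(World, mul(View, Projection))` and then does `mul(input.Position, wvp)`, i.e. the position is a row vector, so the leftmost matrix is applied first. A small C++ sketch of that convention, with hypothetical `Vec4`/`Mat4` helpers laid out row-major like MonoGame's `Matrix` (translation in the last row):

```cpp
#include <array>
#include <cassert>

// Row-major 4x4 (row r, column c); hypothetical helper types.
using Vec4 = std::array<float, 4>;
using Mat4 = std::array<std::array<float, 4>, 4>;

// Row vector times matrix, the same contraction as HLSL mul(v, M).
Vec4 mul(const Vec4& v, const Mat4& m) {
    Vec4 r{};
    for (int c = 0; c < 4; ++c)
        for (int k = 0; k < 4; ++k)
            r[c] += v[k] * m[k][c];
    return r;
}

// Matrix product, the same as HLSL mul(A, B).
Mat4 mul(const Mat4& a, const Mat4& b) {
    Mat4 r{};
    for (int i = 0; i < 4; ++i)
        for (int j = 0; j < 4; ++j)
            for (int k = 0; k < 4; ++k)
                r[i][j] += a[i][k] * b[k][j];
    return r;
}

// Uniform scale.
Mat4 scaling(float s) {
    return {{{s, 0, 0, 0}, {0, s, 0, 0}, {0, 0, s, 0}, {0, 0, 0, 1}}};
}

// Translation: with row vectors the offset sits in the last row.
Mat4 translation(float x, float y, float z) {
    return {{{1, 0, 0, 0}, {0, 1, 0, 0}, {0, 0, 1, 0}, {x, y, z, 1}}};
}
```

With `world = scaling(2)` and `view = translation(1, 0, 0)`, transforming `(1, 1, 1, 1)` by `mul(world, view)` gives the same result as applying `world` and then `view` one after the other, while `mul(view, world)` gives a different point. That is why the order in `VsNormMap` matters.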

```hlsl
float4 PsNormMap(VsNormMapOutput input) : COLOR0
{
    float4 DiffuseColor = tex2D(SpriteSampler, input.TexCoord);
    float3 NormalMap = tex2D(NormalSampler, input.TexCoord).rgb;

    // Flip Y: the program I used flipped the green channel.
    NormalMap.g = 1.0f - NormalMap.g;
    // Unpack from [0, 1] to [-1, 1].
    NormalMap = normalize(NormalMap * 2.0f - 1.0f);

    // Object -> world for directions: use the 3x3 part of World and renormalize
    // (interpolation changes the length; assumes World has no non-uniform scale).
    float3 normal = normalize(mul(input.Normal, (float3x3)World));
    float3 tangent = normalize(mul(input.Tangent, (float3x3)World));

    // Tangent-space basis; the row order decides which texture axis maps to which direction.
    float3x3 mat;
    mat[0] = cross(normal, tangent); // right
    mat[1] = tangent;                // up
    mat[2] = normal;                 // forward
    NormalMap = normalize(mul(NormalMap, mat));

    // Distance to the light (not used yet; handy for attenuation).
    float D = length(LightPos - input.PositionWorld.xyz);

    // Directional Lambert lighting plus a small ambient term.
    float3 N = NormalMap;
    float3 L = normalize(-LightDir);
    float3 Diffuse = LightColor.rgb * max(dot(N, L), 0.0f);
    float3 Ambient = AmbientColor.rgb;
    float AmbientStrength = 0.1f;
    float3 FinalColor = DiffuseColor.rgb * ((Ambient * AmbientStrength) + (Diffuse * (1.0f - AmbientStrength)));

    return float4(FinalColor, 1.0f);
}

technique MapDrawing
{
    pass P0
    {
        VertexShader = compile VS_SHADERMODEL VsNormMap();
        PixelShader = compile PS_SHADERMODEL PsNormMap();
    }
}
```
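To check what the pixel shader does with a texel, here is a CPU-side C++ port of its three steps: decode (green flip, then [0, 1] to [-1, 1]), the row-vector multiply by the `(cross(N, T), T, N)` basis, and the Lambert term. The function names are hypothetical; the math mirrors `PsNormMap` as written.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

struct Vec3 { float x, y, z; };

Vec3 add(Vec3 a, Vec3 b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
Vec3 scale(Vec3 a, float s) { return {a.x * s, a.y * s, a.z * s}; }
float dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
Vec3 cross(Vec3 a, Vec3 b) {
    return {a.y * b.z - a.z * b.y, a.z * b.x - a.x * b.z, a.x * b.y - a.y * b.x};
}
Vec3 normalize(Vec3 a) { return scale(a, 1.0f / std::sqrt(dot(a, a))); }

// Unpack a [0, 1] normal-map texel like PsNormMap: flip green, then map to [-1, 1].
Vec3 decode_normal(Vec3 texel) {
    texel.y = 1.0f - texel.y;
    return normalize(Vec3{texel.x * 2.0f - 1.0f, texel.y * 2.0f - 1.0f, texel.z * 2.0f - 1.0f});
}

// Row vector times the shader's basis: n.x*mat[0] + n.y*mat[1] + n.z*mat[2],
// with mat[0] = cross(N, T), mat[1] = T, mat[2] = N.
Vec3 to_world(Vec3 n, Vec3 normal, Vec3 tangent) {
    Vec3 right = cross(normal, tangent);
    return normalize(add(add(scale(right, n.x), scale(tangent, n.y)), scale(normal, n.z)));
}

// Directional Lambert term: max(dot(N, -LightDir), 0).
float lambert(Vec3 n, Vec3 light_dir) {
    return std::max(dot(n, normalize(scale(light_dir, -1.0f))), 0.0f);
}
```

A flat texel `(0.5, 0.5, 1.0)` comes out as the surface normal, and a pure red texel `(1.0, 0.5, 0.5)` comes out along `cross(N, T)`. Feeding a few such texels through is a quick way to see whether the basis rows point where you expect when the lighting looks rotated.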

Here are some shader-side quaternion and axis-angle matrix functions.

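```hlsl
// Quaternions: mostly copy-pasted, a couple I made; none are tested.

// Rigid transform: pos.xyz = translation, pos.w = uniform scale, rot = quaternion (xyz vector, w scalar).
struct Spatial { float4 pos, rot; };

// Rotate vector v by unit quaternion q.
float3 qrot(float4 q, float3 v) { return v + 2.0 * cross(q.xyz, cross(q.xyz, v) + q.w * v); }

// Rotate vector (alternative form, same result for a unit q).
float3 qrot_2(float4 q, float3 v) { return v * (q.w * q.w - dot(q.xyz, q.xyz)) + 2.0 * q.xyz * dot(q.xyz, v) + 2.0 * q.w * cross(q.xyz, v); }

// Combine quaternions (Hamilton product): qmul(a, b) rotates by b first, then a.
float4 qmul(float4 a, float4 b) { return float4(cross(a.xyz, b.xyz) + a.xyz * b.w + b.xyz * a.w, a.w * b.w - dot(a.xyz, b.xyz)); }

// Inverse quaternion (the conjugate, which is the inverse only for unit quaternions).
float4 qinv(float4 q) { return float4(-q.xyz, q.w); }
```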
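Since none of these are tested, here is a C++ port of `qrot`, `qrot_2`, `qmul` and `qinv` (same formulas, `Quat` stores xyz as the vector part and w as the scalar, like the `float4`) that can be checked on the CPU. `Quat`, `Vec3` and `approx_eq` are hypothetical helpers.

```cpp
#include <cassert>
#include <cmath>

struct Vec3 { float x, y, z; };
struct Quat { float x, y, z, w; };  // xyz = vector part, w = scalar part

Vec3 add(Vec3 a, Vec3 b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
Vec3 scale(Vec3 a, float s) { return {a.x * s, a.y * s, a.z * s}; }
float dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
Vec3 cross(Vec3 a, Vec3 b) {
    return {a.y * b.z - a.z * b.y, a.z * b.x - a.x * b.z, a.x * b.y - a.y * b.x};
}
Vec3 vec(Quat q) { return {q.x, q.y, q.z}; }

// qrot: v + 2*cross(u, cross(u, v) + w*v), q assumed unit length.
Vec3 qrot(Quat q, Vec3 v) {
    Vec3 u = vec(q);
    return add(v, scale(cross(u, add(cross(u, v), scale(v, q.w))), 2.0f));
}

// qrot_2: v*(w^2 - |u|^2) + 2*u*dot(u, v) + 2*w*cross(u, v).
Vec3 qrot_2(Quat q, Vec3 v) {
    Vec3 u = vec(q);
    return add(add(scale(v, q.w * q.w - dot(u, u)), scale(u, 2.0f * dot(u, v))),
               scale(cross(u, v), 2.0f * q.w));
}

// qmul: Hamilton product a*b.
Quat qmul(Quat a, Quat b) {
    Vec3 ua = vec(a), ub = vec(b);
    Vec3 xyz = add(add(cross(ua, ub), scale(ua, b.w)), scale(ub, a.w));
    return {xyz.x, xyz.y, xyz.z, a.w * b.w - dot(ua, ub)};
}

// qinv: conjugate (inverse for unit quaternions).
Quat qinv(Quat q) { return {-q.x, -q.y, -q.z, q.w}; }

bool approx_eq(Vec3 a, Vec3 b, float eps = 1e-5f) {
    return std::fabs(a.x - b.x) < eps && std::fabs(a.y - b.y) < eps && std::fabs(a.z - b.z) < eps;
}
```

A 90 degree turn about +Z is `(0, 0, sqrt(0.5), sqrt(0.5))`; with it both rotate functions send +X to +Y, and `qrot(qmul(a, b), v)` matches `qrot(a, qrot(b, v))`.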

```hlsl
// Transform by Spatial (forward): scale, rotate, then translate.
float3 trans_for(float3 v, Spatial s) { return qrot(s.rot, v * s.pos.w) + s.pos.xyz; }

// Transform by Spatial (inverse).
float3 trans_inv(float3 v, Spatial s) { return qrot(float4(-s.rot.xyz, s.rot.w), (v - s.pos.xyz) / s.pos.w); }

// Perspective projection.
float4 get_projection(float3 v, float4 pr) { return float4(v.xy * pr.xy, v.z * pr.z + pr.w, -v.z); }

// Quaternion from axis-angle; hopefully I did this right.
// Only xyz of the axis is used, so it takes a float3; normalizing keeps the result a unit quaternion.
float4 axis_angle(float3 axis, float angle)
{
    float ha = angle * 0.5f;
    float s = sin(ha);
    float c = cos(ha);
    return float4(normalize(axis) * s, c);
}
```
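Here is a C++ sketch for checking `axis_angle` together with `trans_for`/`trans_inv`. It assumes the axis is normalized inside, as in the float3 version above. The `Spatial` here mirrors the shader's packing (translation plus a separate uniform scale standing in for `pos.w`); all names are hypothetical CPU-side helpers.

```cpp
#include <cassert>
#include <cmath>

struct Vec3 { float x, y, z; };
struct Quat { float x, y, z, w; };  // xyz = vector part, w = scalar part

// Mirrors the shader's Spatial: pos.xyz = translation, pos.w = uniform scale.
struct Spatial { Vec3 pos; float uniform_scale; Quat rot; };

Vec3 add(Vec3 a, Vec3 b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
Vec3 sub(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
Vec3 scale(Vec3 a, float s) { return {a.x * s, a.y * s, a.z * s}; }
float dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
Vec3 cross(Vec3 a, Vec3 b) {
    return {a.y * b.z - a.z * b.y, a.z * b.x - a.x * b.z, a.x * b.y - a.y * b.x};
}
Vec3 normalize(Vec3 a) { return scale(a, 1.0f / std::sqrt(dot(a, a))); }

// Same formula as the shader's qrot; q must be unit length.
Vec3 qrot(Quat q, Vec3 v) {
    Vec3 u{q.x, q.y, q.z};
    return add(v, scale(cross(u, add(cross(u, v), scale(v, q.w))), 2.0f));
}

// axis_angle: (normalize(axis) * sin(angle/2), cos(angle/2)).
Quat axis_angle(Vec3 axis, float angle) {
    Vec3 a = normalize(axis);
    float s = std::sin(angle * 0.5f);
    return {a.x * s, a.y * s, a.z * s, std::cos(angle * 0.5f)};
}

// trans_for: scale, rotate, then translate.
Vec3 trans_for(Vec3 v, const Spatial& s) {
    return add(qrot(s.rot, scale(v, s.uniform_scale)), s.pos);
}

// trans_inv: undo trans_for using the conjugate rotation.
Vec3 trans_inv(Vec3 v, const Spatial& s) {
    Quat inv{-s.rot.x, -s.rot.y, -s.rot.z, s.rot.w};
    return qrot(inv, scale(sub(v, s.pos), 1.0f / s.uniform_scale));
}

bool approx_eq(Vec3 a, Vec3 b, float eps = 1e-4f) {
    return std::fabs(a.x - b.x) < eps && std::fabs(a.y - b.y) < eps && std::fabs(a.z - b.z) < eps;
}
```

A quarter turn built from the non-unit axis `(0, 0, 2)` still sends +X to +Y, and `trans_inv(trans_for(p, s), s)` should give back `p`.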

```hlsl
// Matrix: this one I just translated straight from MonoGame's Matrix.CreateFromAxisAngle.
// The axis must be unit length; the result is meant for row vectors, e.g. mul(float4(v, 1), m).
float4x4 CreateFromAxisAngle(float3 axis, float angle)
{
    float x = axis.x;
    float y = axis.y;
    float z = axis.z;
    float s = sin(angle);
    float c = cos(angle);
    float xx = x * x;
    float yy = y * y;
    float zz = z * z;
    float nxy = x * y;
    float nxz = x * z;
    float nyz = y * z;

    float4x4 result;
    result._m00 = xx + (c * (1.0f - xx));
    result._m01 = (nxy - (c * nxy)) + (s * z);
    result._m02 = (nxz - (c * nxz)) - (s * y);
    result._m03 = 0.0f;

    result._m10 = (nxy - (c * nxy)) - (s * z);
    result._m11 = yy + (c * (1.0f - yy));
    result._m12 = (nyz - (c * nyz)) + (s * x);
    result._m13 = 0.0f;

    result._m20 = (nxz - (c * nxz)) + (s * y);
    result._m21 = (nyz - (c * nyz)) - (s * x);
    result._m22 = zz + (c * (1.0f - zz));
    result._m23 = 0.0f;

    result._m30 = 0.0f;
    result._m31 = 0.0f;
    result._m32 = 0.0f;
    result._m33 = 1.0f;

    return result;
}
```
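To sanity-check the translated matrix, here is a C++ port (`m[r][c]` corresponds to `result._m<r><c>`) compared against Rodrigues' rotation formula. It treats the input as a row vector, the way the rest of the shader uses `mul(v, M)`. All names are hypothetical, and the axis is assumed to be normalized.

```cpp
#include <array>
#include <cassert>
#include <cmath>

using Mat4 = std::array<std::array<float, 4>, 4>;  // m[row][col], like HLSL's _mRC
struct Vec3 { float x, y, z; };

float dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
Vec3 cross(Vec3 a, Vec3 b) {
    return {a.y * b.z - a.z * b.y, a.z * b.x - a.x * b.z, a.x * b.y - a.y * b.x};
}
Vec3 normalize(Vec3 a) {
    float inv = 1.0f / std::sqrt(dot(a, a));
    return {a.x * inv, a.y * inv, a.z * inv};
}
bool approx_eq(Vec3 a, Vec3 b, float eps = 1e-5f) {
    return std::fabs(a.x - b.x) < eps && std::fabs(a.y - b.y) < eps && std::fabs(a.z - b.z) < eps;
}

// Port of CreateFromAxisAngle; same terms as the shader version.
Mat4 create_from_axis_angle(Vec3 axis, float angle) {
    float x = axis.x, y = axis.y, z = axis.z;
    float s = std::sin(angle), c = std::cos(angle);
    float xx = x * x, yy = y * y, zz = z * z;
    float xy = x * y, xz = x * z, yz = y * z;
    Mat4 m{};
    m[0] = {xx + c * (1 - xx), xy - c * xy + s * z, xz - c * xz - s * y, 0};
    m[1] = {xy - c * xy - s * z, yy + c * (1 - yy), yz - c * yz + s * x, 0};
    m[2] = {xz - c * xz + s * y, yz - c * yz - s * x, zz + c * (1 - zz), 0};
    m[3] = {0, 0, 0, 1};
    return m;
}

// Row vector times the upper 3x3, i.e. mul(float4(v, 0), m) for a direction.
Vec3 transform_dir(Vec3 v, const Mat4& m) {
    return {v.x * m[0][0] + v.y * m[1][0] + v.z * m[2][0],
            v.x * m[0][1] + v.y * m[1][1] + v.z * m[2][1],
            v.x * m[0][2] + v.y * m[1][2] + v.z * m[2][2]};
}

// Reference: Rodrigues' formula v*c + (k x v)*s + k*(k.v)*(1 - c), k unit length.
Vec3 rodrigues(Vec3 v, Vec3 k, float angle) {
    float c = std::cos(angle), s = std::sin(angle);
    Vec3 kxv = cross(k, v);
    float kv = dot(k, v) * (1.0f - c);
    return {v.x * c + kxv.x * s + k.x * kv,
            v.y * c + kxv.y * s + k.y * kv,
            v.z * c + kxv.z * s + k.z * kv};
}
```

If the port agrees with Rodrigues for an arbitrary axis, the matrix rotates the same way (right-hand rule) as `axis_angle` plus `qrot`. That makes it easy to swap one for the other.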
