I’ve been working on this problem for a couple of weeks, and I’m probably approaching it wrong. At any rate, I’ve written a shader (below) that draws everything in shades of grey according to its Y-position (which is equivalent to depth for the purposes of my camera).

/////////////

// GLOBALS //

/////////////

cbuffer MatrixBuffer

{

float4x4 xViewProjection;

};

Texture2D colorTexture;

sampler s0 = sampler_state

{

texture = <colorTexture>;

magfilter = LINEAR;

minfilter = LINEAR;

mipfilter = LINEAR;

//AddressU = mirror;

//AddressV = mirror;

};

sampler colorSampler = sampler_state

{

Texture = <colorTexture>;

};

//////////////

// TYPEDEFS //

//////////////

struct VertexToPixel

{

float4 Position : POSITION; // reserved for Pixel Shader internals

float4 worldPos : TEXCOORD0; // world position of the texture

float2 texPos : TEXCOORD1; // actual texture coords

float2 screenPos : TEXCOORD2; // screen position of the pixel?

};

////////////////////////////////////////////////////////////////////////////////

// Vertex Shader

////////////////////////////////////////////////////////////////////////////////

VertexToPixel DepthVertexShader(float4 inpos : POSITION, float2 inTexCoords : TEXCOORD0)

{

VertexToPixel output;

// Set w to 1 so the position is a proper 4-component vector for the matrix multiply.

inpos.w = 1.0f;

// Transform the vertex by the combined view-projection matrix.

output.Position = mul(inpos, xViewProjection);

// Pass the texture coordinates through, and store the positions for the depth value calculation.

output.texPos = inTexCoords;

output.worldPos = inpos;

output.screenPos = output.Position.xy;

return output;

}

////////////////////////////////////////////////////////////////////////////////

// Pixel Shader

////////////////////////////////////////////////////////////////////////////////

float4 DepthPixelShader(VertexToPixel input) : COLOR0 // : SV_TARGET

{

// Gray the texture to its y-value (approx) and alpha test

float4 depthColor = tex2D(colorSampler, input.texPos);

depthColor.a = round(depthColor.a);

float yval = (1 + input.worldPos.y) * 0.1 * depthColor.a;

depthColor.r = yval;

depthColor.g = yval;

depthColor.b = yval;

return depthColor;

}

technique Simplest

{

pass Pass0

{

VertexShader = compile vs_4_0_level_9_1 DepthVertexShader();

PixelShader = compile ps_4_0_level_9_1 DepthPixelShader();

}

}

I am now trying to read this texture from another shader and use it as a depth buffer, so that lighting effects only affect things with a lower y-value.
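
A minimal sketch of the kind of lookup this implies, not a tested implementation. It assumes the grey value was written by `DepthPixelShader` above as `(1 + y) * 0.1`, that the render target stores values in the 0 to 1 range, and that the pixel shader already receives screen-space texture coordinates. The names `depthTexture`, `depthSampler`, `lightHeight`, `lightColor`, `LightVertexToPixel`, and `MaskedLightPixelShader` are hypothetical and do not appear in my actual code.

```hlsl
// Hypothetical parameters, set from the game code (assumptions for this sketch).
Texture2D depthTexture;      // the grey "depth" render target drawn by the first shader
float lightHeight;           // y-value of the light, in the same units as worldPos.y
float4 lightColor;           // colour contributed by the light

sampler depthSampler = sampler_state
{
    Texture = <depthTexture>;
};

struct LightVertexToPixel
{
    float4 Position : POSITION;   // reserved for pixel shader internals
    float2 screenUV : TEXCOORD0;  // assumed to be screen-space coords in [0, 1]
};

float4 MaskedLightPixelShader(LightVertexToPixel input) : COLOR0
{
    float4 depthSample = tex2D(depthSampler, input.screenUV);

    // Undo the encoding grey = (1 + y) * 0.1 used by DepthPixelShader.
    float storedY = depthSample.r * 10.0f - 1.0f;

    // step(a, b) is 1 when b >= a, so this is 1 where the stored y-value
    // is at or below the light. Multiplying by alpha skips empty pixels.
    float lit = step(storedY, lightHeight) * depthSample.a;

    return lightColor * lit;
}
```

With an 8-bit render target, the recovered y-value is limited to roughly -1 to 9 and is quantised, so the comparison is only approximate.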

I have been reading everything I can in my off time about render targets, depth buffers, and the inability to reuse depth buffers between render targets in XNA/MonoGame. This is my attempt to get around that.

My question is this: in a second (similar) shader, I’ve attempted to draw this texture, unchanged, over a second set of polygons. I’ve tried using the world, screen, and texture positions, to no avail. For reference, I’ve included the second (failing) shader:

sampler s0;

Texture2D colorTexture;

sampler currSampler = sampler_state

{

Texture = <colorTexture>;

};

float4x4 xViewProjection;

struct VertexToPixel

{

float4 Position : POSITION; // reserved for Pixel Shader internals

float4 worldPos : TEXCOORD0; // world position of the texture

float2 texPos : TEXCOORD1; // actual texture coords

float2 screenPos : TEXCOORD2; // screen position of the pixel?

};

VertexToPixel SimplestVertexShader(float4 inpos : POSITION, float2 inTexCoords : TEXCOORD0)

{

VertexToPixel output;

// Set w to 1 so the position is a proper 4-component vector for the matrix multiply.

inpos.w = 1.0f;

// Transform the vertex by the combined view-projection matrix.

output.Position = mul(inpos, xViewProjection);

// Pass the texture coordinates and positions through to the pixel shader.

output.texPos = inTexCoords;

output.worldPos = inpos;

output.screenPos = output.Position.xy;

return output;

}

float4 DrawDepthBufferAgain(VertexToPixel input) : COLOR0

{

float4 depthColor = tex2D(currSampler, input.texPos);

return depthColor;

}

technique Simplest

{

pass Pass0

{

VertexShader = compile vs_4_0_level_9_1 SimplestVertexShader();

PixelShader = compile ps_4_0_level_9_1 DrawDepthBufferAgain();

}

}

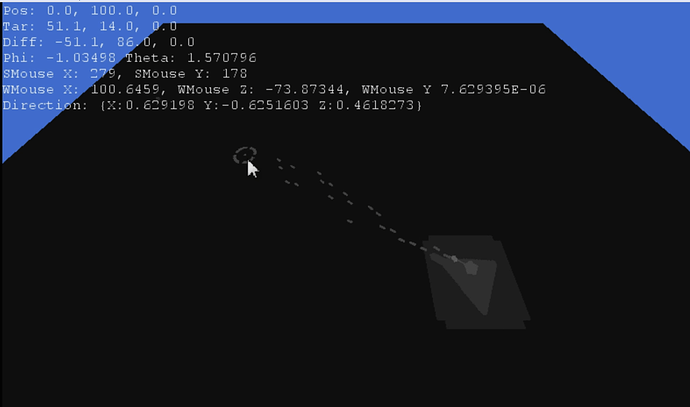

The problem with this second shader is that it draws the render target “magnified” and “twisted” (by pi/2) due to the re-application of the ViewProjection matrix.
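
One possible way around this, sketched below, is to look the render target up by screen position instead of by the mesh's own texture coordinates. This is an untested sketch. It assumes the render target covers the whole viewport and was drawn with the same `xViewProjection`, and that Direct3D texture conventions apply (v grows downward). The names `depthTexture`, `depthSampler`, `ScreenVertexToPixel`, `ScreenSpaceVertexShader`, and `ScreenSpacePixelShader` are hypothetical.

```hlsl
float4x4 xViewProjection;

Texture2D depthTexture; // hypothetical: the render target drawn by the first shader

sampler depthSampler = sampler_state
{
    Texture = <depthTexture>;
};

struct ScreenVertexToPixel
{
    float4 Position : POSITION;  // reserved for pixel shader internals
    float4 clipPos  : TEXCOORD0; // copy of the clip-space position, all four components
};

ScreenVertexToPixel ScreenSpaceVertexShader(float4 inpos : POSITION)
{
    ScreenVertexToPixel output;

    inpos.w = 1.0f;
    output.Position = mul(inpos, xViewProjection);

    // Keep w as well as xy: the divide has to happen per pixel.
    output.clipPos = output.Position;

    return output;
}

float4 ScreenSpacePixelShader(ScreenVertexToPixel input) : COLOR0
{
    // Perspective divide: clip space to normalized device coordinates in [-1, 1].
    float2 ndc = input.clipPos.xy / input.clipPos.w;

    // Map to texture coordinates in [0, 1], flipping y because v grows downward.
    float2 uv = float2(ndc.x * 0.5f + 0.5f, -ndc.y * 0.5f + 0.5f);

    return tex2D(depthSampler, uv);
}
```

The difference from the failing shader is that `screenPos` there is a `float2` taken straight from the clip-space position, without the divide by w or the remap from [-1, 1] to [0, 1].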

Any help for my conundrum (including how to just reuse the depth buffer effectively) would be greatly appreciated. Thank you for your time.