Which method do you use to reconstruct the position from depth? I guess you reconstruct view space position?

yes, I do.

multiply with inverse view and you have world coordinates

Sorry, I should have made it clearer. I meant the method for view space reconstruction from depth.

I work with frustum corners to get the camera view direction and multiply by depth from depth buffer.

VS

output.ViewRay = GetFrustumRay(input.TexCoord); // lerp with the texCoords to interpolate bilinearly between the frustum corners

PS

//Get our current Position in viewspace

float linearDepth = DepthMap.Sample(texSampler, texCoord).r;

float3 positionVS = input.ViewRay * linearDepth;

How does GetFrustumRay work? Ah found it… Interpolating (bilinearly) between the four FarPlane view space frustum corners like the comment says ^^
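For anyone who wants to check the math outside a shader, here is a minimal Python sketch of the idea. It is only an illustration and not the actual GetFrustumRay code. It assumes a right-handed view space looking down -Z, texture coordinates with (0, 0) at the top-left, and a depth buffer that stores view-space depth divided by the far clip distance. All function names are made up for the example.

```python
import math

def far_plane_corners(fov_y, aspect, far):
    """View-space far-plane corners (assumed right-handed, camera looks down -Z)."""
    half_h = math.tan(fov_y / 2.0) * far
    half_w = half_h * aspect
    return {
        "tl": (-half_w,  half_h, -far),
        "tr": ( half_w,  half_h, -far),
        "bl": (-half_w, -half_h, -far),
        "br": ( half_w, -half_h, -far),
    }

def lerp3(a, b, t):
    """Component-wise linear interpolation between two 3D tuples."""
    return tuple(x + (y - x) * t for x, y in zip(a, b))

def frustum_ray(corners, u, v):
    """Bilinear interpolation between the four corners; (u, v) = (0, 0) is top-left."""
    top = lerp3(corners["tl"], corners["tr"], u)
    bottom = lerp3(corners["bl"], corners["br"], u)
    return lerp3(top, bottom, v)

def reconstruct_view_pos(corners, u, v, linear_depth):
    """positionVS = ViewRay * linearDepth, with linearDepth in the 0..1 range."""
    ray = frustum_ray(corners, u, v)
    return tuple(c * linear_depth for c in ray)

# Example: the centre of the screen at half the far distance.
corners = far_plane_corners(math.radians(60.0), 16.0 / 9.0, 100.0)
centre = reconstruct_view_pos(corners, 0.5, 0.5, 0.5)
```

Because the ray ends on the far plane, multiplying it by a depth normalized to the far distance lands exactly on the original view-space position, which is also why no normalization of the ray is needed.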

Is it possible to reconstruct world space position from depth using the far plane frustum corners in world space directly without multiplying with the inverse view matrix?

possibly.

They would have to be in world space relative to camera and then add camera position afterwards

So if I use the GetCorners method of the BoundingFrustum class, the corners are in world space. Then the next step would be to subtract the camera position and pass these over to the shaders?

well if you do it like you do with viewspace, which is

viewDir = GetFromFrustums()…

position = viewDir * depthFromDepthBuffer, your position would scale from 0 to the frustum corners

but what you want is from camera to frustum corners, so your viewdir has to be the vector from camera to corner, aka cornerPosition - cameraPosition.

so it would be

viewDir = getFromFrustum (which is the edge position - camera)

position = viewDir * depthFromDepthBuffer + cameraPosition
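A small numeric check of the formula above, written in Python rather than HLSL. It is a sketch under assumptions: the camera only has a yaw rotation, the depth buffer stores view-space depth divided by the far clip distance, and a single ray is used instead of interpolating between the four corners. The helper names are hypothetical.

```python
import math

def add(a, b):
    return tuple(x + y for x, y in zip(a, b))

def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))

def scale(a, s):
    return tuple(x * s for x in a)

def rotate_y(v, angle):
    """Rotate a vector around the world Y axis (camera yaw)."""
    c, s = math.cos(angle), math.sin(angle)
    x, y, z = v
    return (c * x + s * z, y, -s * x + c * z)

def view_to_world(p_view, yaw, cam_pos):
    """Inverse view transform for this simple camera: rotate, then translate."""
    return add(rotate_y(p_view, yaw), cam_pos)

def reconstruct_world_pos(view_dir, linear_depth, cam_pos):
    """position = viewDir * depthFromDepthBuffer + cameraPosition."""
    return add(scale(view_dir, linear_depth), cam_pos)

# A point 10 units in front of a camera with a far plane at 100.
cam_pos, yaw, far = (5.0, 1.0, -3.0), 0.7, 100.0
p_view = (1.0, -2.0, -10.0)
linear_depth = -p_view[2] / far

# Where the same ray hits the far plane, expressed in world space.
far_point_world = view_to_world(scale(p_view, far / -p_view[2]), yaw, cam_pos)

# viewDir must be relative to the camera: far-plane point minus camera position.
view_dir = sub(far_point_world, cam_pos)
p_world = reconstruct_world_pos(view_dir, linear_depth, cam_pos)
```

Scaling `far_point_world` directly, without subtracting the camera position first, would scale from the world origin instead of from the camera, which is the mistake described above.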

It works exactly like you wrote

I had a redundant line of code in the middle: a normalization, which of course is not necessary in this case. I mixed some things up when I wrote the code.

I’ve got a question concerning depth z/w vs linear depth.

If I need to do forward rendering after all the deferred stuff with a linear depth, there are two options:

-linearize the forward renders: this would need a custom shader, because BasicEffect can’t do it. Or should it be done on the CPU when sending the matrix? GPUs are faster for matrix transforms

-convert the linear depth back to z/w

Am I right or did I miss something?

2 realistic options:

1 . You do the normal forward rendering as you always would - same as Gbuffer setup. Draw your meshes, send them to the GPU together with world * view * projection.

In the vertex shader, transform the position both to world view projection and to world view. Use the world view depth to test against the linear depth buffer in the pixel shader and discard hidden pixels.

2 . (Probably better)

Recreate the z/w depth buffer by drawing a fullscreen quad and then render your forward rendered meshes like you always would.

In case you want a sample implementation I think my ReconstructDepth.fx from the deferred Engine is ok (You find it in Shaders / ScreenSpace / ReconstructDepth.fx )
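The math behind option 2 can be sketched roughly like this. This is not the content of ReconstructDepth.fx, just a Python illustration that assumes the linear depth is stored as view-space distance divided by the far plane, and that the projection maps depth to the 0..1 range (near plane to 0, far plane to 1). The function names are made up.

```python
def linear_to_zw(linear_depth, near, far):
    """Convert linear depth (distance / far) to the z/w value a 0..1 projection produces."""
    d = linear_depth * far  # positive distance along the view axis
    return far * (d - near) / ((far - near) * d)

def zw_to_linear(zw, near, far):
    """Inverse of linear_to_zw: recover distance / far from z/w."""
    d = near * far / (far - zw * (far - near))
    return d / far

near, far = 0.1, 100.0
zw_mid = linear_to_zw(0.5, near, far)       # halfway to the far plane
back = zw_to_linear(zw_mid, near, far)      # should give 0.5 again
```

In the fullscreen pass, the pixel shader would write the converted value to the depth output, so the regular depth test works for the forward-rendered meshes afterwards. Note how close to 1 `zw_mid` already is, which shows how unevenly z/w distributes precision.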

I only need (for the moment…) forward rendering for the editor, to draw lights, their volume, etc. But if I need to use forward rendering for translucent things, for example, I will have to think about this.

Thanks, I already have the maths needed. But I’m wondering if it is worth the effort to use linear depth, and if speed will not suffer a little from these computations, as MonoGame in WPF is already a little slower than the game itself.

I would go with option 2 explained by @kosmonautgames, this is also what I was doing while using z/w depth.

Yes, it’s simpler with z/w depth. If you don’t need the precision I would stick with z/w. I had the z/w depth encoded to 24 bits (rgb channels) too. So perhaps it would have been enough to just store the z/w depth in a RenderTarget with SurfaceFormat.Single.

As I don’t need “killer” precision on depth, I may just switch to 1-(z/w), which seems to be enough.

I have also read on NVIDIA’s site that there is a trick: reversing the near and far planes. I’ll look into this.

This one is also interesting (I think this is already in the bookmarks 🔗 Useful MonoGame Related Links U-MG-RL, I’m pretty sure I saw mynameismjp.wordpress.com somewhere posted by @kosmonautgames)

https://mynameismjp.wordpress.com/2010/03/22/attack-of-the-depth-buffer/

Is it better to create the linear depth value

-in viewspace (ie after posworldview)

or

-in clip space (homogeneous, i.e. without doing the divide by w)?

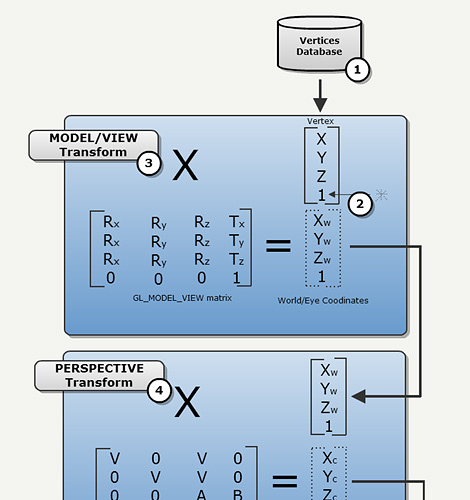

Am I wrong somewhere, according to this:

I do it like the former, so after ModelView transformation. That should work fine.

float4 viewPosition = mul(WorldPosition, View);

output.Depth = viewPosition.z / -FarClip;

Sure. But some developers do this after the perspective transform, but before the divide.

Is it faster in the viewspace ?

it’s the same speed, one matrix multiplication for the step.

The only question is what you want to do with it. The projected depth is not linear in world space, but it is linear in view projection space, so that’s very useful, too.
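To compare the two variants numerically, here is a small Python sketch. It assumes a right-handed perspective matrix in the row-vector convention with a 0..1 depth range (the layout I would expect from Matrix.CreatePerspectiveFieldOfView, but treat that as an assumption). With such a matrix the clip-space w is just the negated view-space z, so both approaches store the same linear value, while z/w after the divide is not linear in distance.

```python
import math

def perspective_rh(fov_y, aspect, near, far):
    """Right-handed perspective matrix, row-vector convention (v * M), depth 0..1."""
    y_scale = 1.0 / math.tan(fov_y / 2.0)
    x_scale = y_scale / aspect
    return [
        [x_scale, 0.0, 0.0, 0.0],
        [0.0, y_scale, 0.0, 0.0],
        [0.0, 0.0, far / (near - far), -1.0],
        [0.0, 0.0, near * far / (near - far), 0.0],
    ]

def mul_vec_mat(v, m):
    """Multiply a 4-component row vector by a 4x4 matrix."""
    return [sum(v[i] * m[i][j] for i in range(4)) for j in range(4)]

near, far = 0.1, 100.0
proj = perspective_rh(math.radians(60.0), 16.0 / 9.0, near, far)

view_pos = [1.0, -2.0, -10.0, 1.0]
clip_pos = mul_vec_mat(view_pos, proj)

depth_from_view = view_pos[2] / -far   # viewPosition.z / -FarClip
depth_from_clip = clip_pos[3] / far    # clip-space w before the divide
depth_zw = clip_pos[2] / clip_pos[3]   # non-linear post-divide depth
```

Here `depth_from_view` and `depth_from_clip` both come out as 0.1 (up to rounding), while `depth_zw` is already above 0.99 for a point at only a tenth of the far distance.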