Hi MonoGame Community,

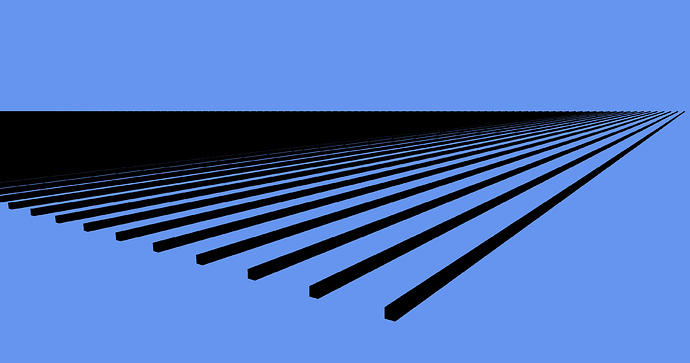

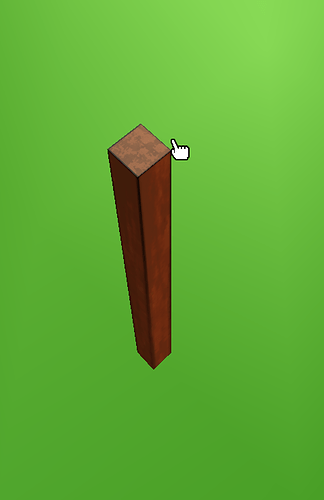

I have recently moved from 2D development to 3D development and have been trying to learn hardware instancing so I can tile ground objects.

I have followed some of the examples posted here, but I am unsure how to resolve one issue. This is the error I currently get when I try to instance a model:

An error occurred while preparing to draw. This is probably because the current vertex declaration does not include all the elements required by the current vertex shader. The current vertex declaration includes these elements: SV_Position0, NORMAL0, TEXCOORD0, POSITION1, TEXCOORD1.

I have found a few suggested solutions by searching, but I haven’t been able to resolve the error with any of them.

This is the current shader (copied from another user here):

#if OPENGL
#define SV_POSITION POSITION
#define VS_SHADERMODEL vs_3_0
#define PS_SHADERMODEL ps_3_0
#else
#define VS_SHADERMODEL vs_4_0_level_9_1
#define PS_SHADERMODEL ps_4_0_level_9_1
#endif

// Camera settings.
float4x4 World;
float4x4 View;
float4x4 Projection;

// This sample uses a simple Lambert lighting model.
float3 LightDirection;
float3 DiffuseLight;
float3 AmbientLight;

float4 Color;

texture Texture;
sampler CustomSampler = sampler_state
{
    Texture = (Texture);
};

struct VertexShaderInput
{
    float4 Position : POSITION0;
    float3 Normal : NORMAL0;
    float2 TextureCoordinate : TEXCOORD0;
};

struct VertexShaderOutput
{
    float4 Position : SV_POSITION;
    float4 Color : COLOR0;
    float2 TextureCoordinate : TEXCOORD0;
};

VertexShaderOutput VertexShaderFunction(VertexShaderInput input, float4x4 instanceTransform : BLENDWEIGHT)
{
    VertexShaderOutput output;

    // Apply the world and camera matrices to compute the output position.
    float4x4 instancePosition = mul(World, transpose(instanceTransform));
    float4 worldPosition = mul(input.Position, instancePosition);
    float4 viewPosition = mul(worldPosition, View);
    output.Position = mul(viewPosition, Projection);

    // Compute lighting, using a simple Lambert model.
    //float3 worldNormal = mul(input.Normal, instanceTransform);
    //float diffuseAmount = max(-dot(worldNormal, LightDirection), 0);
    //float3 lightingResult = saturate(diffuseAmount * DiffuseLight + AmbientLight);
    output.Color = Color;

    // Copy across the input texture coordinate.
    output.TextureCoordinate = input.TextureCoordinate;

    return output;
}

float4 PixelShaderFunction(VertexShaderOutput input) : COLOR0
{
    return tex2D(CustomSampler, input.TextureCoordinate) * input.Color;
}

// Hardware instancing technique.
technique Instancing
{
    pass Pass1
    {
        VertexShader = compile VS_SHADERMODEL VertexShaderFunction();
        PixelShader = compile PS_SHADERMODEL PixelShaderFunction();
    }
}

From what I understand, I am not passing in something that this shader needs. Is that right? This is the code I am currently using to draw the model:

foreach (var mesh in ThisModel.Meshes)
{
    foreach (var meshPart in mesh.MeshParts)
    {
        // Setting Buffer Bindings here
        GraphicsDevice.SetVertexBuffers(
            new VertexBufferBinding(meshPart.VertexBuffer, meshPart.VertexOffset, 0),
            new VertexBufferBinding(instanceVertexBuffer, 0, 1)
        );

        GraphicsDevice.Indices = meshPart.IndexBuffer;

        Effect effect = Content.Load<Effect>("modelInstance");
        effect.CurrentTechnique = effect.Techniques["Instancing"];
        effect.Parameters["World"].SetValue(modelBones[mesh.ParentBone.Index]);
        effect.Parameters["View"].SetValue(View);
        effect.Parameters["Projection"].SetValue(Projection);
        effect.Parameters["Color"].SetValue(Vector4.One);
        /*effect.Parameters["AmbientLight"].SetValue(Vector3.One);
        effect.Parameters["DiffuseLight"].SetValue(Vector3.Zero);
        effect.Parameters["LightDirection"].SetValue(Vector3.One);*/

        foreach (var pass in effect.CurrentTechnique.Passes)
        {
            pass.Apply();
        }

        GraphicsDevice.DrawInstancedPrimitives(PrimitiveType.TriangleList, 0, meshPart.StartIndex, meshPart.PrimitiveCount, instanceCount);
    }
}

If anyone knows what I might be missing, or could point me in the right direction for understanding what I am doing wrong, please let me know. I have searched for a while and found some examples, but they all seemed to cover different issues.

Thanks for any help.