Wow, that looks VERY impressive! Would this kind of thing be equally viable for a 2D tilemap structure? (Or does it need the texture plane to change angle relative to the camera?)

It would be really nice if it could be layered on top of existing 2d tilemaps, as a slam-dunk improvement to the tileset…

Yep, definitely doable.

I guess you can just fake a tilt-shift effect if you want an isometric / orthographic perspective, and it’s trivial for a side scroller, where you just blur certain layers. If you write a depth map, you can have a non-“fake” DoF, too.

Thanks. It does sound really nice to blur the out-of-focus layers.

How long would you estimate it would take to do such a thing? Or rather, is it an extensive task?

And what is the root or umbrella technology for something like this?

Is it HLSL, or what would be chapter one in approaching this?

[quote=“monopalle, post:42, topic:8326”]

Wow, that looks VERY impressive!

[/quote]

Thank you!

If you have the tiles separated into different layers as render targets, you could simply blur those, for example with a Gaussian blur. There are lots of examples online of how to do a Gaussian blur. Preferably you would do it in two passes, one horizontal and one vertical, in either order. This gives the same result as a single 2D pass but reduces the computational cost: for an N×N kernel, each pixel takes 2N samples instead of N².
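To illustrate the two-pass idea, here is a CPU-side sketch in Python (not shader code) that blurs a small grayscale image with a 1D Gaussian kernel, first horizontally and then vertically, and compares the result with a direct 2D convolution. The radius and sigma are arbitrary example values, and clamp-to-edge sampling is an assumption standing in for a clamp-addressed texture sampler.

```python
import math


def gaussian_kernel(radius, sigma):
    """1D Gaussian weights for offsets -radius..radius, normalised to sum to 1."""
    weights = [math.exp(-(i * i) / (2.0 * sigma * sigma)) for i in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def sample(img, x, y):
    """Clamp-to-edge lookup, standing in for a clamp-addressed texture sampler."""
    h, w = len(img), len(img[0])
    return img[min(max(y, 0), h - 1)][min(max(x, 0), w - 1)]


def blur_pass(img, kernel, dx, dy):
    """One 1D pass: (dx, dy) = (1, 0) is horizontal, (0, 1) is vertical."""
    r = len(kernel) // 2
    return [[sum(kernel[k + r] * sample(img, x + k * dx, y + k * dy) for k in range(-r, r + 1))
             for x in range(len(img[0]))]
            for y in range(len(img))]


def blur_separable(img, radius=2, sigma=1.0):
    """Horizontal pass followed by a vertical pass (the order does not matter)."""
    kernel = gaussian_kernel(radius, sigma)
    return blur_pass(blur_pass(img, kernel, 1, 0), kernel, 0, 1)


def blur_direct_2d(img, radius=2, sigma=1.0):
    """Reference: one pass with the full (2r+1) x (2r+1) kernel, i.e. N*N samples per pixel."""
    kernel = gaussian_kernel(radius, sigma)
    r = radius
    return [[sum(kernel[i + r] * kernel[j + r] * sample(img, x + i, y + j)
                 for i in range(-r, r + 1) for j in range(-r, r + 1))
             for x in range(len(img[0]))]
            for y in range(len(img))]


# Usage: blur a single bright pixel in a 5x5 image both ways.
image = [[0.0] * 5 for _ in range(5)]
image[2][2] = 1.0
separable = blur_separable(image)
direct = blur_direct_2d(image)
```

In a shader implementation, each `blur_pass` would correspond to one fullscreen draw into an intermediate RenderTarget, with the sampling direction passed in as a shader parameter.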

The effect I use is depth of field (DoF). It uses the depth to decide how blurry each pixel should be, so it should also be viable for 2D, provided you have access to the depth, as @kosmonautgames mentioned.

One way to implement DoF is to blur the image first. Then, in a second step, you sample either the sharp or the blurred version of the image, depending on the depth.
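A minimal sketch of that two-step approach, again as Python standing in for shader logic: the blurred image is assumed to be precomputed, and each output pixel blends from the sharp image towards the blurred one according to how far its depth lies from a focal depth. `focus_depth` and `focus_range` are hypothetical parameters, and the linear ramp is just one simple choice. This basic blend does not treat the boundary between in-focus and out-of-focus regions specially.

```python
def saturate(v):
    """Clamp to [0, 1], like the HLSL intrinsic of the same name."""
    return min(max(v, 0.0), 1.0)


def blur_factor(depth, focus_depth, focus_range):
    """0 at the focal depth, rising linearly to 1 at focus_range away from it."""
    return saturate(abs(depth - focus_depth) / focus_range)


def dof_composite(sharp, blurred, depth, focus_depth, focus_range):
    """Per pixel: lerp from the sharp image towards the pre-blurred image."""
    return [[s + (b - s) * blur_factor(d, focus_depth, focus_range)
             for s, b, d in zip(row_s, row_b, row_d)]
            for row_s, row_b, row_d in zip(sharp, blurred, depth)]


# Usage: one row of three pixels at depths 10 (in focus), 12 and 20.
result = dof_composite([[1.0, 1.0, 1.0]], [[0.0, 0.0, 0.0]], [[10.0, 12.0, 20.0]],
                       focus_depth=10.0, focus_range=4.0)
```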

I used a more advanced technique in which in-focus image regions stay sharp even when they border an out-of-focus region. This may not be clearly visible in the image, because I haven’t had time to find better parameter values that make the transition from in-focus to out-of-focus sharper.

Well, in my current project I do have a ‘scale’ for each tile layer; that should work as depth, right?

But you make the Gaussian blur method sound easier. Would that be a kind of HLSL shader?

I think it should work: you could blur more or less based on this pseudo-depth value.
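As a sketch of that idea, the per-layer ‘scale’ could be mapped to a blur radius that grows with its distance from the layer you want in focus. The function name, the linear mapping, and the `focus_scale`, `max_radius` and `falloff` parameters are all hypothetical; any monotonic mapping would do.

```python
def blur_radius_for_layer(layer_scale, focus_scale=1.0, max_radius=8.0, falloff=1.0):
    """Hypothetical mapping from a layer's scale (used as pseudo-depth) to a blur
    radius in pixels: zero at focus_scale, growing linearly, clamped to max_radius."""
    return min(max_radius, abs(layer_scale - focus_scale) * max_radius / falloff)


# Usage: example layers, with the gameplay layer in focus.
layers = {"background": 0.5, "gameplay": 1.0, "foreground": 1.5}
radii = {name: blur_radius_for_layer(scale) for name, scale in layers.items()}
```

Each radius would then be passed to the blur shader when that layer's render target is drawn.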

It can be implemented as a pixel shader. For each pixel, you sample the neighbouring pixels and calculate the average of their values.
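Written out as ordinary Python rather than HLSL, the per-pixel work of such a shader looks roughly like the function below. It averages the (2 * radius + 1)² neighbours of one pixel with equal weights, which is a box blur; a Gaussian blur would weight them instead. Clamping coordinates at the border is an assumption that mirrors a clamp-addressed sampler, and `run_fullscreen_pass` only stands in for the GPU running the shader once per pixel.

```python
def pixel_shader_box_blur(img, x, y, radius):
    """Work done for one output pixel: average the neighbours within `radius`,
    clamping sample coordinates to the image border."""
    h, w = len(img), len(img[0])
    total = 0.0
    count = 0
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            sx = min(max(x + dx, 0), w - 1)
            sy = min(max(y + dy, 0), h - 1)
            total += img[sy][sx]
            count += 1
    return total / count


def run_fullscreen_pass(img, radius):
    """The GPU would run the shader for every pixel in parallel; here we just loop."""
    return [[pixel_shader_box_blur(img, x, y, radius) for x in range(len(img[0]))]
            for y in range(len(img))]
```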

OK, I understand, thanks… The logic sounds easy, if I could ever get my HLSL code to run at all.

Post a new thread on the main page describing exactly what you need, and we’ll find a way to help you.

I’ll make sure to do that once I get back around to it, thanks.

How do you do depth reconstruction?

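In the GBuffer generation step, I calculate the depth as:

```hlsl
// projectedPosition: the clip-space position output by the world-view-projection transform
float depth = projectedPosition.z / projectedPosition.w;
```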
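To see why z/w behaves the way it does, here is a small Python sketch of the value it produces as a function of view distance `d`. It assumes a D3D-style perspective projection that maps the near plane to 0 and the far plane to 1 (I believe this matches XNA/MonoGame’s `Matrix.CreatePerspectiveFieldOfView`, but treat that as an assumption), and includes the inverse, which recovers the linear view distance from a stored z/w value. With near = 0.1 and far = 1000, z/w is already above 0.99 at a distance of 10, so almost the whole [0, 1] range is spent close to the camera.

```python
def ndc_depth(d, near, far):
    """z/w for a D3D-style projection: view distance d maps to [0, 1], near -> 0, far -> 1."""
    return far * (d - near) / (d * (far - near))


def linear_depth(z_ndc, near, far):
    """Inverse of ndc_depth: recover the view distance from a stored z/w value."""
    return far * near / (far - z_ndc * (far - near))


# Usage: print how quickly z/w approaches 1 (near = 0.1, far = 1000).
for d in (0.1, 1.0, 10.0, 100.0, 1000.0):
    print(d, ndc_depth(d, 0.1, 1000.0))
```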

To reconstruct the depth, a fullscreen quad is drawn whose pixel shader writes the depth saved in the GBuffer. After this step, the depth is reconstructed in the currently set RenderTarget, and it is possible to continue with other draw calls.

I have added a post-processing effect similar to the Scene Fringe effect described here: https://docs.unrealengine.com/latest/INT/Engine/Rendering/PostProcessEffects/SceneFringe/
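For readers unfamiliar with scene fringe: it is essentially a chromatic-aberration effect, where the colour channels are sampled at slightly different positions that spread apart towards the screen edges. The sketch below is one simple way to do that, scaling the red lookup outward and the blue lookup inward around the screen centre. It is not necessarily how Unreal or the post above implements it, `intensity` is a hypothetical parameter, and nearest-pixel sampling with clamping stands in for a texture sampler.

```python
def fringe_uvs(u, v, intensity, center=(0.5, 0.5)):
    """Per-channel texture coordinates: red pushed outward, blue pulled inward,
    green unchanged; the shift grows with the distance from the centre."""
    cu, cv = center
    du, dv = u - cu, v - cv
    red = (cu + du * (1.0 + intensity), cv + dv * (1.0 + intensity))
    green = (u, v)
    blue = (cu + du * (1.0 - intensity), cv + dv * (1.0 - intensity))
    return red, green, blue


def apply_fringe(img_rgb, intensity):
    """Apply the effect to an image stored as rows of (r, g, b) tuples."""
    h, w = len(img_rgb), len(img_rgb[0])

    def sample(channel, uv):
        # Nearest-pixel lookup with clamping at the image border.
        x = min(max(int(uv[0] * w), 0), w - 1)
        y = min(max(int(uv[1] * h), 0), h - 1)
        return img_rgb[y][x][channel]

    out = []
    for y in range(h):
        row = []
        for x in range(w):
            u, v = (x + 0.5) / w, (y + 0.5) / h  # pixel centre in [0, 1] texture space
            r_uv, g_uv, b_uv = fringe_uvs(u, v, intensity)
            row.append((sample(0, r_uv), sample(1, g_uv), sample(2, b_uv)))
        out.append(row)
    return out
```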

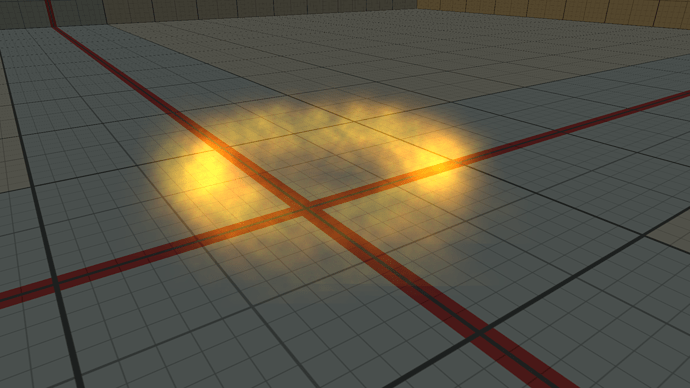

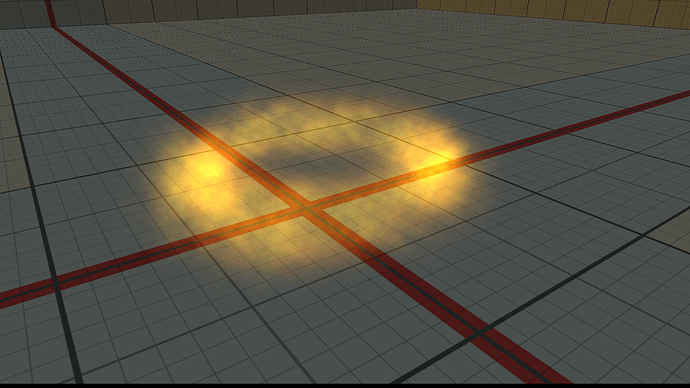

In principle, soft GPU particles are also working. The difference is that for soft particles, the scene depth and the particle depth are used to calculate how transparent the particle should be.
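A common way to compute that fade, sketched in Python: the particle’s alpha is scaled by how far the opaque scene lies behind the particle, so the particle fades out smoothly where it meets geometry instead of showing a hard intersection line. Both depths are assumed to be linear view distances, and `fade_distance` is a hypothetical tuning parameter in the same units.

```python
def saturate(v):
    """Clamp to [0, 1], like the HLSL intrinsic of the same name."""
    return min(max(v, 0.0), 1.0)


def soft_particle_alpha(particle_alpha, scene_depth, particle_depth, fade_distance):
    """Scale the particle's alpha by its distance in front of the scene:
    0 where it touches (or is behind) the scene, full alpha at fade_distance or more."""
    return particle_alpha * saturate((scene_depth - particle_depth) / fade_distance)
```

If the two depths cannot be told apart, the difference is zero and the particle becomes fully transparent.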

The problem is the limited precision of the depth buffer. When the camera is far away from the particles, the scene depth and the particle depth are calculated to be the same value, so the particles fade out.
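A rough Python illustration of that effect, assuming a D3D-style z/w mapping (near plane → 0, far plane → 1) and a 24-bit encoding: it counts how many representable depth steps separate two surfaces one unit apart. The near/far values are example numbers, not the ones used in the project.

```python
STEP_24_BIT = 1.0 / (2 ** 24 - 1)  # smallest difference a 24-bit [0, 1] encoding can store


def ndc_depth(d, near, far):
    """z/w for a D3D-style projection (near plane -> 0, far plane -> 1)."""
    return far * (d - near) / (d * (far - near))


def depth_gap_in_steps(d, delta, near, far):
    """Number of 24-bit steps between surfaces at view distances d and d + delta."""
    return (ndc_depth(d + delta, near, far) - ndc_depth(d, near, far)) / STEP_24_BIT
```

With near = 0.01 and far = 1000, two surfaces one unit apart at a distance of 899 are less than one 24-bit step apart, while the same gap at a distance of 1 spans tens of thousands of steps. Pushing the near plane out (for example to 1.0) improves the far-away resolution considerably, because z/w precision depends strongly on the near distance.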

At the moment, I encode the depth value after the perspective divide (z/w) in the RGB channels of a texture. If anybody has an idea about how to deal with the depth precision problem, I would be happy to hear it.
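For reference, a sketch of one way to split a [0, 1] depth into three 8-bit channels and put it back together, written with integer arithmetic in Python for clarity (in a shader this is usually done with `floor`/`frac` arithmetic on floats). This is an assumption about the general approach, not the encoding used in the project. It gives 2^24 distinct levels, but it does not change how unevenly z/w distributes them over distance.

```python
MAX_24 = 2 ** 24 - 1  # largest value that fits in three 8-bit channels


def encode_depth_rgb(depth):
    """Quantise a depth in [0, 1] to 24-bit fixed point and split it into (r, g, b) bytes."""
    q = int(round(min(max(depth, 0.0), 1.0) * MAX_24))
    return (q >> 16) & 0xFF, (q >> 8) & 0xFF, q & 0xFF


def decode_depth_rgb(r, g, b):
    """Inverse of encode_depth_rgb: rebuild the 24-bit integer and rescale to [0, 1]."""
    return ((r << 16) | (g << 8) | b) / MAX_24
```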

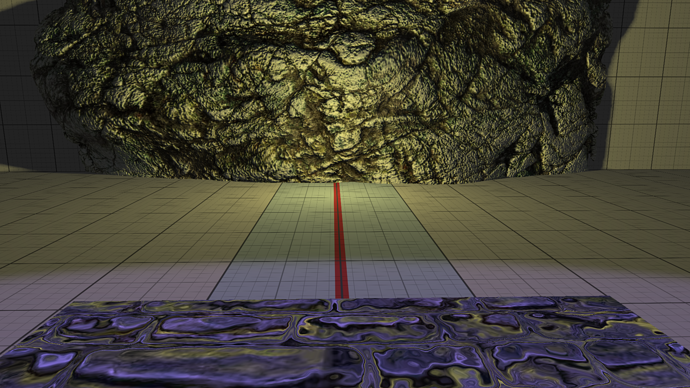

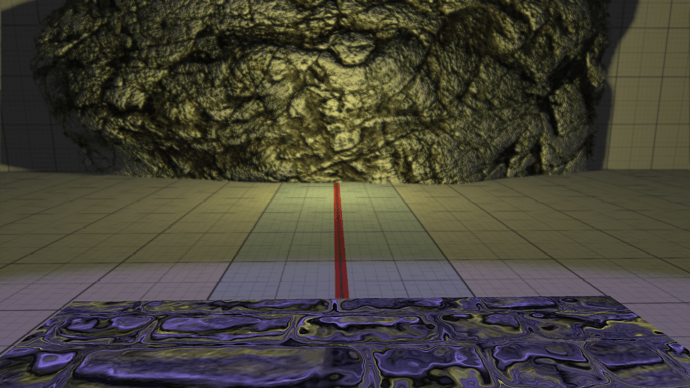

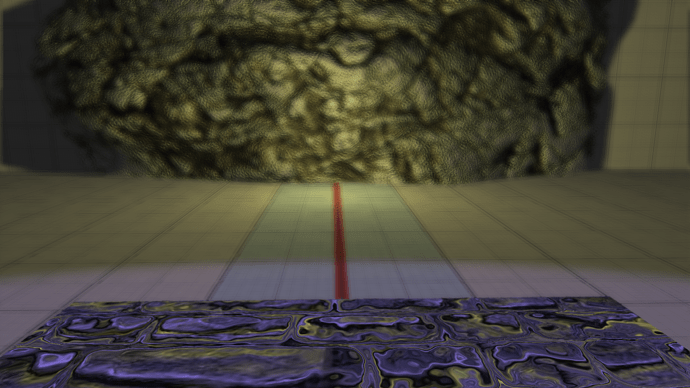

For the implementation of the GPU particles, I used the old XNA sample. Here are some images comparing GPU particles and soft GPU particles.

Great work!

Are you using a linear depth buffer? I think z/w might not be precise enough.

Thank you!

At the moment, I use z/w encoded into 24 bits of a RenderTarget. I think I need more precision for the depth.

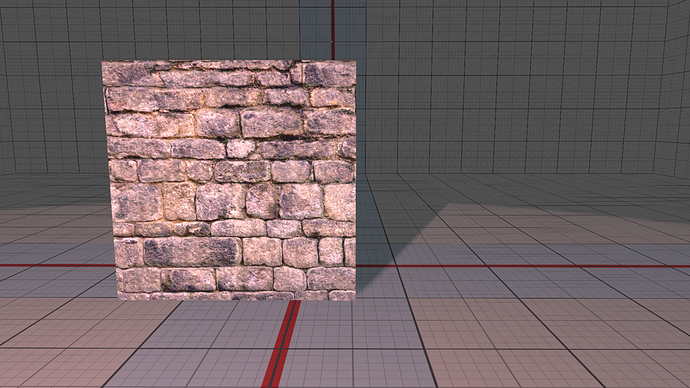

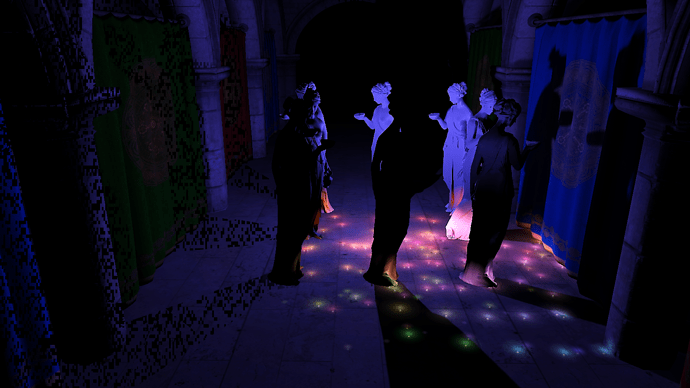

The depth written to the depth RenderTarget of the GBuffer is now linear. This is what the RenderTarget looks like.
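To put numbers on the difference, here is a sketch comparing how much view distance one 24-bit step covers for linear depth versus z/w. Linear depth is assumed to be stored as view distance divided by the far-plane distance (a common choice), and the z/w figure uses the derivative of a D3D-style mapping (near plane → 0, far plane → 1), also an assumption. The near/far values in the checks are examples only.

```python
STEPS = 2 ** 24 - 1  # number of steps in a 24-bit [0, 1] encoding


def resolution_linear(far):
    """View-space distance covered by one step of linear depth (d / far): constant everywhere."""
    return far / STEPS


def resolution_ndc(d, near, far):
    """Approximate view-space distance covered by one step of z/w at distance d,
    from the derivative d(z/w)/dd = far * near / ((far - near) * d**2)."""
    return (far - near) * d * d / (far * near * STEPS)
```

With far = 1000, linear depth resolves about 6e-5 units at every distance, whereas z/w (with near = 0.01) is finer very close to the camera but coarser than a whole unit at a distance of around 900.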

A small update: the CubeMapPointLights seem to work again.