I'm getting weird results with z/w as well; I'll show them in a minute.

I'm going to try that other function and see if it works.

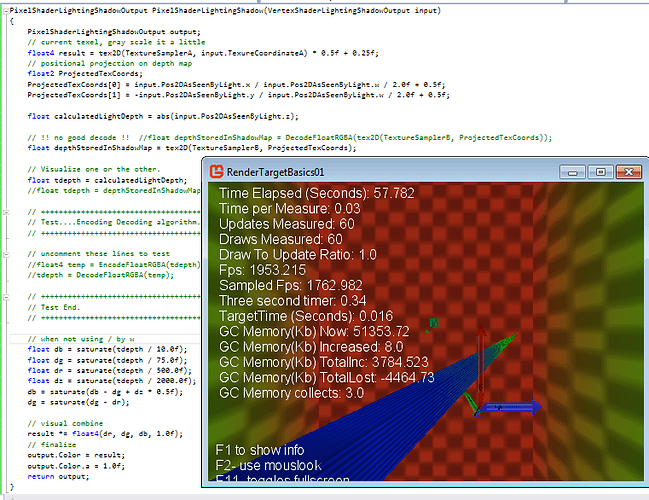

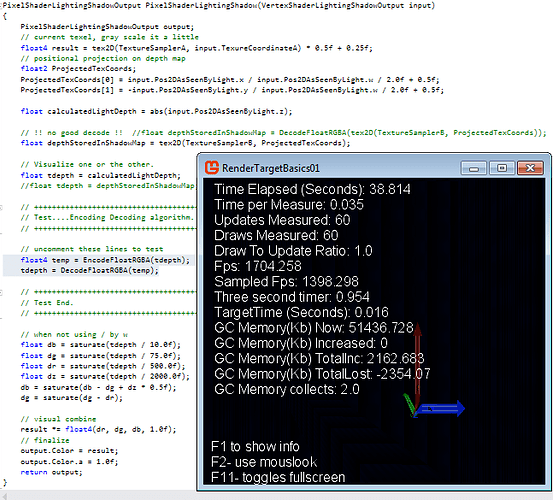

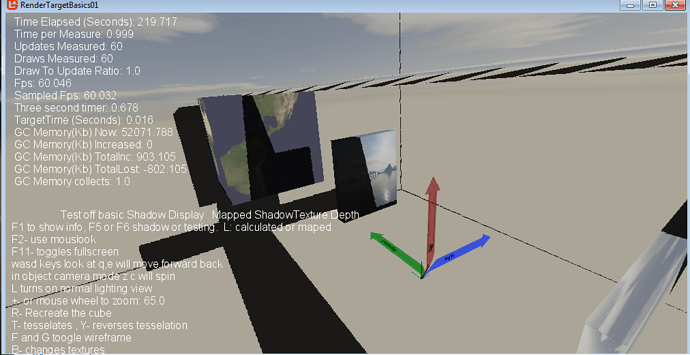

I added pictures so you can see how I tested the encode/decode.

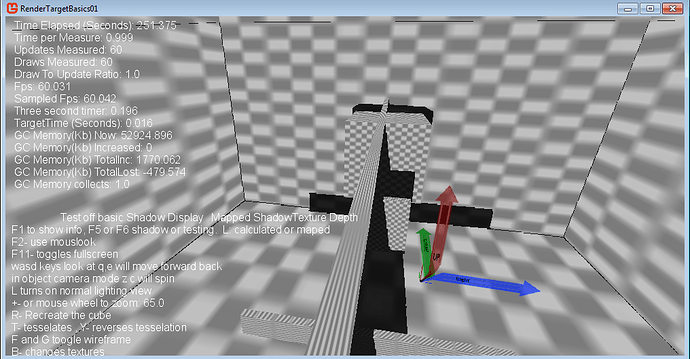

The following pictures all depict depth by color. The texture is white and grey so that it doesn't affect the visual representation.

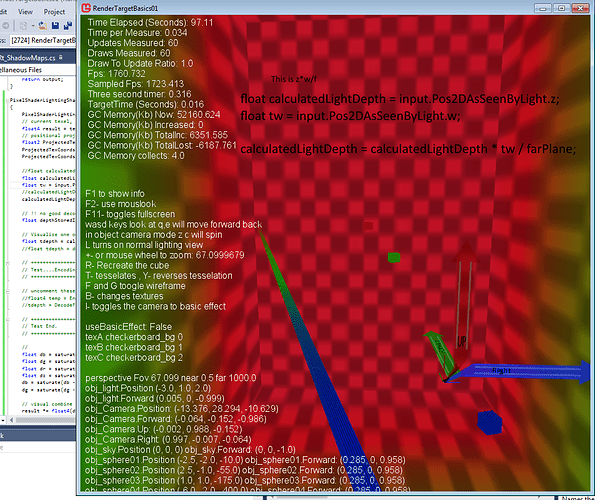

The picture below shows only the z depth calculated from the light's point of view, mapped across a color spectrum. It's not from the depth buffer texture, which is just returning low-precision junk. The code is at the bottom.

Blue = closest.

Green = middle.

Red = farthest.

Note that the visible objects range from 10 to 500 in z, the scene itself is at 550 z (nearly 700 at the corners), and the far plane is set to 750.

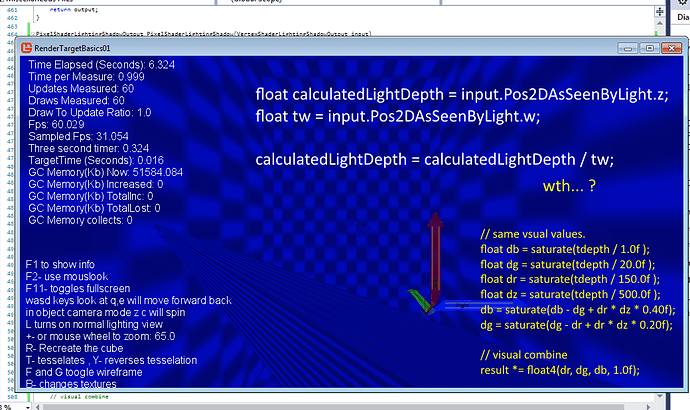

This is the same calculated depth with the encode/decode round trip inserted before visualizing.

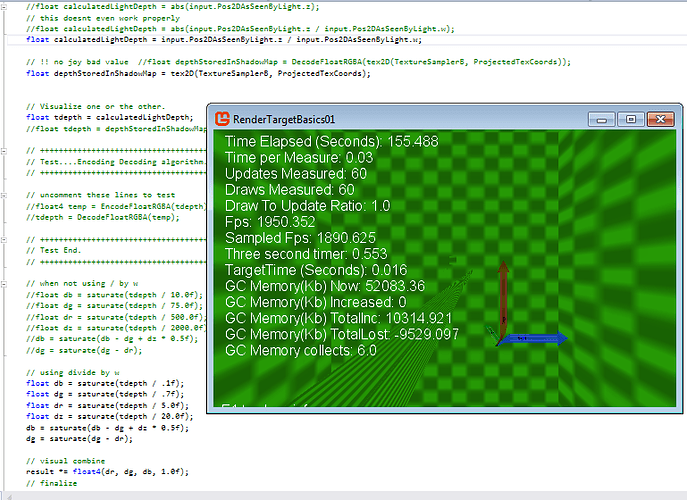
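The encode/decode functions themselves are not shown in this post. If they follow the widely used `frac`-based packing, they only round-trip values in [0, 1). The sketch below is an assumption about what that packing looks like, with hypothetical names so it doesn't get confused with the real `EncodeFloatRGBA` / `DecodeFloatRGBA` at the top.

```hlsl
// Hypothetical stand-ins for the encode/decode pair (assumed, not the code from the top of the thread).
// Packs a value in [0, 1) into four channels.
float4 PackDepthRGBA(float v)
{
    float4 enc = float4(1.0f, 255.0f, 65025.0f, 16581375.0f) * v;
    enc = frac(enc); // the integer part of v is discarded here
    enc -= enc.yzww * float4(1.0f / 255.0f, 1.0f / 255.0f, 1.0f / 255.0f, 0.0f);
    return enc;
}

// Rebuilds the value from the four channels.
float UnpackDepthRGBA(float4 rgba)
{
    return dot(rgba, float4(1.0f, 1.0f / 255.0f, 1.0f / 65025.0f, 1.0f / 16581375.0f));
}
```

With this kind of packing, an in-shader round trip returns `frac(v)`, so a raw z of 10 to 500 would come back as only its fractional part. If that is what is happening here, normalizing the depth into [0, 1) before encoding (for example dividing by the far plane) would be the thing to try.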

Here is the result of the calculated light depth using z divided by w (z/w).

Not what I was expecting. I altered the visual range because otherwise it's just super dark, but also to show that it's doing something weird.

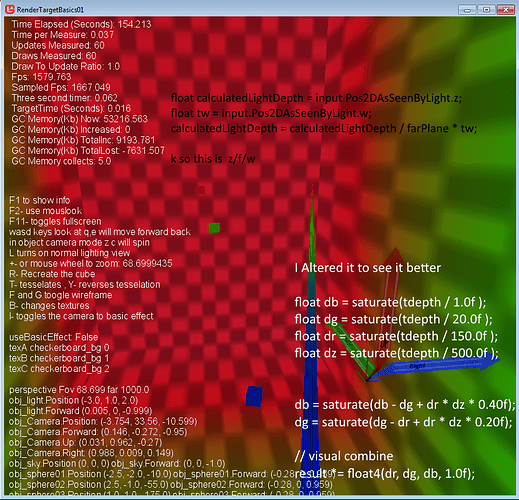
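One possible explanation for the darkness, under assumptions the post does not confirm: that `lightsPovWorldViewProjection` is a standard perspective projection with depth mapped to [0, 1], near plane $n$ and far plane $f$. Writing $d$ for the distance in front of the light, the clip-space values would be:

$$
z_{clip} = \frac{f\,(d - n)}{f - n}, \qquad w_{clip} = d, \qquad \frac{z_{clip}}{w_{clip}} = \frac{f}{f - n}\left(1 - \frac{n}{d}\right)
$$

With $f = 750$ and an assumed $n = 1$ (the near plane isn't stated), $d = 10$ gives $z/w \approx 0.901$ and $d = 500$ gives $z/w \approx 0.999$. So z/w stays inside [0, 1] and crowds toward 1, and dividing it by 10, 75 and 500 for the color bands leaves every channel close to black. Raw z, by contrast, comes out as roughly $1.0013\,(d - 1)$, which fits the 10 to 500 range seen without the divide.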

Here is the shader code; I trimmed it way down.

```hlsl
//_______________________________________________________________
// >>>> LightingShadowPixelShader <<<
//_______________________________________________________________
// gworldviewprojection, lightsPovWorldViewProjection, TextureSamplerA and
// TextureSamplerB are declared elsewhere in the effect file (trimmed from this listing).

struct VertexShaderLightingShadowInput
{
    float4 Position : POSITION0;
    //float3 Normal : NORMAL0;
    //float4 Color : COLOR0;
    float2 TexureCoordinateA : TEXCOORD0;
};

struct VertexShaderLightingShadowOutput
{
    float4 Position : SV_Position;
    //float3 Normal : NORMAL0;
    //float4 Color : COLOR0;
    float2 TexureCoordinateA : TEXCOORD0;
    float4 Pos2DAsSeenByLight : TEXCOORD1; // clip-space position from the light's point of view
    //float4 Position3D : TEXCOORD2;
};

struct PixelShaderLightingShadowOutput
{
    float4 Color : COLOR0;
};

VertexShaderLightingShadowOutput VertexShaderLightingShadow(VertexShaderLightingShadowInput input)
{
    VertexShaderLightingShadowOutput output;
    output.Position = mul(input.Position, gworldviewprojection);
    output.Pos2DAsSeenByLight = mul(input.Position, lightsPovWorldViewProjection);
    output.TexureCoordinateA = input.TexureCoordinateA;
    //output.Position3D = mul(input.Position, gworld);
    //output.Color = input.Color;
    //output.Normal = input.Normal;
    //output.Normal = normalize(mul(input.Normal, (float3x3)gworld));
    return output;
}

PixelShaderLightingShadowOutput PixelShaderLightingShadow(VertexShaderLightingShadowOutput input)
{
    PixelShaderLightingShadowOutput output;

    // current texel, gray scale it a little
    float4 result = tex2D(TextureSamplerA, input.TexureCoordinateA) * 0.5f + 0.25f;

    // positional projection on depth map: clip space [-1, 1] to texture space [0, 1], y flipped
    float2 ProjectedTexCoords;
    ProjectedTexCoords.x = input.Pos2DAsSeenByLight.x / input.Pos2DAsSeenByLight.w / 2.0f + 0.5f;
    ProjectedTexCoords.y = -input.Pos2DAsSeenByLight.y / input.Pos2DAsSeenByLight.w / 2.0f + 0.5f;

    // raw clip-space z, not divided by w
    //float calculatedLightDepth = abs(input.Pos2DAsSeenByLight.z);
    float calculatedLightDepth = input.Pos2DAsSeenByLight.z;

    // !! no good decode !! //float depthStoredInShadowMap = DecodeFloatRGBA(tex2D(TextureSamplerB, ProjectedTexCoords));
    // take the red channel explicitly; assigning the float4 sample to a float truncates implicitly
    float depthStoredInShadowMap = tex2D(TextureSamplerB, ProjectedTexCoords).r;

    // Visualize one or the other.
    float tdepth = calculatedLightDepth;
    //float tdepth = depthStoredInShadowMap;

    // +++++++++++++++++++++++++++++++++++++
    // Test....Encoding Decoding algorithm.
    // +++++++++++++++++++++++++++++++++++++
    // uncomment these lines to test
    //float4 temp = EncodeFloatRGBA(tdepth);
    //tdepth = DecodeFloatRGBA(temp);
    // +++++++++++++++++++++++++++++++++++++
    // Test End.
    // +++++++++++++++++++++++++++++++++++++

    // when not using / by w: color bands scaled for raw z values
    float db = saturate(tdepth / 10.0f);
    float dg = saturate(tdepth / 75.0f);
    float dr = saturate(tdepth / 500.0f);
    float dz = saturate(tdepth / 2000.0f);
    db = saturate(db - dg + dz * 0.5f);
    dg = saturate(dg - dr);

    // visual combine
    result *= float4(dr, dg, db, 1.0f);

    // finalize
    output.Color = result;
    output.Color.a = 1.0f;
    return output;
}
```

If you want the shadow map generation code, it's at the top; it's the same as before.

Edit…

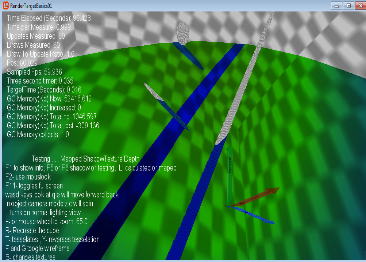

Well, it's looking like the clip plane needs to be used for the direct light calculation. I'm not even worrying about the shadow map encoding yet; I've put that to the side for the moment.

I'm reading everywhere that it is supposed to be z/w to get the actual depth.

However, that is not what I'm seeing whatsoever.

z/f/w

z*w/f

z/w ???
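For comparison, here is a minimal sketch of two depth conventions that could be tested against each other, assuming the light uses a perspective projection. `lightFarPlane` and both function names are hypothetical additions, not part of the shader above. Whichever one is used, the shadow map pass would have to write the same quantity that the lighting pass computes.

```hlsl
// Hypothetical uniform (not in the original shader): the light's far plane
// distance, 750 in the scene described above.
float lightFarPlane;

// Post-projective depth. For a perspective projection this is non-linear
// and most of the scene lands close to 1.
float ProjectedLightDepth(float4 lightClipPos)
{
    return lightClipPos.z / lightClipPos.w;
}

// Linear alternative. For a perspective projection, clip-space w is the
// distance in front of the light, so dividing by the far plane gives [0, 1].
float LinearLightDepth(float4 lightClipPos)
{
    return saturate(lightClipPos.w / lightFarPlane);
}
```

In the pixel shader this would be used as `float calculatedLightDepth = LinearLightDepth(input.Pos2DAsSeenByLight);`. Either way the result is in [0, 1], so the visualization divisors (10, 75, 500, 2000) would need rescaling to that range before the colors mean anything.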