Awesome thank you. I purchased them!

You don’t have to call Apply unless you add a vertex shader. You don’t have to set the first texture either. You can redo it like so.

To paste code, just make sure there are a couple of empty lines above and below the code you paste.

Drop it in and highlight all of it.

Then hit the preformatted text button.

    //
    // refract
    //
    #if OPENGL
        #define SV_POSITION POSITION
        #define VS_SHADERMODEL vs_3_0
        #define PS_SHADERMODEL ps_3_0
    #else
        #define VS_SHADERMODEL vs_4_0_level_9_1
        #define PS_SHADERMODEL ps_4_0_level_9_1
    #endif

    // This vector should be in motion in order to achieve the desired effect.
    float2 DisplacementMotionVector;

    // displacement amount
    float DisplacementIntensity;

    Texture2D Texture : register(t0);
    sampler TextureSampler : register(s0)
    {
        Texture = (Texture);
    };

    Texture2D DisplacementTexture;
    sampler2D DisplacementSampler = sampler_state
    {
        AddressU = wrap;
        AddressV = wrap;
        Texture = <DisplacementTexture>;
    };

    float4 PsRefraction(float4 position : SV_Position, float4 color : COLOR0, float2 texCoord : TEXCOORD0) : COLOR0
    {
        // Look up the displacement amount (only the first two channels are used).
        float2 displacement = tex2D(DisplacementSampler, DisplacementMotionVector + texCoord * DisplacementIntensity).xy * 0.2 - 0.15;

        // Offset the main texture coordinates.
        texCoord += displacement;

        // Look up into the main texture.
        return tex2D(TextureSampler, texCoord) * color;
    }

    technique Refraction
    {
        pass Pass0
        {
            PixelShader = compile PS_SHADERMODEL PsRefraction(); // ps_2_0 doesn't error either.
        }
    }
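As a rough aside on the `* 0.2 - 0.15` in PsRefraction: each channel sampled from the displacement texture is in the 0 to 1 range, so the offset added to texCoord ends up between -0.15 and 0.05. Here is a minimal C# sketch of the same arithmetic on the CPU. The class and method names are made up for illustration and are not part of the shader or of MonoGame.

```csharp
using System;

// Hypothetical helper that mirrors the shader arithmetic on the CPU.
public static class DisplacementRange
{
    // Same expression as in PsRefraction: sample * 0.2 - 0.15.
    // 'channel' is one color channel of the displacement texel, in [0, 1].
    public static float ToOffset(float channel)
    {
        return channel * 0.2f - 0.15f;
    }

    // Applies the offset to one texture coordinate component.
    public static float Displace(float texCoord, float channel)
    {
        return texCoord + ToOffset(channel);
    }
}
```

So a displacement channel of about 0.75 leaves the coordinate where it was, a channel of 0 shifts it by -0.15, and a channel of 1 shifts it by about 0.05.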

The Game1 class:

    using Microsoft.Xna.Framework;
    using Microsoft.Xna.Framework.Graphics;
    using Microsoft.Xna.Framework.Input;
    using System;

    namespace RefractionShader
    {
        /// <summary>
        /// This is the main type for your game.
        /// </summary>
        public class Game1 : Game
        {
            GraphicsDeviceManager graphics;
            SpriteBatch spriteBatch;
            Effect refractionEffect;
            Texture2D tex2dForground;
            Texture2D tex2dRefractionTexture;
            Texture2D tex2dEgyptianDesert;
            Texture2D tex2dpgfkp;

            public Game1()
            {
                graphics = new GraphicsDeviceManager(this);
                graphics.PreferredBackBufferWidth = 1200;
                graphics.PreferredBackBufferHeight = 700;
                Window.AllowUserResizing = true;
                Content.RootDirectory = "Content";
                IsMouseVisible = true;
            }

            protected override void Initialize()
            {
                base.Initialize();
            }

            protected override void LoadContent()
            {
                spriteBatch = new SpriteBatch(GraphicsDevice);
                tex2dEgyptianDesert = Content.Load<Texture2D>("EgyptianDesert");
                tex2dpgfkp = Content.Load<Texture2D>("pgfkp");
                tex2dForground = tex2dEgyptianDesert;
                tex2dRefractionTexture = tex2dpgfkp;
                refractionEffect = Content.Load<Effect>("RefractShader");
            }

            protected override void UnloadContent()
            {
            }

            protected override void Update(GameTime gameTime)
            {
                if (GamePad.GetState(PlayerIndex.One).Buttons.Back == ButtonState.Pressed || Keyboard.GetState().IsKeyDown(Keys.Escape))
                    Exit();

                base.Update(gameTime);
            }

            protected override void Draw(GameTime gameTime)
            {
                GraphicsDevice.Clear(Color.CornflowerBlue);

                // Draw the two source textures unmodified, for reference.
                spriteBatch.Begin();
                spriteBatch.Draw(tex2dForground, new Rectangle(0, 0, 300, 150), Color.White);
                spriteBatch.Draw(tex2dRefractionTexture, new Rectangle(0, 150, 300, 150), Color.White);
                spriteBatch.End();

                // similar to the original shown.
                Draw2dRefracted(tex2dForground, tex2dRefractionTexture, new Rectangle(300, 0, 300, 150), .05f, (1f / 3f), gameTime);

                // this one has wind instead.
                Draw2dRefractedDirectionally(tex2dForground, tex2dRefractionTexture, new Rectangle(600, 0, 300, 150), .05f, (1f / 3f), new Vector2(10, 1), gameTime);

                base.Draw(gameTime);
            }

            /// <summary>
            /// Draw a refracted texture using the refraction effect.
            /// </summary>
            /// <param name="texture">the texture to draw that will be affected</param>
            /// <param name="refractionTexture">the texture to use for the refraction effect</param>
            /// <param name="screenRectangle">the area to draw to on the screen</param>
            /// <param name="refractionSpeed">the speed at which the refraction occurs</param>
            /// <param name="displacementIntensity">how drastic the refraction displacement is; 1/3 was the default</param>
            public void Draw2dRefracted(Texture2D texture, Texture2D refractionTexture, Rectangle screenRectangle, float refractionSpeed, float displacementIntensity, GameTime gameTime)
            {
                double time = gameTime.TotalGameTime.TotalSeconds * refractionSpeed;
                var displacement = new Vector2((float)Math.Cos(time), (float)Math.Sin(time));

                // Set an effect parameter to make the displacement texture scroll in a giant circle.
                refractionEffect.CurrentTechnique = refractionEffect.Techniques["Refraction"];
                refractionEffect.Parameters["DisplacementMotionVector"].SetValue(displacement);
                refractionEffect.Parameters["DisplacementIntensity"].SetValue(displacementIntensity);
                refractionEffect.Parameters["DisplacementTexture"].SetValue(refractionTexture);

                spriteBatch.Begin(SpriteSortMode.Deferred, null, null, null, null, refractionEffect);
                spriteBatch.Draw(texture, screenRectangle, Color.White);
                spriteBatch.End();
            }

            /// <summary>
            /// Draw a refracted texture using the refraction directional effect.
            /// </summary>
            /// <param name="wind">the directionality of the refraction</param>
            public void Draw2dRefractedDirectionally(Texture2D texture, Texture2D refractionTexture, Rectangle screenRectangle, float refractionSpeed, float displacementIntensity, Vector2 wind, GameTime gameTime)
            {
                wind = Vector2.Normalize(wind) * -(float)(gameTime.TotalGameTime.TotalSeconds * refractionSpeed);
                var displacement = new Vector2(wind.X, wind.Y); // + screenRectangle.Location.ToVector2();

                // Set an effect parameter to make the displacement texture scroll along the wind direction.
                refractionEffect.CurrentTechnique = refractionEffect.Techniques["Refraction"];
                refractionEffect.Parameters["DisplacementMotionVector"].SetValue(displacement);
                refractionEffect.Parameters["DisplacementIntensity"].SetValue(displacementIntensity);
                refractionEffect.Parameters["DisplacementTexture"].SetValue(refractionTexture);

                spriteBatch.Begin(SpriteSortMode.Deferred, null, null, null, null, refractionEffect);
                spriteBatch.Draw(texture, screenRectangle, Color.White);
                spriteBatch.End();
            }
        }
    }
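The two draw methods differ only in how they build DisplacementMotionVector. Below is a small standalone sketch of just that math. It assumes System.Numerics.Vector2 as a stand-in for Microsoft.Xna.Framework.Vector2 so it can run without MonoGame, and the class and method names are hypothetical.

```csharp
using System;
using System.Numerics; // assumption: stand-in for Microsoft.Xna.Framework.Vector2

// Hypothetical helper isolating the motion-vector math of the two draw methods.
public static class DisplacementMotion
{
    // Circular scroll: the vector travels around the unit circle over time.
    public static Vector2 Circular(double totalSeconds, float refractionSpeed)
    {
        double time = totalSeconds * refractionSpeed;
        return new Vector2((float)Math.Cos(time), (float)Math.Sin(time));
    }

    // Directional scroll: the vector keeps moving against the wind direction,
    // so the displacement texture appears to drift with the wind.
    public static Vector2 Wind(Vector2 wind, double totalSeconds, float refractionSpeed)
    {
        return Vector2.Normalize(wind) * -(float)(totalSeconds * refractionSpeed);
    }
}
```

The circular vector always has length 1, while the wind vector keeps growing with time. That should be fine here because the displacement sampler uses wrap addressing, so coordinates outside 0 to 1 simply repeat the texture.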

Actually, if you set the other values (the displacement intensity and so on) one time in LoadContent, all you have to set per draw is the motion vector. Then call spriteBatch.Draw.

    // The other stuff can be set on the effect after you have loaded it in LoadContent, including the refraction texture.
    public void Draw2dRefracted(Texture2D texture, Texture2D refractionTexture, Rectangle screenRectangle, float refractionSpeed, float displacementIntensity, GameTime gameTime)
    {
        double time = gameTime.TotalGameTime.TotalSeconds * refractionSpeed;
        var displacement = new Vector2((float)Math.Cos(time), (float)Math.Sin(time));

        // might still want to set this if you have other techniques in the shader.
        // refractionEffect.CurrentTechnique = refractionEffect.Techniques["Refraction"];

        // then this is all that you need to set.
        refractionEffect.Parameters["DisplacementMotionVector"].SetValue(displacement);

        spriteBatch.Begin(SpriteSortMode.Deferred, null, null, null, null, refractionEffect);
        spriteBatch.Draw(texture, screenRectangle, Color.White); // and call it.
        spriteBatch.End();
    }

Sorry I am a bit confused. Which Apply call are you referring to?

He is saying you can pass the effect to the sprite batch begin call, rather than call the apply on it.

How would that work with multi pass shaders, or if you wanted to use different techniques within the shader?

Would you set them prior to the begin call?

Does the begin run through all the passes in the selected technique?

Forgive my ignorance

Does the begin run through all the passes in the selected technique?

I’m not sure on that one. I would think it loops over them all by default, but I’m not positive.

You would have to look into the base.Draw call to see if it loops over the passes or if it just calls pass(0).

Edit: whoops, I accidentally messed up this post. Yes, it does run through all the passes if you pass the effect to Begin.

So you could do something like this.

    technique TwoPassForSpriteBatch
    {
        pass Pass0
        {
            PixelShader = compile PS_SHADERMODEL PsRefractionSnells();
        }
        pass Pass1
        {
            PixelShader = compile PS_SHADERMODEL PsDisplacement();
        }
    }

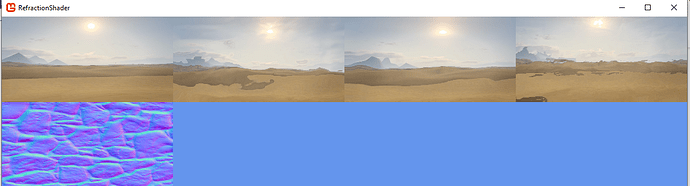

Yep, just tried it and it works. The one on the right is drawn in red with the displacement texture coordinates flipped, then drawn on top of the other, colored red at half alpha. It is the same code as the others, with just the above added to the shader and the two-pass technique selected in the Game1 method.

How would that work with multi pass shaders, or if you wanted to use different techniques within the shader?

Would you set them prior to the begin call?

New techniques between Begin and End? Typically I’d say no. Maybe if you’re in Immediate mode you could do it, but it would be hacky. I’d say do a new Begin/End.

You’d probably have to get someone who knows a bit more about that to chime in, or dig through the code.

Ahh cool, that’s interesting

Thanks for that

Which line is calling the Apply method? Sorry I must be overlooking something

Earlier I was referring to the image of the code he posted, shown on line 97. When you pass the effect into SpriteBatch instead, you don’t need to call Apply. It’s not a big deal though, and you sort of have to do it that way if you want to override the vertex shader and still use SpriteBatch.

Could someone explain to me why this line is needed?

    // Comment this line out to break the shader!!!
    float4 v = tex2D(TextureSampler, input.TexCoord);

I am trying to learn HLSL. Does this mean that if I need to perform any calculation in the shader, I need to examine the TextureSampler first?

It’s OK to do any calculations before sampling from the first texture. However, if you sample from another texture (Displacement) before the default one, it seems to sometimes mess up the MojoShader shader-byte-code converter. Hard to say why, but it certainly looks like a bug (it could be an optimization bug, as you said). It seems to expect a sample taken from the default texture first, so this odd behavior probably doesn’t happen often.

Btw - here are some tips for anyone who may find them useful:

You can also get away with just doing this (without the Texture2D, and for the first one you don’t need to specify anything if you don’t want to):

    sampler TexSamp;
    sampler DisplaceSamp { Texture = (Displacement); };

And then use those, i.e.:

    tex2D(TexSamp, input.texCoord)

Personally I would pass the shader in Begin and use Deferred, since then everything is done at once in the End() call, which is supposed to be a bit more optimal. Any values that don’t change (like other textures) can be set early on and don’t need to be resubmitted during Update or Draw.

then it seems to sometimes mess up the Mojoshader shader-byte-code converter. Hard to say why

Yep, on DX you should probably do something like this just to make sure. It seems to force them into the right order. Texture is the one that SpriteBatch sets itself, so setting it here is kind of wasteful.

    effect.CurrentTechnique = effect.Techniques[technique];
    effect.Parameters["Texture"].SetValue(texture);
    effect.Parameters["DisplacementTexture"].SetValue(displacementTexture);

This line gets the texture’s pixel, or texel, at a position on the texture.

That is, it takes a color sample from the specific texture the sampler is linked to. The call grabs a texel from a 2D texture at the specified texture coordinates (TexCoord). Put simply, it gets a pixel from the image you sent in that the sampler represents.

Texture coordinates are often called uv, which distinguishes them from xy, the position on the screen where your pixel will be drawn. Likewise, texels refer to source colors, whereas pixels refer to where a color is drawn.

The coordinates are normalized by the texture’s size: one texel is 1f / texture.Width wide in u, so u always ranges from 0 to 1 for all textures (same for v and the height). The GPU can then use u * width to find the exact location on a texture of any size in a uniform mathematical way. So that’s the what, where and why.
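To make the u * width relationship concrete, here is a small C# sketch of the conversion in both directions. It is only an illustration of the arithmetic, not what the GPU literally runs. The class and method names are made up, and sampling at the texel centre (index + 0.5) is an assumption about the convention, as is clamping out-of-range coordinates instead of wrapping them.

```csharp
using System;

// Hypothetical helper illustrating normalized texture coordinates.
public static class TexCoords
{
    // Width (or height) of one texel in normalized units: the reciprocal of the size.
    public static float TexelSize(int dimension)
    {
        return 1f / dimension;
    }

    // Normalized coordinate of the centre of the texel at 'index'.
    public static float ToNormalized(int index, int dimension)
    {
        return (index + 0.5f) / dimension;
    }

    // Texel index addressed by a normalized coordinate, clamped to the texture.
    public static int ToTexel(float uv, int dimension)
    {
        int index = (int)(uv * dimension);
        return Math.Max(0, Math.Min(index, dimension - 1));
    }
}
```

With this, u = 0.5 lands on texel 128 of a 256-wide texture and on texel 150 of a 300-wide one, which is why the same shader code works for textures of any size.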

The pixel shader is mostly concerned with source texel manipulations.

The vertex shader with destination vertex position manipulations.

Doing it either way seems to work correctly if the v = tex2D line occurs somewhere before the second texture is sampled… I can remove the v = … line if I use effect.Parameters["Texture"].SetValue(texture) and it will not be black. However, the results look wrong, as though it isn’t sampling from the same part of the video RAM, unless the v = line is added back in. It’s very weird…

Yea, it’s not a very nice bug is it

At least we have a workaround though

Oh, I didn’t realize you were talking about his shader there.

That’s because the displacement is set first here.

Then the Draw call sets the texture. So at first the displacement texture gets assigned to t0/s0 in your pixel shader. Then it has to be moved over when the SpriteBatch vertex shader is invoked by the Draw call that sets its texture, or some such voodoo, because SpriteBatch sets its texture directly to t0/s0 rather than through a named parameter.

    spriteBatch.Begin(SpriteSortMode.Immediate, BlendState.Opaque);
    effect.CurrentTechnique.Passes[0].Apply();
    effect.Parameters["DisplacementScroll"].SetValue(DisplacementScroll += scrollVelocity);
    effect.Parameters["Displacement"].SetValue(Content.Load<Texture2D>("Textures/bumpmap"));
    spriteBatch.Draw(Content.Load<Texture2D>("Textures/xnauguk"), new Rectangle(s*2, 0, s, s), Color.White);
    spriteBatch.End();

If you look at my shader above, I’m sampling the displacement texture first. That works because I did this, but that’s on GL. For DX you also need to set the effect’s Texture parameter before the displacement texture in Game1. Then you can call tex2D() in any order in the shader.

So, for example:

    Texture2D Texture : register(t0);
    sampler TextureSampler : register(s0)
    {
        Texture = (Texture);
    };

    Texture2D DisplacementTexture;
    sampler2D DisplacementSampler = sampler_state
    {
        AddressU = wrap;
        AddressV = wrap;
        Texture = <DisplacementTexture>;
    };

    effect.CurrentTechnique = effect.Techniques[technique];
    effect.Parameters["Texture"].SetValue(texture);
    effect.Parameters["DisplacementTexture"].SetValue(displacementTexture);

    spriteBatch.Begin(SpriteSortMode.Deferred, null, null, null, null, effect);
    spriteBatch.Draw(texture, screenRectangle, Color.White);
    spriteBatch.End();

Then the shader falls in line. It’s a pain, but it’s not too big of a deal.

    //float4 PsHardOutline(float4 position : SV_Position, float4 color : COLOR0, float2 texCoord : TEXCOORD0) : COLOR0
    //{
    //    float4 col = FuncGetDisplacementMapColor(texCoord) * color;
    //    col = FuncCellShadeMimic3dBlueCel(col); // could use this one instead its more solid
    //    float ce = FuncGetDisplacementTextureSizeCoef() * .5f;
    //    float4 colTL = FuncCellShadeMimic3dBlueCel(FuncGetDisplacementMapColor(float2(texCoord.x - ce, texCoord.y + ce).xy) * color);
    //    float4 colBR = FuncCellShadeMimic3dBlueCel(FuncGetDisplacementMapColor(float2(texCoord.x + ce, texCoord.y - ce).xy) * color);
    //    float4 colTR = FuncCellShadeMimic3dBlueCel(FuncGetDisplacementMapColor(float2(texCoord.x + ce, texCoord.y + ce).xy) * color);
    //    float4 colBL = FuncCellShadeMimic3dBlueCel(FuncGetDisplacementMapColor(float2(texCoord.x - ce, texCoord.y - ce).xy) * color);
    //    col = FunctionOutlineBiasDiffHardEdgesHardBlended(col, colTL, colBR, colTR, colBL, Amplification, Attenuation, float4(1.0f, 1.0f, 1.0f, 1.0f));
    //    return col;
    //}

Yes, that’s it!

Based on that (under dx) - I made it work perfectly by ensuring use of the 0 registers for the first texture:

    Texture2D tex : register(t0);
    sampler TexSamp : register(s0) { Texture = (tex); };
    sampler DisplaceSamp { Texture = (Displacement); };

Ahh cool

I have noticed you don’t have to do any of that if rendering using a vertex shader. This seems to be an issue when rendering to SpriteBatch.

I know this is old, but I’m trying to make a simple clip mask shader that works on both OpenGL and Windows .NET 6 console apps, and I’m going insane. The DirectX one works. Both now compile once I comment out Target, install .NET Core 3.1, share the content by link, and put in an ifdef for SM 3 if OpenGL, else shader model 4…, but the OpenGL one doesn’t even sample a single texture I set. Does anyone have a link to samples of simple, barebones sampling pixel shaders that work on the newest MonoGame with .NET 6 on desktop PC and Windows? I’m assuming Android will work if desktop GL works… I got the clip to finally work after 7 days, ARGGHH… It’s a 5-line shader. I’m looking at Nez, but its shader is at SM 2, so it hasn’t been used in a while…

OK. I couldn’t find one, so I made a basic .NET 6 template with a shared core and shared content that builds and runs on everything… and I will upload it to my public git… The shader does sample under DX, GL and maybe Android, so it’s something in my setup or render target usage. This should help a lot of beginners save a week or more, because there aren’t any up-to-date, simple, modern build setups out there that show how to use one codebase (.NET Standard core and .NET 6, no repeated code, one fx, content builder, refs to a shared content file) and that show a simple custom pixel shader in action, and that is the whole point of MonoGame. The samples we find are outdated or overly complex.

Just wondering if you guys are still doing any 2D shaders or compute that work on desktop GL, Android, iOS and Windows DX via MonoGame… I took the old sample and updated my last one to MG 3.8.1 and .NET 7. There is interest in trying to work with AdMob or a shared iOS/Android/MAUI-based (maybe) ad host. You are welcome to work on my branch if still interested… I made a compute branch, but even my old simple clip shader broke… but it looks more promising than the old way moving forward…

So I’m trying to break out the general stuff everyone would need, and see if anyone wants to share basic shaders like clip and ray casts and the like. It’s organized a bit like the .NET Core 3.1 / .NET Standard 2 one, but it’s on .NET 7 now, and it allows us to touch one fx file and just build and run on whatever. No shared folders, conflicts, or bloat, and good architecture. I’m not building a game engine, but one could easily add physics engines, imaging DLLs and such… as core .NET 6 DLLs… If doing graphics like to MG GL, I think the “bait and switch” still works: it builds content for each EXE and puts it in different folders…

Monogame With Xamarin or Maui (IOS /Android) - #25 by Damian_Eaglestein… I might add AvaloniaUI, Avalonia and WPF level-editor-type samples to it as well.

Sorry, it’s a little bit of a crazy mess, but it would be nice to have some more eyes on this. It’s just the old MG .NET Core 3 -> .NET Standard core, up to .NET 6 or 7 -> .NET 6… You should be able to just clone, build and run, at least on Android and desktop DX or GL, on VS 2022 Preview and Windows…
