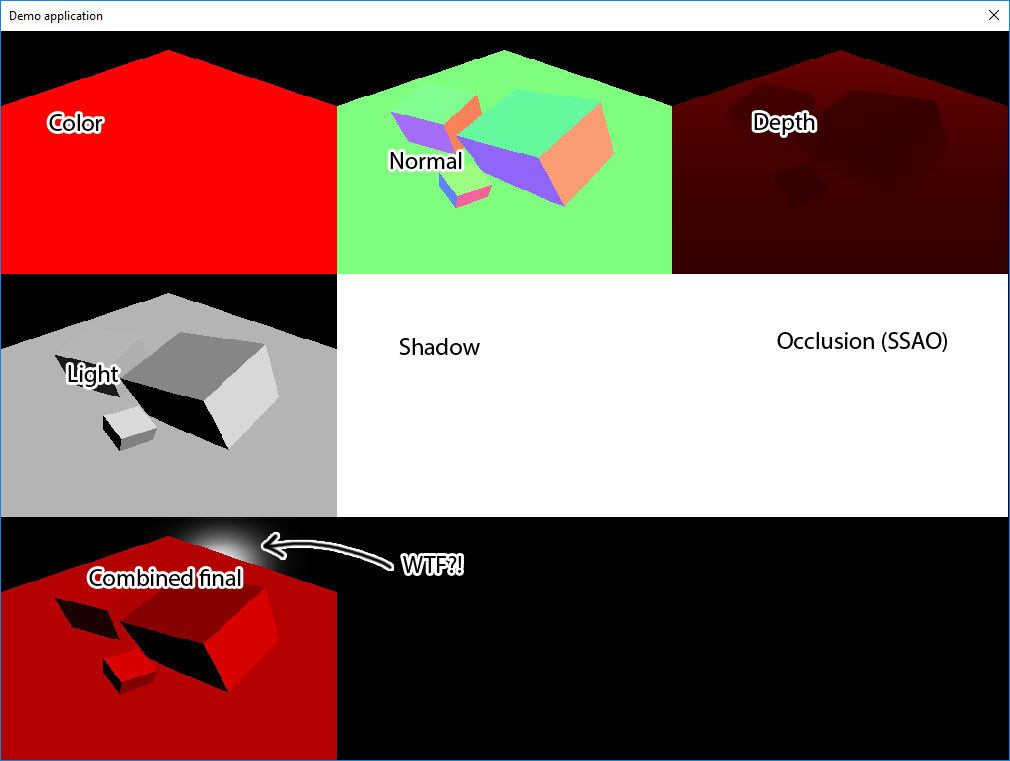

When I get a bug that starts taking too long to fix,

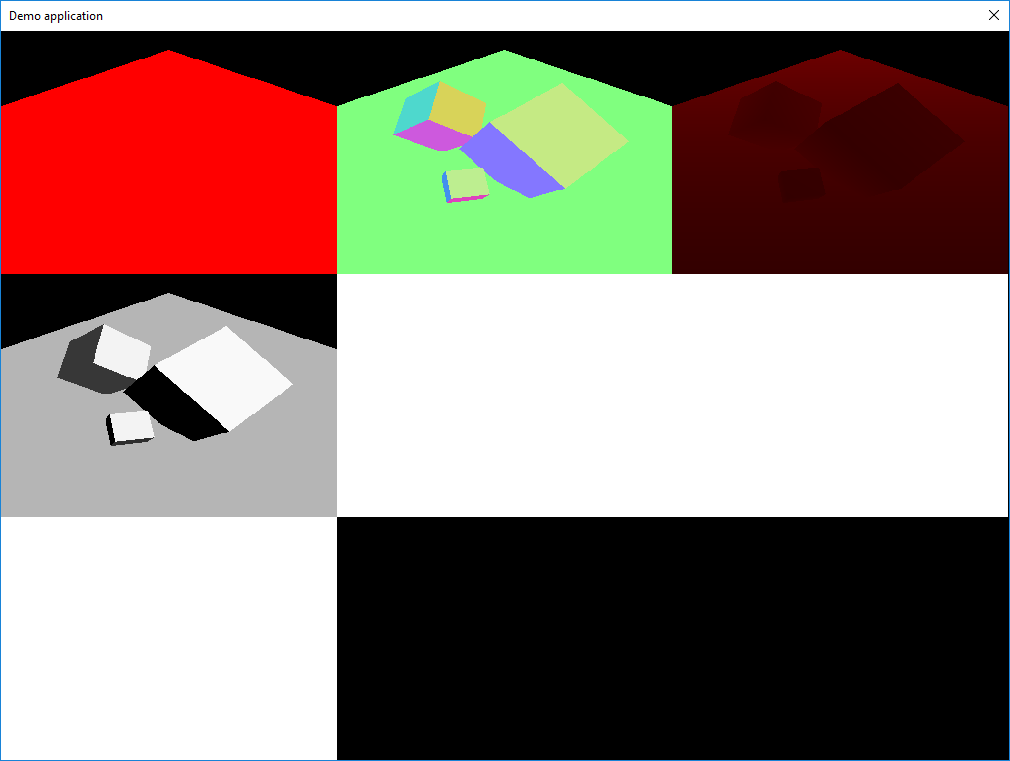

I start by double-checking the names of everything first, so things are very clear.

Then start simplifying.

Break down big lines of code into smaller lines, and break calculations down into separately named variables.

But yeah, this looks like it's way, way overcomplicating things.

The more I looked at it, the more it looked like sort of a mess.

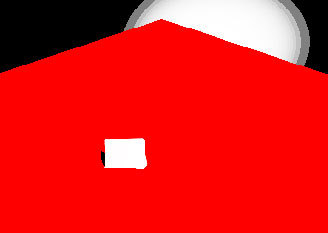

This is real suspicious to me.

Why would anyone want to do this sort of thing?

float3 normal = 2.0f * normalData.xyz - 1.0f;

Then here:

float3 lightVector = -(lightDirection);

This is now the inverse light direction, and lightVector is a bad name for it. Names like this are bug magnets.

In the next line there is:

NDL ? = max(0, dot( normal, lightvector));

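NdL (as in non-directional light)? More likely it is short for N dot L, the normal dotted with the light vector, but the name doesn't make that obvious, and what good would a non-directional light be anyway?

What happens when the light points in a negative direction here? With max(0, ...) the result gets clamped, even though a dot product can validly return a negative cosine.

If it really were meant to be non-directional, I would think you would want to flip it to be positive, not zero it out. I don't get it.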
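To make the clamp versus flip difference concrete, here is a quick CPU-side check in Python. It is only a sketch with made-up vectors (a surface facing up and a light coming from below), not the shader itself:

```python
def dot(a, b):
    # Plain 3-component dot product.
    return sum(x * y for x, y in zip(a, b))

# Hypothetical test values, chosen so the light is behind the surface.
normal = (0.0, 1.0, 0.0)             # surface facing straight up
light_direction = (0.0, 1.0, 0.0)    # light travelling upward, i.e. from underneath
light_vector = tuple(-c for c in light_direction)  # from the surface toward the light

cosine = dot(normal, light_vector)   # negative: the light is behind the surface
clamped = max(0.0, cosine)           # what max(0, dot(...)) does: no light at all
flipped = abs(cosine)                # the "flip it positive" alternative

print(cosine, clamped, flipped)
```

With max the back side simply gets no light; with abs it gets lit as if the light were in front, which is only what you want for two-sided lighting.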

OK, then there is that weird normal, which after the 2 * n - 1 remap is not guaranteed to be unit length unless it gets normalized again.

Who knows what that's going to output. The dot product could evaluate to -1, in which case max clamps it to zero and the diffuse light ends up 0.

With this normal data run through (2 * n - 1), an element stored at .5 maps to 0 and anything below .5 goes negative, so the n * inverse light term can easily come out zero or less and get clamped. I haven't worked out at exactly what angle that starts happening.
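As far as I can tell, 2 * n - 1 is the common way to unpack a normal that was stored in a 0 to 1 texture back into the -1 to 1 range, so the remap itself may be fine. The thing to check is the length afterwards. A small Python sketch, where the stored texel values are invented for illustration:

```python
import math

def decode_normal(stored):
    # Map each channel from the 0..1 texture range back to -1..1.
    return tuple(2.0 * c - 1.0 for c in stored)

def length(v):
    return math.sqrt(sum(c * c for c in v))

def normalize(v):
    l = length(v)
    return tuple(c / l for c in v)

# Hypothetical stored texels (not taken from the original shader).
up = decode_normal((0.5, 0.5, 1.0))         # .5 maps to 0, 1 maps to 1
tilted = decode_normal((0.75, 0.5, 0.75))   # decodes to a vector shorter than 1
fixed = normalize(tilted)                   # renormalized before use

print(up, length(tilted), length(fixed))
```

If the decoded normal is shorter than unit length, every dot product against it comes out too small, so normalizing right after the decode is a cheap thing to try.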

In the next line,

float3 reflectionVector = normalize(reflect(-lightVector, normal));

is the same as

float3 reflectionVector = normalize(reflect(lightDirection, normal));
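To double check that the two forms really are identical, here is a Python sketch. The reflect function below is my own stand-in using the formula i - 2 * dot(i, n) * n, and the vectors are made up:

```python
def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def negate(v):
    return tuple(-c for c in v)

def reflect(i, n):
    # Stand-in for the shader intrinsic: i - 2 * dot(i, n) * n
    d = dot(i, n)
    return tuple(ic - 2.0 * d * nc for ic, nc in zip(i, n))

# Hypothetical inputs: light heading down and to the right onto a floor.
light_direction = (0.6, -0.8, 0.0)
light_vector = negate(light_direction)
normal = (0.0, 1.0, 0.0)

via_light_vector = reflect(negate(light_vector), normal)  # reflect(-lightVector, normal)
via_direction = reflect(light_direction, normal)          # reflect(lightDirection, normal)

print(via_light_vector, via_direction)
```

Negating twice just gets you back to lightDirection, so the extra lightVector variable only adds a place for a sign mistake to hide.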

pow(x, y) returns the x parameter raised to the power of the y parameter.

So this says: start with the reflection vector (unit length, because it has been normalized), dot it with the direction to the camera, clamp that to the 0 to 1 range with saturate, raise it to specularPower, and multiply by specularIntensity, which looks like it comes from the alpha of the texture. Is that the specular light float?

float specularLight = specularIntensity * pow(saturate(dot(reflectionVector, directionToCamera)), specularPower);
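This is the kind of line I would split into named steps so each intermediate value can be inspected. A Python sketch of the same arithmetic, with invented input values (the snake_case names mirror the shader variables, and saturate is my own helper):

```python
def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def saturate(x):
    # Clamp to the 0..1 range, like the shader intrinsic of the same name.
    return min(1.0, max(0.0, x))

# Hypothetical inputs for illustration only.
reflection_vector = (0.6, 0.8, 0.0)
direction_to_camera = (0.0, 1.0, 0.0)
specular_intensity = 0.5
specular_power = 4.0

# One step per line, each with a name you can print or watch.
alignment = dot(reflection_vector, direction_to_camera)  # cosine between the two
clamped_alignment = saturate(alignment)                  # drop negative cosines
highlight = pow(clamped_alignment, specular_power)       # tighten the highlight
specular_light = specular_intensity * highlight          # scale by intensity

# The original one-liner, for comparison.
one_liner = specular_intensity * pow(
    saturate(dot(reflection_vector, direction_to_camera)), specular_power)

print(alignment, highlight, specular_light)
```

Both versions give the same number, but in the split version a wrong sign or a non-normalized vector shows up at the exact step where it happens.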

.

.

OK, I'm going to stop right here.

To find the magnitude of a light for use in brightening the color of a texel, you only need to dot the vertex normal with the negated light direction (strictly speaking that is the diffuse term; a specular term also needs the view direction).

Provided both are in fact normalized, you get a cosine in the range -1 to 1.

-1 means the light is behind the triangle and shouldn’t be lighting it up,

(think of where the sun is at night)

so you clamp it to zero with saturate.

+1 means the light is shining directly on it from straight above, or hitting a flat surface head-on.

(think high noon)

0 means it's directly to the side of the surface (think of early sunrise on an ocean).
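The three sun positions can be run as numbers. This Python sketch assumes flat ground with the normal pointing straight up and builds the direction to the sun from an elevation angle; the function name is my own:

```python
import math

def saturate(x):
    return min(1.0, max(0.0, x))

def light_amount(sun_elevation_degrees):
    # Flat ground, normal straight up (an assumption for this example).
    normal = (0.0, 1.0, 0.0)
    e = math.radians(sun_elevation_degrees)
    to_sun = (math.cos(e), math.sin(e), 0.0)   # unit vector toward the sun
    cosine = sum(n * s for n, s in zip(normal, to_sun))
    return cosine, saturate(cosine)

high_noon = light_amount(90.0)    # sun straight overhead
sunrise = light_amount(0.0)       # sun on the horizon
midnight = light_amount(-90.0)    # sun on the far side of the planet

for name, (cosine, lit) in (("noon", high_noon), ("sunrise", sunrise), ("midnight", midnight)):
    print(name, round(cosine, 3), round(lit, 3))
```

Only the midnight case is changed by saturate; the other two pass through untouched.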

This is multiplied against the color's RGB, not its A.

Lights don't normally change the transparency of things, which is what alpha represents.

That is to say, glass will still be transparent no matter how much light is on it, even when it's reflecting blinding white light.
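In code terms that just means scaling three channels and passing the fourth through. A tiny Python sketch, with a made-up color and a hypothetical apply_light helper:

```python
def apply_light(color_rgba, light_amount):
    # Scale only the color channels; alpha (transparency) is left alone.
    r, g, b, a = color_rgba
    return (r * light_amount, g * light_amount, b * light_amount, a)

glass = (0.2, 0.4, 0.6, 0.25)      # hypothetical mostly transparent texel
lit_glass = apply_light(glass, 0.5)

print(lit_glass)
```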

You could flip the negative to positive if you had to, just by doing if (result < 0) result = -result; though I don't see the point of doing that for the NdL value here, unless there is some sort of shadow thing going on.

That's if you want the light to have a linear feel: less like a specular spotlight, a bit more spread out.

If you multiply the result by itself before you multiply the color, to intensify it, you get a squared cosine value with a tighter falloff that evaluates to .5 at 45 degrees of incidence to the surface instead of .707.
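The .707 versus .5 figures are easy to verify. A short Python sketch comparing the plain cosine with the squared one at a few angles of incidence (the helper name is my own):

```python
import math

def falloff(angle_degrees):
    # Returns (plain cosine, cosine multiplied by itself) for one angle.
    cosine = math.cos(math.radians(angle_degrees))
    return cosine, cosine * cosine

at_45 = falloff(45.0)

for angle in (0.0, 30.0, 45.0, 60.0):
    plain, squared = falloff(angle)
    print(angle, round(plain, 3), round(squared, 3))
```

Both versions agree at 0 degrees, and the squared one drops off faster as the angle grows.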

You should go through each line, rename everything, and break it down into even smaller pieces, so you can see and know exactly what each thing is intended to do.