It’s also possible without that condition, just not as easily.

Actually, it’s the same either way, just with more complicated looping and storage when each triangle is drawn separately.

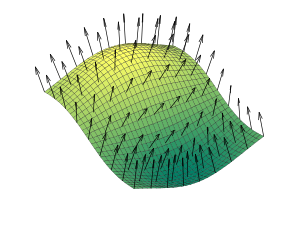

What I’m saying is that to generate the normals, you need the white squares shown in my picture to be “something”, and you need them specifically. Whatever you base them on, those are in principle your base values for a basis function that generates smooth, contiguous vertex normals, shared or not shared, for proper lighting.

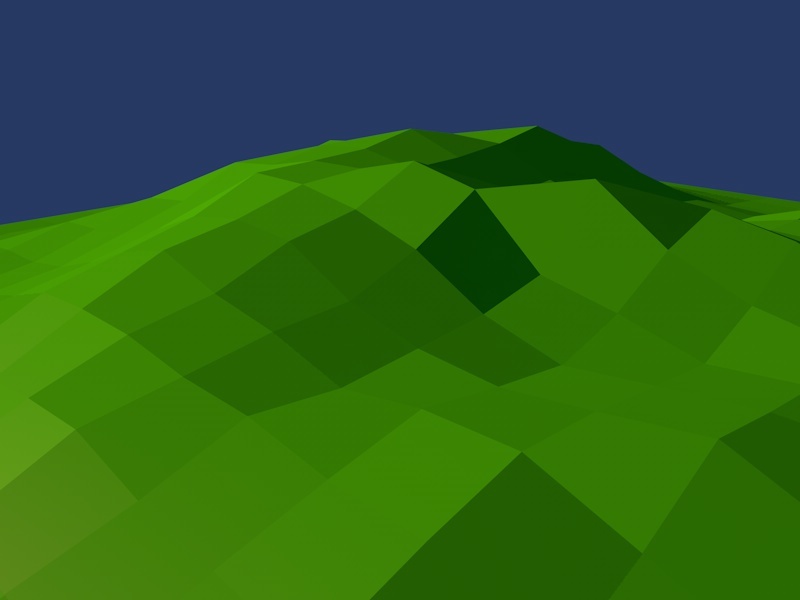

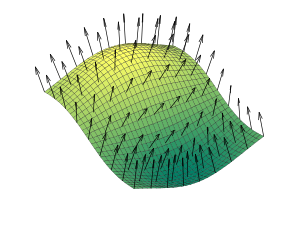

This is what you have going on: your case is shown on the right.

You don’t get the left case for free.

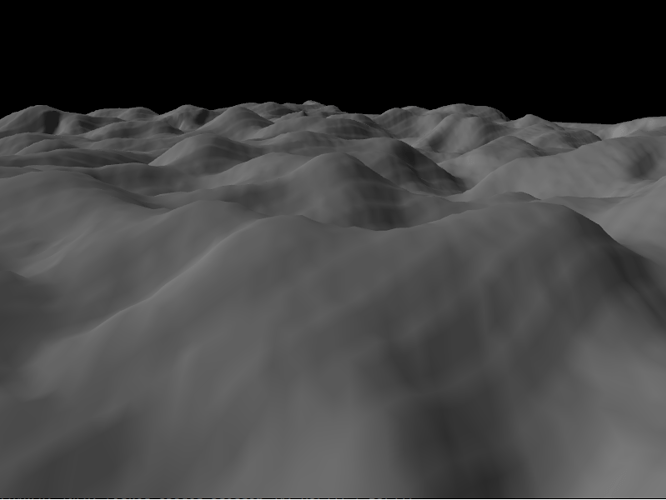

You have to create it by combining and smoothing the normals before you pass them.

You have 2 quads with two triangles each, so you have 2 sets of 4 normals and 6 vertices.

You must create the smooth adjoining normals yourself, as shown on the left.

You solve this directly per vertex: find the triangles surrounding each vertex, calculate their cross products, average them, normalize the result, and store it as that vertex’s surface normal. Be careful with winding order in that calculation, because swapping two vertices of a triangle flips the direction of its cross product.
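A minimal sketch of that per-vertex approach for an indexed triangle mesh, assuming every triangle is wound counter-clockwise when viewed from the side the normal should face. The function names are hypothetical. This version sums the unnormalized cross products, which weights each triangle by its area; normalizing each face normal before summing is an equally common choice.

```python
import math

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])

def normalize(v):
    length = math.sqrt(v[0] ** 2 + v[1] ** 2 + v[2] ** 2)
    if length == 0.0:
        return (0.0, 0.0, 0.0)
    return (v[0] / length, v[1] / length, v[2] / length)

def vertex_normals(positions, triangles):
    """Smooth per-vertex normals for an indexed triangle mesh.

    positions: list of (x, y, z); triangles: list of (i0, i1, i2) index
    triples, all using the same (assumed counter-clockwise) winding.
    """
    sums = [[0.0, 0.0, 0.0] for _ in positions]
    for i0, i1, i2 in triangles:
        p0, p1, p2 = positions[i0], positions[i1], positions[i2]
        # Unnormalized face normal: its length is twice the triangle area,
        # so bigger triangles pull the average harder.
        n = cross(sub(p1, p0), sub(p2, p0))
        for i in (i0, i1, i2):
            for k in range(3):
                sums[i][k] += n[k]
    # Average direction of all surrounding faces, rescaled to unit length.
    return [normalize(s) for s in sums]
```

For a flat quad in the XZ plane split as `[(0, 3, 1), (1, 3, 2)]`, every vertex gets `(0.0, 1.0, 0.0)`; listing the same triangles with the opposite winding flips them all to point down.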

or .

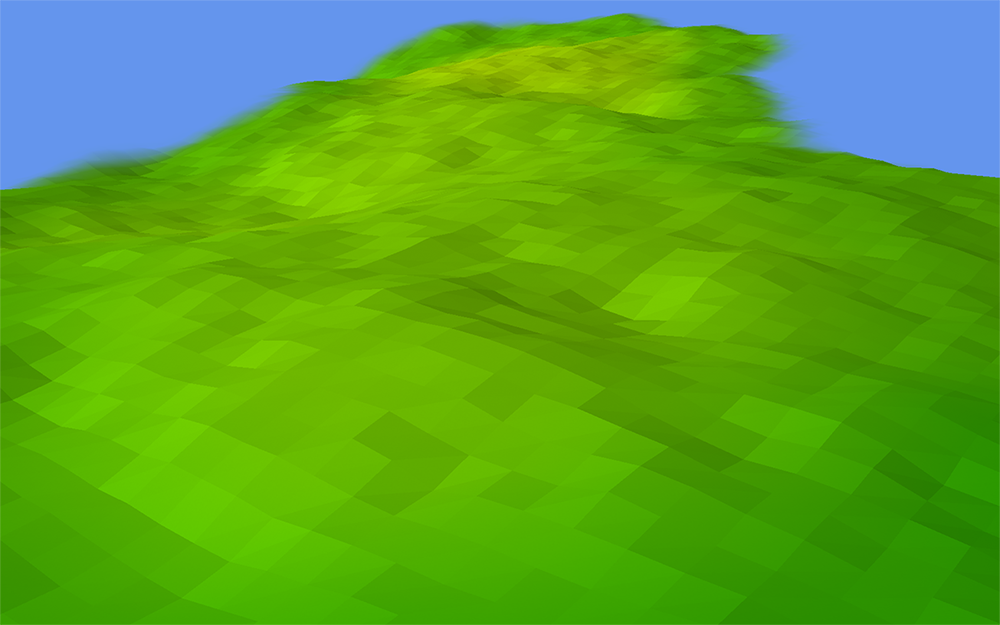

In the case of quad terrain built from a simple array (not a NURBS surface like the one in my picture), generating the lighting normals properly is still the same idea. They can be based on the total normals of the grid cells, or quads, that surround any single vertex.

To rebuild the current vertex normals and get rid of the discontinuity artifacts, you need a single normal for each whole quad, found from the normals of all of its triangles. In his specific case this can simply be computed per quad.
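A minimal sketch of the per-quad step for a height-map grid, assuming `heights[z][x]` holds the terrain height, y is up, and grid points are `spacing` apart (all hypothetical conventions). Each quad is split into two triangles wound so that a flat quad faces +y, and the quad normal is the normalized sum of the two triangle cross products.

```python
import math

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])

def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v) if length else (0.0, 0.0, 0.0)

def quad_normals(heights, spacing=1.0):
    """One unit normal per grid quad of a height map.

    Returns a (rows - 1) x (cols - 1) list; entry [z][x] belongs to the quad
    whose lower corner is grid point (x, z).
    """
    rows, cols = len(heights), len(heights[0])
    result = []
    for z in range(rows - 1):
        row = []
        for x in range(cols - 1):
            p00 = (x * spacing, heights[z][x], z * spacing)
            p10 = ((x + 1) * spacing, heights[z][x + 1], z * spacing)
            p01 = (x * spacing, heights[z + 1][x], (z + 1) * spacing)
            p11 = ((x + 1) * spacing, heights[z + 1][x + 1], (z + 1) * spacing)
            # The quad's two triangles (p00, p01, p10) and (p10, p01, p11).
            n1 = cross(sub(p01, p00), sub(p10, p00))
            n2 = cross(sub(p01, p10), sub(p11, p10))
            row.append(normalize(tuple(a + b for a, b in zip(n1, n2))))
        result.append(row)
    return result
```

On a flat grid every quad normal is `(0.0, 1.0, 0.0)`; on a slope rising by one unit per step in x, each quad normal tilts to roughly `(-0.707, 0.707, 0.0)`.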

Then he needs to regenerate each vertex normal by essentially averaging the quad normals he just created back onto each vertex. This requires a bit of effort, but that is the basic gist of it.

To say it another way…

Each quad needs to use all 4 of its vertices, or both of its triangles, to create a per-quad normal basis.
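For the "all 4 vertices" option, one compact sketch takes the cross product of the quad's two diagonals. For corners `p0..p3` given in winding order, `(p2 - p0) x (p3 - p1)` equals the sum of the cross products of the quad's two triangles, so it also handles non-planar quads. The function name `quad_normal` is hypothetical.

```python
import math

def quad_normal(p0, p1, p2, p3):
    """Unit normal of a quad from its four corners, given in winding order."""
    d1 = tuple(b - a for a, b in zip(p0, p2))  # diagonal p0 -> p2
    d2 = tuple(b - a for a, b in zip(p1, p3))  # diagonal p1 -> p3
    # Cross product of the diagonals: twice the quad's vector area.
    n = (d1[1] * d2[2] - d1[2] * d2[1],
         d1[2] * d2[0] - d1[0] * d2[2],
         d1[0] * d2[1] - d1[1] * d2[0])
    length = math.sqrt(sum(c * c for c in n))
    return tuple(c / length for c in n) if length else (0.0, 0.0, 0.0)
```

Using the same corner order as a y-up grid, `quad_normal((0, 0, 0), (0, 0, 1), (1, 0, 1), (1, 0, 0))` gives `(0.0, 1.0, 0.0)`.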

Each vertex then needs its four surrounding quad normals to be combined into a smooth normal.
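A minimal sketch of that regeneration step, assuming the quad normals are stored as a grid where `quad_normals[z][x]` belongs to the quad whose lower corner is vertex `(x, z)` (a hypothetical layout). Interior vertices sum their four quads; edge and corner vertices simply skip quads that fall outside the grid instead of summing them.

```python
import math

def vertex_normals_from_quads(quad_normals):
    """Smooth grid vertex normals from the (up to) 4 surrounding quad normals."""
    q_rows, q_cols = len(quad_normals), len(quad_normals[0])
    rows, cols = q_rows + 1, q_cols + 1  # one more vertex than quads per axis
    result = []
    for z in range(rows):
        row = []
        for x in range(cols):
            total = [0.0, 0.0, 0.0]
            # The quads touching vertex (x, z) have lower corners at
            # (x-1, z-1), (x, z-1), (x-1, z) and (x, z).
            for qz in (z - 1, z):
                for qx in (x - 1, x):
                    if 0 <= qz < q_rows and 0 <= qx < q_cols:
                        for k in range(3):
                            total[k] += quad_normals[qz][qx][k]
            length = math.sqrt(sum(c * c for c in total))
            row.append(tuple(c / length for c in total) if length else (0.0, 0.0, 0.0))
        result.append(row)
    return result
```

With one flat quad next to one sloped quad, the shared vertices get a normal tilted partway between the two, which is what removes the visible seam between them.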

So basically, regenerating each vertex normal requires the influence of the 8 surrounding vertices. This can be based directly on the triangles as well. Either way, for terrain this is normally a pre-shader operation done on the vertices themselves, just once when you create the terrain, before passing them to the GPU.

However, edge cases that fall out of bounds must not be summed, or they will error out. If the surface wraps around, such as a sphere, the indices have to wrap as well.

In that case, where L is the number of vertices along that direction, an index that is L or more becomes `index = index - L`, and an index less than zero becomes `index = index + L`.
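A small sketch of that wrapping rule, plus a hypothetical helper that collects the 8 neighbors of a grid vertex. As an assumption for illustration, the grid wraps only in x (like longitude on a sphere), while rows past the top or bottom edge are skipped rather than summed.

```python
def wrap_index(index, length):
    """Wrap an index that is at most one step out of range back into [0, length)."""
    if index >= length:
        index -= length
    elif index < 0:
        index += length
    return index

def neighbors_8(x, z, cols, rows):
    """Grid neighbors of vertex (x, z): wrapped in x, clipped in z."""
    result = []
    for dz in (-1, 0, 1):
        nz = z + dz
        if not 0 <= nz < rows:
            continue  # this direction does not wrap, so skip out-of-bounds rows
        for dx in (-1, 0, 1):
            if dx == 0 and dz == 0:
                continue  # the vertex itself is not a neighbor
            result.append((wrap_index(x + dx, cols), nz))
    return result
```

The explicit comparisons mirror the rule as written; in Python, `index % length` gives the same result for any offset, since `%` with a positive divisor never returns a negative value.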

But let’s throw another idea in here!