Hi,

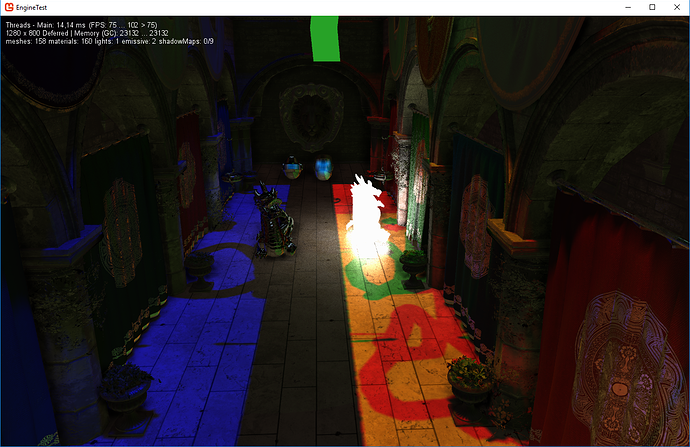

As a shader noob, I’m still trying to understand the mystery behind @kosmonautgames’s Deferred Engine (by the way, thank you very much for sharing this code!)

I think I understand the GBuffer now, but the lighting part remains quite nebulous …

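```csharp
private void DrawLights(List<PointLight> pointLights, List<DirectionalLight> dirLights, Vector3 cameraOrigin)
{
    // Bind the diffuse and specular light targets and clear them before any light is drawn
    _graphicsDevice.SetRenderTargets(_renderTargetLightBinding);
    _graphicsDevice.Clear(Color.TransparentBlack);

    // All point lights, then all directional lights, are drawn into the same targets
    DrawPointLights(pointLights, cameraOrigin);
    DrawDirectionalLights(dirLights, cameraOrigin);
}
```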

If I understand correctly, this method draws into two render targets (diffuse and specular, both contained in `_renderTargetLightBinding`):

- multiple point lights (and their shadows)

- multiple directional lights (but only one of them can be shadowed for the moment)
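Drawing into two targets at once is what multiple render targets (MRT) do: a single draw call binds both targets, and each pixel shader invocation returns one colour per bound target. The CPU-side sketch below emulates that for one full-screen draw. `ShadePixel`, `DrawFullscreen` and the lighting term are hypothetical, and it assumes (not verified against the engine's .fx files) that the light shaders output a diffuse and a specular colour together.

```csharp
using System;
using System.Numerics;

// CPU stand-ins for the two light targets bound together by SetRenderTargets
// (hypothetical 4-pixel "textures"; the real ones are GPU render targets).
var diffuseTarget = new Vector3[4];
var specularTarget = new Vector3[4];

// Hypothetical light "pixel shader": ONE invocation returns BOTH outputs,
// like an HLSL output struct with COLOR0 and COLOR1 semantics.
(Vector3 Diffuse, Vector3 Specular) ShadePixel(int x, Vector3 lightColor)
{
    float nDotL = 0.25f * x;                 // made-up lighting term per pixel
    return (lightColor * nDotL, lightColor * (nDotL * nDotL));
}

// One draw call: output 0 goes to target 0, output 1 goes to target 1.
void DrawFullscreen(Vector3 lightColor)
{
    for (int x = 0; x < diffuseTarget.Length; x++)
    {
        var output = ShadePixel(x, lightColor);
        diffuseTarget[x] = output.Diffuse;   // COLOR0 -> diffuse target
        specularTarget[x] = output.Specular; // COLOR1 -> specular target
    }
}

DrawFullscreen(Vector3.One);
Console.WriteLine($"{diffuseTarget[2]} / {specularTarget[2]}");
```

The point is that "two render targets" does not mean two passes: both targets are filled by the same draw.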

To do that, the shader(s) use:

- the albedo, normal and depth render targets

- one shadow map per light (which was calculated before)

- the direction, power, radius, etc. of each light
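A rough sketch of how those inputs might meet for one pixel of one point light: the depth sample plus the view ray give the surface position, the normal gives the angle to the light, the radius bounds the falloff, and the shadow map lookup becomes a 0..1 visibility factor. Everything here is an assumption for illustration (depth stored as linear distance along the view ray, a Lambert diffuse term, a linear falloff); the engine's actual depth encoding and BRDF likely differ. `ReconstructPosition` and `PointLightDiffuse` are hypothetical names.

```csharp
using System;
using System.Numerics;

// Assumed depth encoding: linear distance from the camera along the view ray.
Vector3 ReconstructPosition(Vector3 cameraOrigin, Vector3 viewRayDir, float depth)
    => cameraOrigin + Vector3.Normalize(viewRayDir) * depth;

// Diffuse contribution of ONE point light at ONE pixel (hypothetical helper).
Vector3 PointLightDiffuse(Vector3 position, Vector3 normal,
                          Vector3 lightPos, float lightRadius,
                          Vector3 lightColor, float intensity, float shadow)
{
    Vector3 toLight = lightPos - position;
    float distance = toLight.Length();
    if (distance >= lightRadius) return Vector3.Zero;   // outside the light's range

    Vector3 l = toLight / distance;                     // unit vector to the light
    float nDotL = MathF.Max(Vector3.Dot(Vector3.Normalize(normal), l), 0f);
    float attenuation = 1f - distance / lightRadius;    // assumed linear falloff
    // shadow: 1 = fully lit, 0 = fully shadowed (would come from the shadow map)
    return lightColor * (intensity * nDotL * attenuation * shadow);
}

// Camera at the origin looking down -Z; the pixel's depth sample is 5.
var position = ReconstructPosition(Vector3.Zero, new Vector3(0f, 0f, -1f), 5f);
var lit = PointLightDiffuse(position, Vector3.UnitZ, new Vector3(0f, 0f, -3f), 4f, Vector3.One, 2f, 1f);
var outOfRange = PointLightDiffuse(position, Vector3.UnitZ, new Vector3(0f, 0f, -10f), 4f, Vector3.One, 2f, 1f);
Console.WriteLine($"position {position}, lit {lit}, outOfRange {outOfRange}");
```

Note that nothing in this function knows about other lights: it handles exactly one light for one pixel.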

Am I right so far?

So my question is: how (and where) is all this information combined?

It looks like these two render targets (diffuse and specular) are the only ones used in this entire process, but how can that be?

For the point lights, the shader “DeferredPointLight.fx” (if it is the correct one) doesn’t seem to loop over a fixed number of lights, unlike what I saw in this thread: Struggling to find point light resources

I am sure that it is related to the spheres drawn in the DrawPointLight method, but I don’t understand how it works.
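One way to read the spheres: nothing loops over lights inside the shader. Instead the CPU issues one draw call per light, and the sphere (sized to the light radius) is just geometry whose rasterized pixels are the only ones the point light shader runs on. Since a point light contributes nothing beyond its radius, skipping the other pixels changes the result not at all but saves work. The toy below models this with a one-row orthographic "screen"; the scene values, the linear falloff and all function names are made up.

```csharp
using System;
using System.Numerics;

// Toy orthographic "screen": pixel x looks down -Z from (x, 0, 0), and its
// surface depth comes from a hypothetical depth buffer (flat wall at z = -4).
float[] depth = { 4f, 4f, 4f, 4f, 4f, 4f, 4f, 4f, 4f, 4f };
Vector3 SurfacePosition(int x) => new Vector3(x, 0f, -depth[x]);

// Point light falloff (assumed linear): exactly zero at and beyond the radius.
float Contribution(Vector3 surface, Vector3 lightPos, float radius)
{
    float d = Vector3.Distance(surface, lightPos);
    return d >= radius ? 0f : 1f - d / radius;
}

// Rasterizing the light's sphere only produces pixels whose view ray hits it;
// with this orthographic camera that is the sphere's disc in the XY plane.
bool SphereCoversPixel(int x, Vector3 center, float radius)
    => new Vector2(x - center.X, -center.Y).Length() <= radius;

int invocations = 0;   // how many times the "pixel shader" ran

float[] DrawPointLights((Vector3 Pos, float Radius)[] lights, bool useSphere)
{
    var target = new float[depth.Length];          // cleared light target
    foreach (var (pos, radius) in lights)          // CPU loop: one draw per light
    {
        for (int x = 0; x < depth.Length; x++)
        {
            if (useSphere && !SphereCoversPixel(x, pos, radius)) continue;
            invocations++;                         // each run handles ONE light
            target[x] += Contribution(SurfacePosition(x), pos, radius);
        }
    }
    return target;
}

var sceneLights = new[] { (new Vector3(2f, 0f, -4f), 1.5f), (new Vector3(7f, 0f, -3.5f), 1f) };

invocations = 0;
float[] withSpheres = DrawPointLights(sceneLights, useSphere: true);
int sphereInvocations = invocations;

invocations = 0;
float[] fullScreen = DrawPointLights(sceneLights, useSphere: false);
int fullInvocations = invocations;

Console.WriteLine($"sphere: {sphereInvocations} runs, full screen: {fullInvocations} runs");
Console.WriteLine(string.Join(", ", withSpheres));
```

With these two lights the sphere version runs the shader 6 times instead of 20 and produces identical results. Pixels 6 and 8 are covered by the second sphere yet still receive zero (the surface there is out of range); a real engine may trim such pixels further, for example with depth or stencil tests.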

For the directional lights, I guess “DeferredDirectionnalLight.fx” is used, but again, it seems to handle only one directional light. Wouldn’t calling this shader several times actually overwrite the result of the previous call? The result doesn’t seem to be stored in any render target other than the diffuse/specular ones …
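Whether a second directional light erases the first is decided by the blend state in effect during the draw, not by the shader. With an opaque blend each full-screen pass overwrites the target; with additive blending (in MonoGame terms, a `BlendState` with `ColorSourceBlend = Blend.One`, `ColorDestinationBlend = Blend.One` and `ColorBlendFunction = BlendFunction.Add`) each pass is summed onto what is already there, so one cleared pair of targets can collect any number of lights. The sketch assumes the engine uses the additive variant for its light passes, which is the usual deferred approach; the blend modes are modeled on the CPU and `DirectionalLight` is a hypothetical Lambert-only helper.

```csharp
using System;
using System.Numerics;

// The GPU's fixed-function blend step, applied to every pixel a draw writes.
// Only two modes are modeled: result = f(src, dst).
Vector3 BlendOpaque(Vector3 src, Vector3 dst) => src;          // One/Zero: overwrite
Vector3 BlendAdditive(Vector3 src, Vector3 dst) => src + dst;  // One/One, Add: accumulate

// Hypothetical directional light: Lambert term for one pixel's normal.
Vector3 DirectionalLight(Vector3 normal, Vector3 lightDir, Vector3 color)
    => color * MathF.Max(Vector3.Dot(normal, -Vector3.Normalize(lightDir)), 0f);

Vector3 normal = Vector3.UnitY;   // this pixel faces straight up
var lights = new[]
{
    (Dir: new Vector3(0f, -1f, 0f), Color: new Vector3(0.5f, 0.5f, 0.5f)), // white-ish, from above
    (Dir: new Vector3(0f, -1f, 0f), Color: new Vector3(0.25f, 0f, 0f)),    // reddish, from above
};

Vector3 opaque = Vector3.Zero, additive = Vector3.Zero; // Clear(Color.TransparentBlack)
foreach (var light in lights)                           // one full-screen pass per light
{
    Vector3 src = DirectionalLight(normal, light.Dir, light.Color);
    opaque = BlendOpaque(src, opaque);
    additive = BlendAdditive(src, additive);
}
Console.WriteLine($"opaque: {opaque}, additive: {additive}");
```

With the opaque mode only the last light survives; with the additive mode the pixel holds the sum of both, and the same mechanism lets point lights and directional lights share the targets.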

And at the end of the DrawLights method, how are the point light and directional light results combined in the diffuse/specular render targets?
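Once both kinds of lights have been summed into the diffuse and specular targets, those targets are typically combined with the G-buffer albedo in a separate, later full-screen pass. A common formulation, sketched here per pixel with made-up values, multiplies the albedo by the accumulated diffuse light and adds the specular light on top. `Compose` is a hypothetical name, and the engine's own composition step may also include ambient light, SSAO or other terms.

```csharp
using System;
using System.Numerics;

// Hypothetical final "compose" step for one pixel, run after all lights are drawn.
// Assumed formulation: albedo modulates diffuse light, specular is added on top;
// ambient and occlusion terms are omitted.
Vector3 Compose(Vector3 albedo, Vector3 diffuseLight, Vector3 specularLight)
    => albedo * diffuseLight + specularLight;   // component-wise multiply, then add

// Light buffers after point AND directional contributions were summed (additively).
Vector3 diffuseLight = new Vector3(0.5f, 0.5f, 0.5f) + new Vector3(0.25f, 0.25f, 0.25f);
Vector3 specularLight = new Vector3(0.125f, 0.125f, 0.125f);
Vector3 albedo = new Vector3(1f, 0.5f, 0f);     // orange-ish surface from the G-buffer

Vector3 finalColor = Compose(albedo, diffuseLight, specularLight);
Console.WriteLine(finalColor);
```

Keeping specular separate from diffuse is what makes this split possible: specular highlights are usually not tinted by the surface albedo in this kind of formulation.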