This is still driving me nuts.

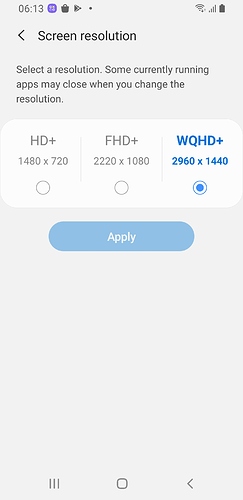

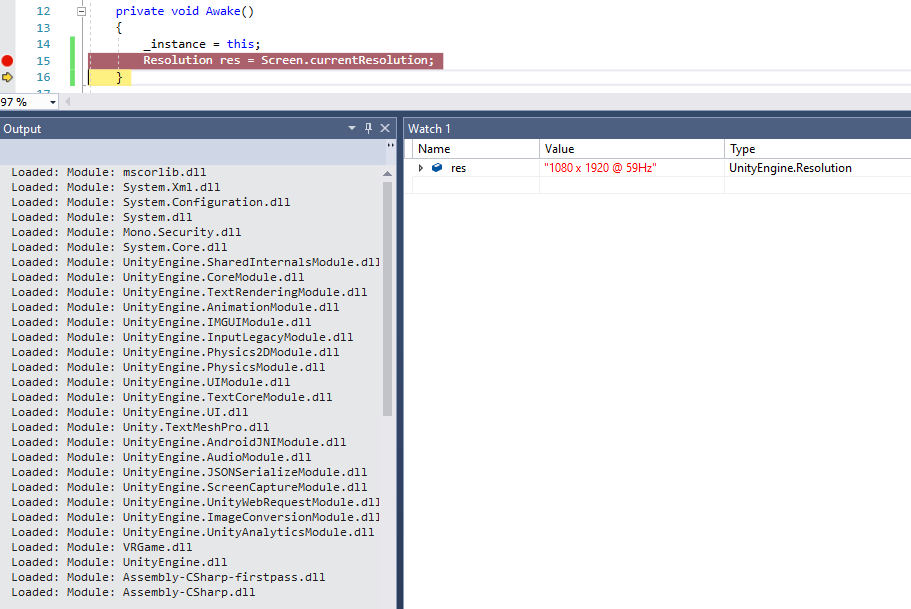

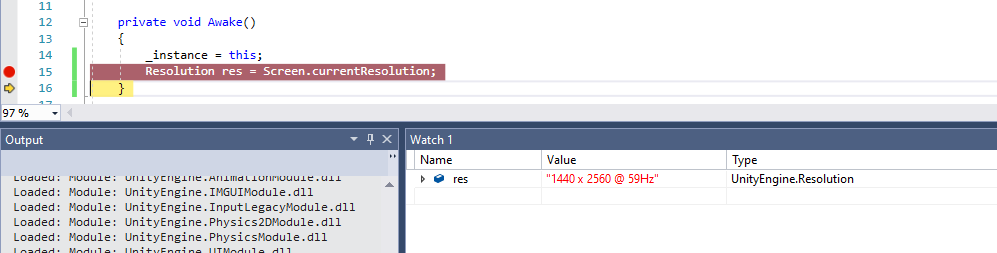

The bold sections below are from the debug output and show where the native resolution is changed from 1440x2960 to 1080x2220 (apparently for no reason other than the package name).

Relayout returned: old=(0,0,1440,2960) new=(0,0,1080,2220) req=(1440,2960)0 dur=10 res=0x7 s={true 3168907264} ch=true

Why does it get changed to a lower resolution at all?

I’ve now tested this on two different Samsung phones with similar results (the S7 drops to 1080x1920, the S9 drops to 1080x2220). Can anyone help, please?

Complete log extract from the problem package on a Samsung S9:

09-18 05:04:36.361 D/SurfaceView(27784): onWindowVisibilityChanged(0) true md5f54719fab2b5008f890ca4d350c867c1.MonoGameAndroidGameView{e293af5 VFE...... .F....I. 0,0-0,0} of ViewRootImpl@b4ad3ad[Activity1]

09-18 05:04:36.374 D/ViewRootImpl@b4ad3ad[Activity1](27784): Relayout returned: old=(0,0,1440,2960) new=(0,0,1080,2220) req=(1440,2960)0 dur=10 res=0x7 s={true 3168907264} ch=true

09-18 05:04:36.375 D/OpenGLRenderer(27784): createReliableSurface : 0xbcc80fc0(0xbce1b000)

09-18 05:04:36.383 D/SurfaceView(27784): surfaceCreated 1 #8 md5f54719fab2b5008f890ca4d350c867c1.MonoGameAndroidGameView{e293af5 VFE...... .F....ID 0,0-1080,2076}

09-18 05:04:36.384 D/SurfaceView(27784): surfaceChanged (1080,2076) 1 #8 md5f54719fab2b5008f890ca4d350c867c1.MonoGameAndroidGameView{e293af5 VFE...... .F....ID 0,0-1080,2076}

09-18 05:04:36.385 D/OpenGLRenderer(27784): makeCurrent EglSurface : 0x0 -> 0x0

09-18 05:04:36.394 I/mali_winsys(27784): new_window_surface() [1080x2220] return: 0x3000

09-18 05:04:36.398 D/OpenGLRenderer(27784): makeCurrent EglSurface : 0x0 -> 0xebd9dfc0

09-18 05:04:36.404 W/Gralloc3(27784): mapper 3.x is not supported

09-18 05:04:36.406 I/gralloc (27784): Arm Module v1.0

09-18 05:04:36.445 D/Mono (27784): DllImport searching in: '__Internal' ('(null)').

09-18 05:04:36.445 D/Mono (27784): Searching for 'java_interop_jnienv_get_static_object_field'.

09-18 05:04:36.445 D/Mono (27784): Probing 'java_interop_jnienv_get_static_object_field'.

09-18 05:04:36.445 D/Mono (27784): Found as 'java_interop_jnienv_get_static_object_field'.

09-18 05:04:36.452 D/Mono (27784): Assembly Ref addref MonoGame.Framework[0xebd9d120] -> System.Core[0xebd9d5a0]: 4

09-18 05:04:36.476 D/ViewRootImpl@b4ad3ad[Activity1](27784): **Relayout returned: old=(0,0,1080,2220) new=(0,0,1080,2220) req=(1080,2220)0 dur=9 res=0x1 s={true 3168907264} ch=false**

09-18 05:04:36.479 D/SurfaceView(27784): surfaceChanged (1080,2220) 1 #5 md5f54719fab2b5008f890ca4d350c867c1.MonoGameAndroidGameView{e293af5 VFE...... .F....ID 0,0-1080,2220}

09-18 05:04:36.482 D/ViewRootImpl@b4ad3ad[Activity1](27784): MSG_WINDOW_FOCUS_CHANGED 1 1

This is the same output from the exact same code, with just one letter of the package name changed. The resolution is not changed in this case:

Relayout returned: old=(0,0,1440,2960) new=(0,0,1440,2960) req=(1440,2960)0 dur=7 res=0x7 s={true 3131969536} ch=true

09-18 05:06:04.708 D/SurfaceView(29195): onWindowVisibilityChanged(0) true md5f54719fab2b5008f890ca4d350c867c1.MonoGameAndroidGameView{e293af5 VFE...... .F....I. 0,0-0,0} of ViewRootImpl@b4ad3ad[Activity1]

09-18 05:06:04.717 D/ViewRootImpl@b4ad3ad[Activity1](29195): Relayout returned: old=(0,0,1440,2960) new=(0,0,1440,2960) req=(1440,2960)0 dur=7 res=0x7 s={true 3131969536} ch=true

09-18 05:06:04.718 D/OpenGLRenderer(29195): createReliableSurface : 0xbcd45140(0xbaae1000)

09-18 05:06:04.728 D/SurfaceView(29195): surfaceCreated 1 #8 md5f54719fab2b5008f890ca4d350c867c1.MonoGameAndroidGameView{e293af5 VFE...... .F....ID 0,0-1440,2768}

09-18 05:06:04.729 D/SurfaceView(29195): surfaceChanged (1440,2768) 1 #8 md5f54719fab2b5008f890ca4d350c867c1.MonoGameAndroidGameView{e293af5 VFE...... .F....ID 0,0-1440,2768}

09-18 05:06:04.729 D/OpenGLRenderer(29195): makeCurrent EglSurface : 0x0 -> 0x0

09-18 05:06:04.741 I/mali_winsys(29195): new_window_surface() [1440x2960] return: 0x3000

09-18 05:06:04.743 D/OpenGLRenderer(29195): makeCurrent EglSurface : 0x0 -> 0xebd9eaa0

09-18 05:06:04.753 W/Gralloc3(29195): mapper 3.x is not supported

09-18 05:06:04.756 I/gralloc (29195): Arm Module v1.0

09-18 05:06:04.790 D/ViewRootImpl@b4ad3ad[Activity1](29195): Relayout returned: old=(0,0,1440,2960) new=(0,0,1440,2960) req=(1440,2960)0 dur=10 res=0x1 s={true 3131969536} ch=false

09-18 05:06:04.792 D/SurfaceView(29195): surfaceChanged (1440,2960) 1 #5 md5f54719fab2b5008f890ca4d350c867c1.MonoGameAndroidGameView{e293af5 VFE...... .F....ID 0,0-1440,2960}

09-18 05:06:04.794 D/ViewRootImpl@b4ad3ad[Activity1](29195): MSG_WINDOW_FOCUS_CHANGED 1 1
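For anyone who wants to compare the two extracts without reading them by eye, here is a rough sketch that pulls the sizes out of the `Relayout returned` lines and works out the scale factor between the requested and granted size. The function name `parse_relayout` is made up for this post, and I am assuming the four numbers in `old=` and `new=` are left, top, right, bottom; it only reads the log text and says nothing about why the system picks the smaller size.

```python
import re

# Pattern for the "Relayout returned" lines in the extracts above.
# Assumption: the four numbers in old=/new= are (left, top, right, bottom).
RELAYOUT = re.compile(
    r"Relayout returned: "
    r"old=\((\d+),(\d+),(\d+),(\d+)\) "
    r"new=\((\d+),(\d+),(\d+),(\d+)\) "
    r"req=\((\d+),(\d+)\)"
)


def parse_relayout(line):
    """Return old/new/requested sizes from one log line, or None if it is not a Relayout line."""
    m = RELAYOUT.search(line)
    if m is None:
        return None
    n = [int(g) for g in m.groups()]
    old = (n[2] - n[0], n[3] - n[1])
    new = (n[6] - n[4], n[7] - n[5])
    req = (n[8], n[9])
    # Scale of the granted size relative to the requested size (None if req is zero).
    scale = (new[0] / req[0], new[1] / req[1]) if req[0] and req[1] else None
    return {"old": old, "new": new, "req": req, "scale": scale}


if __name__ == "__main__":
    problem = ("Relayout returned: old=(0,0,1440,2960) new=(0,0,1080,2220) "
               "req=(1440,2960)0 dur=10 res=0x7 s={true 3168907264} ch=true")
    renamed = ("Relayout returned: old=(0,0,1440,2960) new=(0,0,1440,2960) "
               "req=(1440,2960)0 dur=7 res=0x7 s={true 3131969536} ch=true")
    for label, line in (("problem package", problem), ("renamed package", renamed)):
        print(label, parse_relayout(line))
```

On the S9 lines above, the problem package comes out at a scale of 0.75 on both axes (1080/1440 and 2220/2960), while the renamed package stays at 1.0.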

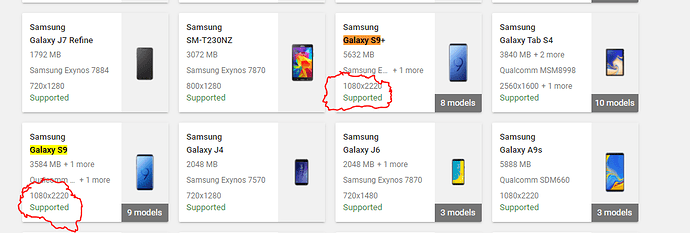

One crazy idea I’ve had: this app is on the Google Play Store, and the Play Store has stats about every single phone out there in the “Device Catalog” on the Google Play Console. For the S9+ it shows a “normal” resolution of 1080x2220.

So is this some kind of Google lookup saying, “Ah, this package name is in the store, and the store says this device has a resolution of X, therefore I will set the resolution to X, and not the Y that it is ACTUALLY running”?