Hi!

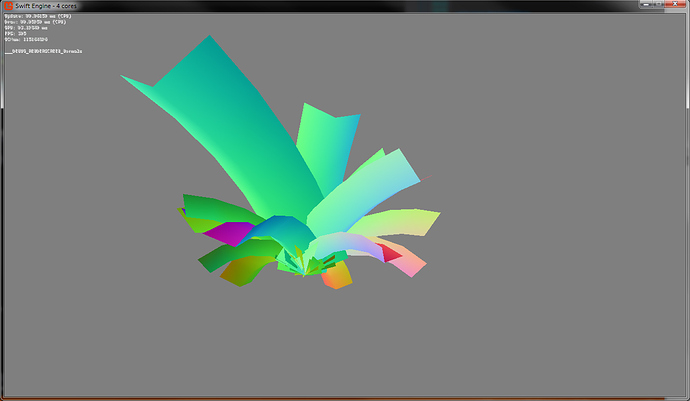

I’m using MRT (multiple render targets) and a custom processor to draw my models into a G-buffer.

I’m drawing to 4 RenderTargets (using device.DrawUserIndexedPrimitives instead of a SpriteBatch, if it matters):

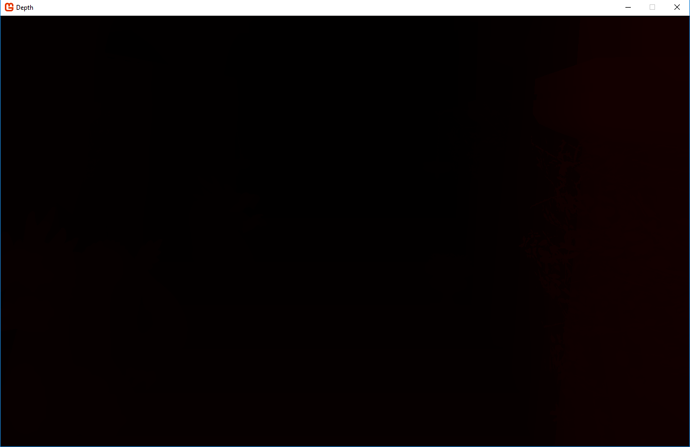

Curiously, the depth target looks as if every value were either 0 or 1, with nothing in between. This is how the depth is written in the vertex shader:

float4 worldPosition = mul(input.Position, World);

output.Depth.x = output.Position.z;

output.Depth.y = output.Position.w;
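A possible explanation for the "0 or 1" look, sketched under assumptions that are not in the code above: that the pixel shader writes Depth.x / Depth.y (clip-space z over w) into the depth target, and that the projection is a standard perspective matrix with depth range [0, 1], near plane n and far plane f. The values n = 1 and f = 1000 below are hypothetical, chosen only for illustration. For a point at view-space distance d in front of the camera:

$$
z_{clip} = \frac{f\,(d - n)}{f - n}, \qquad w_{clip} = d, \qquad \frac{z_{clip}}{w_{clip}} = \frac{f}{f - n}\left(1 - \frac{n}{d}\right)
$$

$$
n = 1,\ f = 1000: \quad d = 2 \Rightarrow 0.5005, \quad d = 10 \Rightarrow 0.9009, \quad d = 100 \Rightarrow 0.9910, \quad d = 1000 \Rightarrow 1
$$

With these assumed planes, everything farther than 10 units already maps above 0.9, so a depth target displayed directly can look almost uniformly white even though it holds distinct values. This is only one candidate cause; it does not rule out a problem elsewhere in the pipeline.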

The creation of the RenderTargets:

_RT_Color = new RenderTarget2D(GraphicsDevice, _BackbufferWidth, _BackbufferHeight, false, SurfaceFormat.Color, DepthFormat.Depth24Stencil8);

_RT_Normal = new RenderTarget2D(GraphicsDevice, _BackbufferWidth, _BackbufferHeight, false, SurfaceFormat.Color, DepthFormat.None);

_RT_Depth = new RenderTarget2D(GraphicsDevice, _BackbufferWidth, _BackbufferHeight, false, SurfaceFormat.Single, DepthFormat.None);

_RT_BlackMask = new RenderTarget2D(GraphicsDevice, _BackbufferWidth, _BackbufferHeight, false, SurfaceFormat.Color, DepthFormat.None);

But when I draw each RenderTarget to the screen to see what it contains, only the normals are OK.

_RT_Color is black whatever I try:

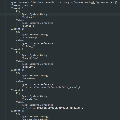

PixelShaderOutput output = (PixelShaderOutput)0;

output.Color = float4(1.0f, 0.5f, 0.7f, 0.5f); return output; // Force an early return so the remaining code is not executed

Here are the structs (are these semantics OK?):

struct VertexShaderInput

{

float4 Position : POSITION;

float3 Normal : NORMAL0;

float2 TexCoord : TEXCOORD0;

float3 Binormal : BINORMAL0;

float3 Tangent : TANGENT0;

};

struct VertexShaderOutput

{

float4 Position : SV_POSITION;

float2 TexCoord : TEXCOORD0;

float2 Depth : TEXCOORD1;

float3x3 tangentToWorld : TEXCOORD2;

float3 ViewDirection : TANGENT0;

};

struct PixelShaderOutput

{

float4 Color : SV_TARGET0; // COLORx instead?

float4 Normal : SV_TARGET1;

float4 Depth : SV_TARGET2;

float4 SunRays : SV_TARGET3;

};