Hello everyone. First off, I need to make my own model class. Loading models from the content pipeline is not an option in my games, as they need to be loaded / generated in real time.

So I've been trying to work out how to do a few things.

So far I have this.

I have 2 arrays, one for the originalVertexs and one for the renderVertexs.

The originalVertexs array contains all the vertices of the model.

The renderVertexs array applies rotation, adds the model's position in the real world, and will add any animation to them. I will do this for every vertex.

I also think that I will have to calculate the normals for all the renderVertexs each draw.

Now this seems like a hell of a lot of processing to be done on the CPU, and it's got me worried that my approach is completely wrong. Using Vector3.Transform 100s if not 1000s of times each draw seems like it will kill my game.

So my question is: am I approaching this wrong? Is there any way to offload this to the GPU, i.e. send all the vertices to the GPU with a matrix and get it calculated on the GPU?

Sorry if that's a stupid question; almost all my expertise in gaming is with 2D games, not 3D.

The important thing to remember is that you don’t have to manually translate and rotate every vertex in the model yourself before rendering. You treat the model as if it has never moved away from the origin (0, 0, 0), and supply a transformation matrix as part of the draw. The transformation matrix is applied to every vertex as part of the vertex shader on the GPU.
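To make that concrete, here is a minimal sketch of building such a transformation (world) matrix from a rotation and a position. The class name `WorldMatrixSketch`, the method `BuildWorld`, and the choice of a Y-axis rotation are only illustrative assumptions, not anything from the original poster's project.

```csharp
using Microsoft.Xna.Framework;

public static class WorldMatrixSketch
{
    // Hypothetical helper: rotate the model around its own origin first,
    // then move it to its position in the world. Order matters here.
    public static Matrix BuildWorld(float yawRadians, Vector3 position)
    {
        return Matrix.CreateRotationY(yawRadians) * Matrix.CreateTranslation(position);
    }
}
```

The vertex array itself never changes. You would rebuild only this one matrix when the model moves or turns, and hand it to the effect at draw time. Calling `Vector3.Transform(vertex, world)` on the CPU gives the same result for a single vertex, which is handy for checking the matrix, but it is not something you need to do per vertex per frame.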

As Konaju said, you treat the model as if it has never moved, and likewise you treat the normals as if they have never moved.

If you create your own models, even if you're loading them from file at run-time, then you have created them at some point. You might as well generate the normals for the model and save / load them too.

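To create a surface normal, you take the cross product of two edge vectors of the triangle and normalize the result. The order in which you created the vertices (the winding) determines whether the normal points out of the back face or the front face. Say you have 3 vertices A, B, C: then with d1 = C - B and d2 = B - A, a surface normal is found by Vector3.Normalize( Vector3.Cross( d1, d2 ) ). Normalizing d1 and d2 beforehand is harmless but not enough on its own, since the cross product of two unit vectors is only unit length when they are perpendicular.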
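As a small sketch of that calculation using MonoGame's `Vector3.Cross` and `Vector3.Normalize`: the class name `NormalHelper` and the method `ComputeFaceNormal` are made up for illustration, and the degenerate-triangle check is an extra assumption, not something from the post.

```csharp
using Microsoft.Xna.Framework;

public static class NormalHelper
{
    // Face normal of triangle A, B, C using the edge vectors
    // d1 = C - B and d2 = B - A. Reversing the vertex order flips the sign.
    public static Vector3 ComputeFaceNormal(Vector3 a, Vector3 b, Vector3 c)
    {
        Vector3 d1 = c - b;
        Vector3 d2 = b - a;
        Vector3 n = Vector3.Cross(d1, d2);

        // Assumption: return zero for a degenerate (zero-area) triangle
        // instead of dividing by zero during normalization.
        if (n.LengthSquared() < 1e-12f)
            return Vector3.Zero;

        return Vector3.Normalize(n);
    }
}
```

For A = (0, 0, 0), B = (1, 0, 0), C = (0, 1, 0) this gives (0, 0, -1); passing the vertices in the opposite order gives (0, 0, 1). You would run this once when the model is generated and store the result in the vertex data, not every draw.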

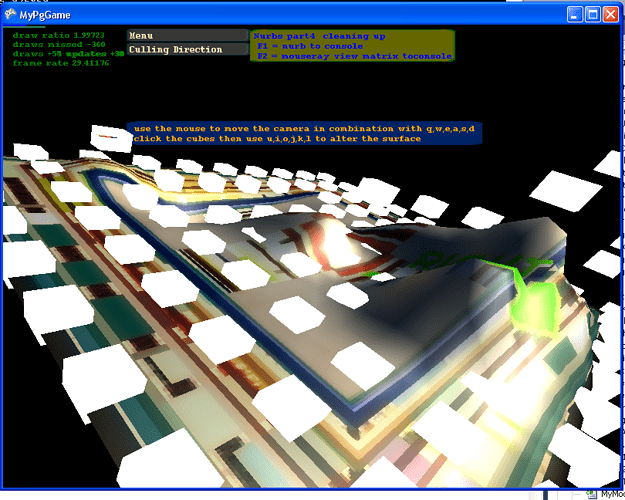

In this image I did what you are describing. This was only for the purpose of saving / loading them to a text file, aka testing. This is really slow: all the vertices were being resent to the GPU each time I altered something, which sounds like what you're doing, but you would never do that in a game.

This was an initial terrain-creation test. I was rewriting Dave F. Rogers' old NURBS code from his site, written in C, for C#. I never really got too far into it or finished it, though.

The C NURBS site can be found here

That's cool. I had a feeling that there would be a better way of doing it.

I do have a question though.

How do I apply a matrix to my verts?

I have a game camera that already has a matrix applied to the world, so how do I also apply a matrix to the vertex array?

You should set your matrix on your effect, or on the BasicEffect in MonoGame. That is passed to the GPU, and the GPU acts on it.

For example:

I have a cube that is fully generated in code, with the normals in the vertex format. I just call draw on it and send in the parameters like you would with a Draw or DrawString call, but in this case I pass in matrices instead of text, a source rectangle, or a rotation parameter, etc.

    public void DrawBasicCube(BasicEffect beffect, Matrix world, Matrix view, Matrix projection, Texture2D t)
    {
        beffect.EnableDefaultLighting();
        beffect.TextureEnabled = true;
        beffect.Texture = t;
        beffect.World = world;
        beffect.View = view;
        beffect.Projection = projection;

        GraphicsDevice device = beffect.GraphicsDevice;
        //device.Indices = The_IndexBuffer;
        //BxEngien.Gdevice.SetVertexBuffer(The_VertexBuffer);
        device.SetVertexBuffer(The_VertexBuffer);

        // there really is only one pass here
        foreach (EffectPass pass in beffect.CurrentTechnique.Passes)
        {
            pass.Apply();
            //BxEngien.Gdevice.DrawPrimitives(PrimitiveType.TriangleList, 0, NUM_TRIANGLES);
            device.DrawPrimitives(PrimitiveType.TriangleList, start_from, to_number_of_triangles);
        }
    }

Here I previously set the vertex data to the buffer just one time.

Then beffect is loaded with the info, and the effect passes tell the GPU how and where to draw the vertices.

The vertex format could simply be VertexPositionNormalTexture, or it could be something like the following, provided I was using a custom shader / effect that I wrote to do more with the matrix values or the data in the format.
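Setting the vertex data "just one time" could look roughly like this. It is only a sketch: the class name `BufferSketch` and the method `CreateStaticBuffer` are hypothetical, and it assumes the built-in `VertexPositionNormalTexture` format and a valid `GraphicsDevice`.

```csharp
using Microsoft.Xna.Framework.Graphics;

public static class BufferSketch
{
    // Uploads the vertices to the GPU once, e.g. when the model is generated.
    // After this, drawing only needs SetVertexBuffer and a world matrix.
    public static VertexBuffer CreateStaticBuffer(
        GraphicsDevice device, VertexPositionNormalTexture[] vertices)
    {
        var buffer = new VertexBuffer(
            device,
            typeof(VertexPositionNormalTexture),
            vertices.Length,
            BufferUsage.WriteOnly);

        buffer.SetData(vertices);
        return buffer;
    }
}
```

The returned buffer would play the role of `The_VertexBuffer` in the draw method. You would only call `SetData` again if the shape of the model itself changes, not when it moves or rotates.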

    public struct VertexMultitextured
    {
        public Vector3 Position;
        public Vector3 Normal;
        public Vector4 TextureCoordinate;
        public Vector4 TexWeights;

        // 14 floats * 4 bytes = 56 bytes per vertex.
        // For a cube with 36 vertices that is 2016 bytes,
        // just under 2 kilobytes for a cool skybox, win win.
        public static int SizeInBytes = (3 + 3 + 4 + 4) * sizeof(float);

        // Byte offsets follow the field order above: 0, 12, 24, 40.
        public static VertexElement[] VertexElements = new VertexElement[]
        {
            new VertexElement( 0, VertexElementFormat.Vector3, VertexElementUsage.Position, 0 ),
            new VertexElement( sizeof(float) * 3, VertexElementFormat.Vector3, VertexElementUsage.Normal, 0 ),
            new VertexElement( sizeof(float) * 6, VertexElementFormat.Vector4, VertexElementUsage.TextureCoordinate, 0 ),
            new VertexElement( sizeof(float) * 10, VertexElementFormat.Vector4, VertexElementUsage.TextureCoordinate, 1 ),
        };
    }
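To actually use a custom format like this, the element array has to be wrapped in a `VertexDeclaration` when the buffer is created. A rough sketch, where `CustomBufferSketch` and `CreateBuffer` are made-up names and the generic helper is just one possible way to do it:

```csharp
using Microsoft.Xna.Framework.Graphics;

public static class CustomBufferSketch
{
    // Builds a vertex buffer for any custom vertex struct, given the
    // VertexElement array that describes its layout.
    public static VertexBuffer CreateBuffer<T>(
        GraphicsDevice device, VertexElement[] elements, T[] vertices)
        where T : struct
    {
        // The declaration tells the GPU how to read each vertex.
        var declaration = new VertexDeclaration(elements);

        var buffer = new VertexBuffer(
            device, declaration, vertices.Length, BufferUsage.WriteOnly);

        buffer.SetData(vertices);
        return buffer;
    }
}
```

With the struct above, the call would be something like `CustomBufferSketch.CreateBuffer(device, VertexMultitextured.VertexElements, cubeVertices)`, where `cubeVertices` is a hypothetical array of the generated vertices. BasicEffect knows nothing about the second texture coordinate set, so the weights only do anything with a custom effect.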

Thanks everyone, I managed to get it all working.