I wanted to optimize my lighting with stencil culling when an unforeseen problem arose.

I have a curious bug now, and I have no idea what changed.

Basically, when I draw 1000 lights my FPS drops to 70, which is odd, since it used to be 170. But that's not the real issue. The issue is that even at many times the resolution, the framerate still stays at 70. It doesn't matter whether I render at 100x100, 1920x1200 or 3840x1200 (in all of those cases the framerate previously stayed above 70, so that part is fine).
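To show why a flat 70 fps is so strange: the pixel counts of these resolutions differ by orders of magnitude, so a pixel-bound workload should clearly react to the change. Here is a minimal sketch of that arithmetic. The dictionary and helper names are purely illustrative:

```python
# Pixel counts for the three resolutions mentioned above (names are illustrative).
resolutions = {
    "100x100": (100, 100),
    "1920x1200": (1920, 1200),
    "3840x1200": (3840, 1200),
}


def pixel_count(width: int, height: int) -> int:
    """Number of pixels a full-screen pass has to shade at this resolution."""
    return width * height


counts = {name: pixel_count(w, h) for name, (w, h) in resolutions.items()}

# How many times more pixels the largest resolution has than the smallest.
ratio = counts["3840x1200"] / counts["100x100"]

for name, n in counts.items():
    print(f"{name}: {n} pixels")
print(f"3840x1200 has {ratio:.0f}x the pixels of 100x100")
```

If the light pass were pixel-shader bound, a roughly 460x increase in pixels should show up in the frame time. A frame rate that stays constant points to a bottleneck that doesn't depend on resolution.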

Even if I reduce the pixel shader to a one-liner (`return float4(1,1,1,1);`), my frame time barely changes.

My vertex shader is as simple as it gets, too, and it makes no difference if I just return hardcoded values instead of calculating anything.

I then changed the light mesh to be a cube, so only 8 vertices. No difference.

Lighting cost usually scales linearly with the number of pixels covered, and I wonder why it doesn't anymore.

If I turn away from the lights, the scene renders normally: 1000 fps at low resolution, 200 fps at high resolution. That makes sense, since a lot of the work is pixel-shader bound.

So, is it a CPU problem? Possibly. When I check the performance profiler, over 50% of the time goes into the `_graphics.DrawIndexed` function, and beyond that I can't trace it.

But nothing changed there, I am 100% sure. I still use the same mesh, and it doesn't matter whether or not I pass the shader variables etc.
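Here is a rough back-of-the-envelope estimate of how much CPU-side cost per draw call would explain the drop. It assumes that each light is one `DrawIndexed` call and that per-call overhead is the only thing that grew. Under those assumptions, going from 170 to 70 fps adds about 8.4 ms per frame, or roughly 8.4 µs per draw call. The function and variable names are hypothetical and only serve the arithmetic:

```python
def frametime_ms(fps: float) -> float:
    """Convert frames per second to milliseconds per frame."""
    return 1000.0 / fps


# Numbers from the post. ASSUMPTION: one DrawIndexed call per light.
n_lights = 1000
before_ms = frametime_ms(170)  # old frame time with 1000 lights
after_ms = frametime_ms(70)    # new frame time with 1000 lights

# Extra time per frame, spread evenly over the draw calls.
# ASSUMPTION: all of the extra time is per-call overhead.
extra_ms = after_ms - before_ms
per_draw_us = extra_ms / n_lights * 1000.0  # ms per call -> microseconds per call

print(f"before: {before_ms:.2f} ms, after: {after_ms:.2f} ms")
print(f"extra: {extra_ms:.2f} ms/frame, ~{per_draw_us:.1f} us per draw call")
```

A fixed cost per call like this doesn't depend on resolution or shader complexity, which would fit the symptoms above.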

Any ideas?

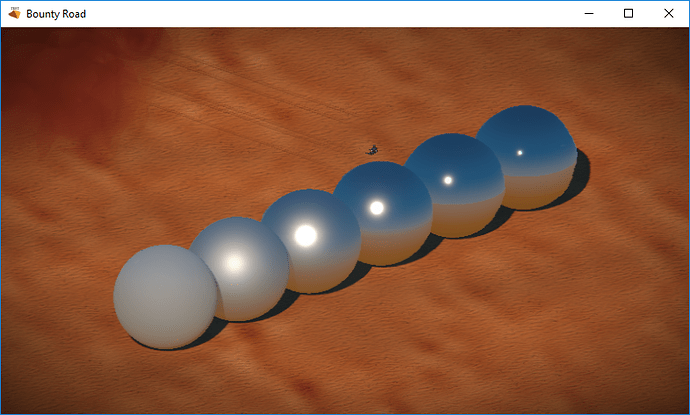

EDIT: Hmm. I've reverted to a very old build (the one I took this screenshot with), and I get the same results. It doesn't matter whether the lights have volume or not, the framerate stays the same.

So I would guess the cause is outside of the program. Maybe some Windows thing? I have no clue. Really confused.

I have checked my processor clock rate and it's normal, so I don't believe it's in a powered-down state.

EDIT: WOW, after a long time I found the error!

In my DirectX properties (Debug->Graphics->DirectX properties) I had set some flags. Once I disabled them, everything ran well again.