I uploaded the test project that I built. Can you help me take a look at why my light buffer is different? Here is the link. Thanks


The code you sent works correctly, I think. The light is attached to the camera and behaves like it should.

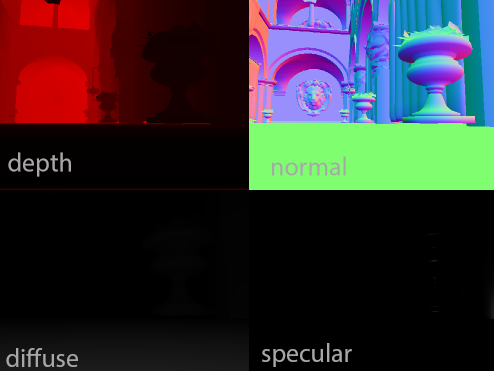

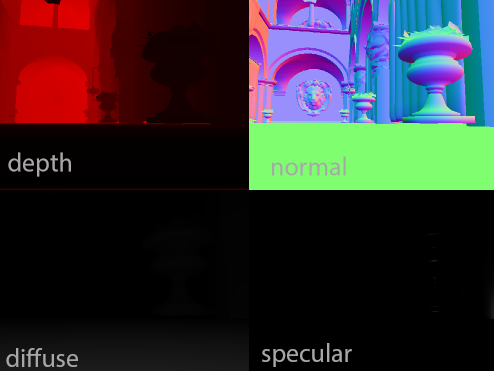

I didn't attach any light to the camera; the two are at different positions. Why is it so different, when my data is at almost the same scale as in your demo? Or am I missing something?

I’ve found your mistake then.

You pass the light position as (0,0,0), but in the shader we calculate lighting in view space.

That means the light is at the camera position, but the mesh is somewhere else.

So you need to convert the position to view space.

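```csharp
void DrawPointLight(Vector3 position, Vector3 color, float radius, float intensity)
{
    …
    // Convert the world-space light position into view space, where the shader computes lighting.
    pointlightFx.Parameters["lightPosition"].SetValue(Vector3.Transform(position, _view));
    …
}
```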

I haven't tested it, but that's the idea.
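The following is a minimal C++ sketch of what `Vector3.Transform(position, _view)` does to a light position. It assumes MonoGame's row-vector convention (`v' = v * M`) and a right-handed look-at view matrix with the same layout as `Matrix.CreateLookAt`. `Vec3`, `Mat4`, `lookAt` and `transformPoint` are hypothetical stand-ins, not engine or MonoGame code.

```cpp
#include <cassert>
#include <cmath>

// Hand-rolled stand-ins for MonoGame's Vector3/Matrix (hypothetical names).
struct Vec3 { float x, y, z; };

static Vec3 sub(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
static float dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
static Vec3 cross(Vec3 a, Vec3 b) {
    return {a.y * b.z - a.z * b.y, a.z * b.x - a.x * b.z, a.x * b.y - a.y * b.x};
}
static Vec3 normalize(Vec3 v) {
    float len = std::sqrt(dot(v, v));
    assert(len > 0.0f);  // a zero vector has no direction
    return {v.x / len, v.y / len, v.z / len};
}

// 4x4 matrix, row-vector convention (v' = v * M) as in XNA/MonoGame.
struct Mat4 { float m[4][4]; };

// Right-handed look-at view matrix laid out like Matrix.CreateLookAt.
static Mat4 lookAt(Vec3 eye, Vec3 target, Vec3 up) {
    Vec3 z = normalize(sub(eye, target));  // the camera looks down -z
    Vec3 x = normalize(cross(up, z));
    Vec3 y = cross(z, x);
    Mat4 view = {{{x.x, y.x, z.x, 0.0f},
                  {x.y, y.y, z.y, 0.0f},
                  {x.z, y.z, z.z, 0.0f},
                  {-dot(x, eye), -dot(y, eye), -dot(z, eye), 1.0f}}};
    return view;
}

// Position transform (implicit w = 1): the translation row is added,
// as with Vector3.Transform(position, matrix).
static Vec3 transformPoint(Vec3 p, const Mat4& M) {
    return {p.x * M.m[0][0] + p.y * M.m[1][0] + p.z * M.m[2][0] + M.m[3][0],
            p.x * M.m[0][1] + p.y * M.m[1][1] + p.z * M.m[2][1] + M.m[3][1],
            p.x * M.m[0][2] + p.y * M.m[1][2] + p.z * M.m[2][2] + M.m[3][2]};
}
```

Take a camera at (0,0,10) looking at the origin. A light at the world origin then lands at (0,0,-10) in view space, ten units in front of the camera. Passing (0,0,0) straight to the shader instead puts the light at the camera, which is why it looked attached to it.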

OMG, it worked. You're great. Thanks so much!

How can I convert the existing view-space normal buffer back to world-space normals?

Uh, multiply by the inverse view matrix, and then it's just like default world-space normals? Maybe you should ask on gamedev.net; they are super knowledgeable and eager to help with such questions.
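Normals are directions, so only the upper-left 3x3 (rotation) part of the view matrix matters for them. If that rotation has no scale, its inverse is simply its transpose. The C++ sketch below assumes the row-vector convention used elsewhere in the thread (`mul(vector, matrix)`). Under that convention, multiplying by the transpose is the same as HLSL `mul((float3x3)View, n)`. `Mat3`, `yawRotation` and the helper names are hypothetical.

```cpp
#include <cassert>
#include <cmath>

struct Vec3 { float x, y, z; };
// Upper-left 3x3 of a view matrix, row-vector convention (v' = v * R).
struct Mat3 { float m[3][3]; };

// Row vector times matrix: the equivalent of HLSL mul(v, R).
static Vec3 mulRow(Vec3 v, const Mat3& R) {
    return {v.x * R.m[0][0] + v.y * R.m[1][0] + v.z * R.m[2][0],
            v.x * R.m[0][1] + v.y * R.m[1][1] + v.z * R.m[2][1],
            v.x * R.m[0][2] + v.y * R.m[1][2] + v.z * R.m[2][2]};
}

// Matrix times column vector: the equivalent of HLSL mul(R, v), i.e. v * transpose(R).
static Vec3 mulColumn(const Mat3& R, Vec3 v) {
    return {R.m[0][0] * v.x + R.m[0][1] * v.y + R.m[0][2] * v.z,
            R.m[1][0] * v.x + R.m[1][1] * v.y + R.m[1][2] * v.z,
            R.m[2][0] * v.x + R.m[2][1] * v.y + R.m[2][2] * v.z};
}

// Hypothetical view rotation: yaw about the Y axis (orthonormal, no scale).
static Mat3 yawRotation(float radians) {
    float c = std::cos(radians), s = std::sin(radians);
    Mat3 r = {{{c, 0.0f, -s}, {0.0f, 1.0f, 0.0f}, {s, 0.0f, c}}};
    return r;
}

// World -> view for a normal: translation is irrelevant for directions.
static Vec3 worldToViewNormal(Vec3 n, const Mat3& viewRot) { return mulRow(n, viewRot); }

// View -> world: multiply by the inverse rotation, which for an orthonormal
// matrix is its transpose, so no general matrix inverse is needed.
static Vec3 viewToWorldNormal(Vec3 n, const Mat3& viewRot) { return mulColumn(viewRot, n); }
```

Converting a normal to view space and back with the same rotation returns the original normal. That round trip is the property the G-buffer conversion relies on.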

WOW.

This thread has moved on a bit…

Found it… I was wondering: should I reconstruct a world-normal buffer and store it in a texture, or compute it with a helper function every time I need it in the shader? Which one is faster?

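```hlsl
// View space -> world space. (float3x3)View drops the translation, and mul(M, v)
// multiplies by the transpose, i.e. the inverse of the view rotation (assuming no scale).
float3 GetWorldNormal(float3 viewspaceNormal)
{
    float3 worldNormal = mul((float3x3)View, viewspaceNormal);
    return worldNormal;
}

// World space -> view space.
float3 GetViewNormal(float3 worldspaceNormal)
{
    float3 viewNormal = mul(transpose((float3x3)View), worldspaceNormal);
    return normalize(viewNormal);
}
```

Matrix multiplication is faster than one more render target.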

But it's probably even faster to do some matrix multiplications on the CPU and not worry about world space at all.
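Here is a C++ sketch of the CPU-side idea. Convert a light's direction into view space once per frame and upload it, so the shader never needs world space. A direction is transformed with an implicit w = 0, so the view matrix's translation row is skipped. On the C# side, MonoGame's `Vector3.TransformNormal(direction, _view)` does this. The helper names below are hypothetical, and the same row-vector look-at layout as `Matrix.CreateLookAt` is assumed.

```cpp
#include <cassert>
#include <cmath>

struct Vec3 { float x, y, z; };
struct Mat4 { float m[4][4]; };  // row-vector convention: v' = v * M

static Vec3 sub(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
static float dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
static Vec3 cross(Vec3 a, Vec3 b) {
    return {a.y * b.z - a.z * b.y, a.z * b.x - a.x * b.z, a.x * b.y - a.y * b.x};
}
static Vec3 normalize(Vec3 v) {
    float len = std::sqrt(dot(v, v));
    assert(len > 0.0f);
    return {v.x / len, v.y / len, v.z / len};
}

// Right-handed look-at view matrix laid out like Matrix.CreateLookAt.
static Mat4 lookAt(Vec3 eye, Vec3 target, Vec3 up) {
    Vec3 z = normalize(sub(eye, target));
    Vec3 x = normalize(cross(up, z));
    Vec3 y = cross(z, x);
    Mat4 view = {{{x.x, y.x, z.x, 0.0f},
                  {x.y, y.y, z.y, 0.0f},
                  {x.z, y.z, z.z, 0.0f},
                  {-dot(x, eye), -dot(y, eye), -dot(z, eye), 1.0f}}};
    return view;
}

// Direction (w = 0): rotation only, translation row ignored.
static Vec3 transformDirection(Vec3 d, const Mat4& M) {
    return {d.x * M.m[0][0] + d.y * M.m[1][0] + d.z * M.m[2][0],
            d.x * M.m[0][1] + d.y * M.m[1][1] + d.z * M.m[2][1],
            d.x * M.m[0][2] + d.y * M.m[1][2] + d.z * M.m[2][2]};
}

// Position (w = 1): rotation plus translation, for comparison.
static Vec3 transformPoint(Vec3 p, const Mat4& M) {
    Vec3 r = transformDirection(p, M);
    return {r.x + M.m[3][0], r.y + M.m[3][1], r.z + M.m[3][2]};
}
```

Suppose the camera sits at (10,0,0) looking at the origin. The world direction (1,0,0) then becomes (0,0,1) in view space, pointing back toward the viewer. Treating the same vector as a point would wrongly pick up the camera translation and give z = -9.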

Does the spotlight work? How can I make it work?

I've re-enabled directional lights (sun lights), but not spotlights yet. I think the old spotlight shader files are still there, though, in "unused".

The idea is the same as for point lights, but you also pass a light direction. Then, when computing the light for each pixel, you compare the direction from the light to the pixel with the spotlight direction (using a dot product) and multiply the light by this value.
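Here is a minimal C++ sketch of that spotlight term. It assumes the light position, spotlight direction and pixel position are all in the same (view) space. `spotFactor` and its `exponent` parameter, used here to narrow the cone, are hypothetical and not taken from the engine's shaders. The returned value would multiply the existing point-light result.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

struct Vec3 { float x, y, z; };

static Vec3 sub(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
static float dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
static Vec3 normalize(Vec3 v) {
    float len = std::sqrt(dot(v, v));
    assert(len > 0.0f);
    return {v.x / len, v.y / len, v.z / len};
}

// Spotlight factor: cosine of the angle between the spotlight axis and the
// light-to-pixel direction, clamped to 0 so pixels beside or behind the light
// get nothing, then raised to a hypothetical exponent to tighten the cone.
static float spotFactor(Vec3 lightPos, Vec3 spotDir, Vec3 pixelPos, float exponent) {
    Vec3 lightToPixel = normalize(sub(pixelPos, lightPos));
    float cosAngle = std::max(dot(lightToPixel, normalize(spotDir)), 0.0f);
    return std::pow(cosAngle, exponent);
}
```

A pixel on the spotlight axis gets 1, a pixel 60 degrees off-axis gets 0.5 with exponent 1 (0.25 with exponent 2), and anything at 90 degrees or behind gets 0.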

Nice… looking forward to your spotlight implementation.

@kosmonautgames, is that environment your own creation or a generic one available somewhere? I think I found a code project page with it… just curious.

I think I provided a link to the meshes of the scene in the download. They are generic and were made for graphics research.

Look for "Crytek Sponza".

I had a look at your code, and there is one thing I don't understand.

Sometimes you use linear depth, in `DrawBasic_VSOut DrawBasic_VertexShader(DrawBasic_VSIn input)`:

```hlsl
Output.Depth = mul(input.Position, WorldView).z / -FarClip;
```

and sometimes not, in `float4 DrawBasicVSM_PixelShader(DrawBasic_VSOut input) : SV_TARGET`:

```hlsl
float depth = input.Depth.x / input.Depth.y;
```

How is it possible to compare the two values to make shadows?
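The two lines produce different encodings of the same distance, so they can only be compared after converting one into the other. The C++ sketch below puts them side by side and inverts z/w back to the linear scale. It assumes a right-handed perspective projection laid out like MonoGame's `Matrix.CreatePerspectiveFieldOfView` (M33 = f/(n-f), M43 = n·f/(n-f), M34 = -1), which maps z/w to [0, 1]. The function names and the near/far values in the example are hypothetical.

```cpp
#include <cassert>
#include <cmath>

// Linear depth as in DrawBasic_VertexShader: view-space z is negative in front of
// a right-handed camera, so dividing by -farClip maps distance 0..far to 0..1.
static float linearDepth(float viewZ, float farClip) { return viewZ / -farClip; }

// Projected depth z/w for the assumed projection layout. The third column gives
// clipZ = viewZ * f/(n-f) + n*f/(n-f) and the fourth gives clipW = -viewZ.
static float projectedDepth(float viewZ, float nearClip, float farClip) {
    float clipZ = viewZ * farClip / (nearClip - farClip) + nearClip * farClip / (nearClip - farClip);
    float clipW = -viewZ;
    return clipZ / clipW;
}

// Invert z/w back to the same 0..1 linear scale as linearDepth (distance / far).
static float projectedToLinear(float d, float nearClip, float farClip) {
    return nearClip / (farClip - d * (farClip - nearClip));
}
```

With near = 1 and far = 100, a point 10 units in front of the camera has linear depth 0.1 but z/w of about 0.909. Comparing the raw values would be meaningless, while `projectedToLinear` recovers 0.1.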

I don't use linear depth for shadows (yet).

Hmm, OK, so linear depth is only used for the deferred pass and lighting, and it's back to z/w for shadows and the rest of the post-processing.

I thought you used linear depth for SSAO too (which I had to do) and for the rest of the graphics pipeline.

Everything uses linear view-space depth except shadow mapping (so yes, SSAO uses linear depth). I actually changed that today, so shadow mapping uses linear depth too (but that's a bit more expensive). Not everything is integrated yet, so no shadow updates have been pushed to the server yet.


I actually switched to linear depth mainly for SSAO, though I will admit my implementation is not great.

I have other stuff to work on right now though.

OK, I think the thing I missed was in:

```hlsl
float3 lightVectorWS = -mul(float4(lightVector, 0), InverseView).xyz;
float depthInLS = getDepthInLS(float4(input.PositionVS, 1), lightVectorWS);
float shadowVSM = chebyshevUpperBound(depthInLS, lightVectorWS);
```

I was wondering how my shadows could work with my non-linear depth while yours work with the same maths.
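The Chebyshev test at the heart of variance shadow mapping never asks which depth encoding is used. It only needs the stored moments and the receiver depth to use the same one, which is why linear and z/w depth can both work with the same maths. The value of the minimum variance needed in practice does depend on the encoding. Below is a C++ sketch of the standard bound. Its signature (explicit moments plus a `minVariance` clamp) is an assumption and differs from the engine's `chebyshevUpperBound(depthInLS, lightVectorWS)`, which presumably samples the moments itself.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// Variance shadow map test via Chebyshev's one-tailed inequality (hypothetical
// signature). `mean` and `meanSq` are E[d] and E[d^2] read from the filtered
// shadow map; `depth` is the receiver's depth in the same encoding.
static float chebyshevUpperBoundSketch(float mean, float meanSq, float depth, float minVariance) {
    if (depth <= mean) return 1.0f;  // receiver is not behind the average occluder: lit
    float variance = std::max(meanSq - mean * mean, minVariance);
    float d = depth - mean;
    return variance / (variance + d * d);  // upper bound on the lit fraction
}
```

With moments (0.5, 0.26), the variance is 0.01. A receiver at depth 0.4 is fully lit, one at 0.6 gets a bound of 0.5, and the bound falls quickly for receivers farther behind the occluders.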

Another question:

To sample from the cube map, isn't it enough to call texCUBE with the light direction vector (your lightVectorWS), instead of working out the transformation with getDepthInLS (LS = light space, right?)? Some tutorials say it is not necessary to normalize the light vector, others say to… I'm lost; maybe my shadows are only working by chance…
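On normalization, cube-map lookups only use the direction of the vector, not its length. The hardware picks the face from the component with the largest magnitude and divides the other two by it. The C++ sketch below follows the face table in the OpenGL specification; other APIs orient the faces differently, but the divide-by-major-axis step is the same idea. `cubeLookup` and `CubeCoord` are hypothetical names. If the shadow cube map stores distance to the light (an assumption here), the vector's length still matters for the value you compare against, just not for the lookup.

```cpp
#include <cassert>
#include <cmath>

struct CubeCoord { int face; float s, t; };  // face 0..5 = +X, -X, +Y, -Y, +Z, -Z

// Cube-map face selection and (s, t) per the OpenGL specification's table.
static CubeCoord cubeLookup(float rx, float ry, float rz) {
    float ax = std::fabs(rx), ay = std::fabs(ry), az = std::fabs(rz);
    int face;
    float sc, tc, ma;
    if (ax >= ay && ax >= az) {
        ma = ax;
        if (rx >= 0.0f) { face = 0; sc = -rz; tc = -ry; }
        else            { face = 1; sc = rz;  tc = -ry; }
    } else if (ay >= az) {
        ma = ay;
        if (ry >= 0.0f) { face = 2; sc = rx; tc = rz; }
        else            { face = 3; sc = rx; tc = -rz; }
    } else {
        ma = az;
        if (rz >= 0.0f) { face = 4; sc = rx;  tc = -ry; }
        else            { face = 5; sc = -rx; tc = -ry; }
    }
    assert(ma > 0.0f);  // a zero vector selects no face
    // Dividing by the major-axis magnitude is why the vector's length cancels out.
    return {face, 0.5f * (sc / ma + 1.0f), 0.5f * (tc / ma + 1.0f)};
}
```

For example, (1, 0.5, -0.25) and (4, 2, -1) both select the +X face at (s, t) = (0.625, 0.25).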