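```hlsl
// Pack three [0,1] channels into one float holding the 24-bit integer R*65536 + G*256 + B of their bytes.
#define f3_f(c) (dot(round((c) * 255), float3(65536, 256, 1)))
// Unpack: .b is exactly byte/256; .r and .g also carry small contributions from the lower bytes.
#define f_f3(f) (frac((f) / float3(16777216, 65536, 256)))
```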
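Why only three bytes fit: `f3_f` builds an integer of at most 2^24 - 1 and stores it as an ordinary float value. A 32-bit float has a 24-bit significand, so it represents every integer exactly only up to 2^24. The C sketch below is a CPU-side model of that arithmetic, not shader code. `pack3`, `unpack3` and `pack4_numeric` are hypothetical names, and `unpack3` decodes with integer shifts rather than the `frac` approach used by `f_f3`.

```c
#include <assert.h>
#include <stdint.h>

/* Model of f3_f: three [0,1] channels become the integer r*65536 + g*256 + b. */
static float pack3(float r, float g, float b)
{
    /* Round each channel to its byte value (valid for inputs in [0, 1]). */
    uint32_t ri = (uint32_t)(r * 255.0f + 0.5f);
    uint32_t gi = (uint32_t)(g * 255.0f + 0.5f);
    uint32_t bi = (uint32_t)(b * 255.0f + 0.5f);
    /* Largest result is 16777215 = 2^24 - 1, still exact as a float. */
    return (float)(ri * 65536u + gi * 256u + bi);
}

/* Recover the three bytes. The float-to-integer conversion is exact because
   every integer below 2^24 is representable in a 32-bit float. */
static void unpack3(float f, uint8_t out[3])
{
    assert(f >= 0.0f && f < 16777216.0f);
    uint32_t v = (uint32_t)f;
    out[0] = (uint8_t)(v >> 16);
    out[1] = (uint8_t)(v >> 8);
    out[2] = (uint8_t)v;
}

/* The same idea extended to four bytes needs integers up to 2^32 - 1, but the
   significand holds only 24 bits, so low-order bits are rounded away whenever
   the top byte is non-zero. */
static float pack4_numeric(uint8_t r, uint8_t g, uint8_t b, uint8_t a)
{
    uint32_t v = ((uint32_t)r << 24) | ((uint32_t)g << 16) | ((uint32_t)b << 8) | a;
    return (float)v;
}
```

For example, `pack3(1.0f, 1.0f, 1.0f)` gives 16777215.0f and round-trips exactly, whereas `pack4_numeric(1, 0, 0, 1)` and `pack4_numeric(1, 0, 0, 0)` both produce 16777216.0f, so the fourth byte cannot be stored as a plain numeric value.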

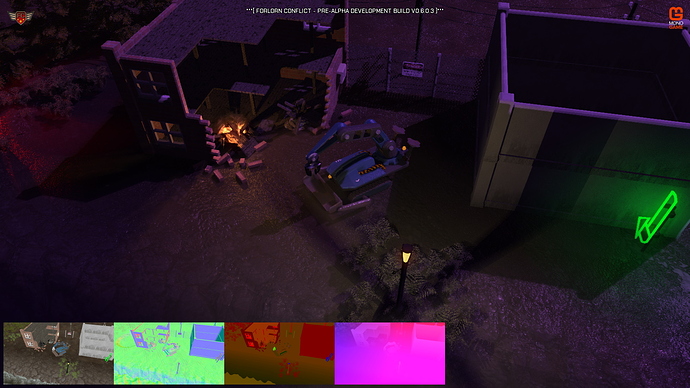

This wasn’t a problem initially, as I only had material properties for roughness, metalness and emission in the game, so I could reluctantly accept the lost bytes. However, I’m now adding ambient occlusion and might later use the bytes that could be free in the colour and normal layers for other properties. I’ve managed to create a nasty version that crams emission and occlusion into the third byte, but that only gives each of them 16 levels (4 bits). It looks okay, but it still wastes data. The current code for the material layer is below.

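```hlsl
// Decode one packed float into four [0,1] channels.
// .r and .g are full 8-bit channels; .b and .a share the third byte as two 4-bit nibbles.
inline float4 f_f4(float v)
{
    float3 f3 = f_f3(v);

    // f_f3 returns the low byte as byte/256, so scale by 256 (not 255) to recover it exactly.
    uint c = uint(round(f3.b * 256.0f));
    uint b = (c & 0xF0) >> 4; // high nibble
    uint a = c & 0x0F;        // low nibble

    return float4(f3.r, f3.g, float(b) / 15.0f, float(a) / 15.0f);
}

// Encode four [0,1] channels into one float, quantising .b and .a to 4 bits each.
inline float f4_f(float4 color)
{
    uint b = uint(round(saturate(color.b) * 15.0f)) << 4; // high nibble
    uint a = uint(round(saturate(color.a) * 15.0f));      // low nibble
    uint c = a | b;

    return f3_f(float3(color.r, color.g, float(c) / 255.0f));
}
```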
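The nibble scheme can be checked on the CPU. This C sketch models `f3_f` and the `.b` output of `f_f3` with exact integer arithmetic; `f_f3_blue`, `to_nibble`, `pack_material` and `shared_byte` are hypothetical helper names. Because `f_f3` returns the low byte as byte/256 rather than byte/255, multiplying `f3.b` by 255.0f before truncating yields one less than the stored byte for any non-zero value, which scrambles both nibbles; multiplying by 256.0f recovers it exactly.

```c
#include <assert.h>
#include <stdint.h>

/* Model of f_f3's blue output: frac(f / 256), which equals lowByte / 256. */
static float f_f3_blue(float f)
{
    float x = f / 256.0f;           /* exact: division by a power of two */
    return x - (float)(uint32_t)x;  /* fractional part, also exact */
}

/* Quantise a [0,1] value to a 4-bit nibble (0..15), rounding to nearest. */
static uint32_t to_nibble(float v)
{
    assert(v >= 0.0f && v <= 1.0f);
    return (uint32_t)(v * 15.0f + 0.5f);
}

/* Build the packed value from two full bytes and two nibbles, as f4_f does. */
static float pack_material(uint8_t r, uint8_t g, float b, float a)
{
    uint32_t c = (to_nibble(b) << 4) | to_nibble(a);
    return (float)(((uint32_t)r << 16) | ((uint32_t)g << 8) | c);
}

/* Recover the shared byte from the frac-based decode with a given scale. */
static uint32_t shared_byte(float packed, float scale)
{
    return (uint32_t)(f_f3_blue(packed) * scale);
}
```

For example, with b = 1.0 and a = 0.0 the shared byte is 0xF0. Decoding it with a scale of 256 gives 0xF0 back, while a scale of 255 gives 0xEF, i.e. nibbles 14 and 15 instead of 15 and 0.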

```hlsl
float4 HFXDeferedDataPixelShaderFunction(HFXDeferedDataVertexShaderOutput input) : COLOR0
{
    float3 f3Normal;
    float3 f3Color;
    float4 f4Material;
    float4 f4Final;

    // Get normal from sampler and combine with world normal
    f3Normal = NormalMapConstant * (NormalMap.Sample(NormalSampler, input.TextureCoordinate).xyz - float3(0.5, 0.5, 0.5));
    f3Normal = input.Normal + (f3Normal.x * input.Tangent + f3Normal.y * input.Binormal);
    f3Normal = normalize(f3Normal);
    f3Normal = 0.5f * (f3Normal + 1.0f);

    // Get texture color from sampler
    f3Color = tex2D(TextureSampler, input.TextureCoordinate).xyz;

    // Get material properties from sampler
    f4Material = tex2D(MaterialSampler, input.TextureCoordinate);

    // Encode data
    f4Final = float4(f3_f(f3Color),
                     f3_f(f3Normal),
                     f4_f(f4Material),
                     1.0f - (input.Depth.x / input.Depth.y));

    // Return final
    return f4Final;
}
```

From all my reading, this wasn’t easy in earlier shader models. I’ve seen many attempts at it, none of which worked for me, and some people say it isn’t possible. However, Shader Model 4 has two intrinsics, `asfloat` and `asuint`, that should be able to do exactly this, yet despite trying a lot of different things I can’t get it to work. This only needs to work on Windows with DirectX. Has anyone been able to do this, and could you provide `f4_f` and `f_f4` functions like the ones above that don’t lose the last byte?

TL;DR: I need HLSL functions that convert between a 32-bit float and a float4 of four 8-bit values, in both directions. Windows and DirectX only.
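The `asfloat`/`asuint` route reinterprets the 32 bits instead of encoding a number, so all four bytes are available. Below is a C model of that idea, assuming the packed value is only ever written and read back without arithmetic: `as_float` and `as_uint` stand in for HLSL's `asfloat` and `asuint`, and `pack4_bits`, `unpack4_bits` and `pattern_is_fragile` are hypothetical names. In HLSL the packing would use the same shifts on `uint` values. One caveat worth checking: some byte combinations form NaN or denormal bit patterns, which a GPU may flush or alter in float arithmetic, blending or filtering, so blending should stay off and sampling should be point-only; an integer render target such as `DXGI_FORMAT_R32_UINT` may be a safer home for such data.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

/* C stand-ins for HLSL's asfloat(uint) and asuint(float): copy the 32 bits
   without any numeric conversion. */
static float as_float(uint32_t u)
{
    float f;
    memcpy(&f, &u, sizeof f);
    return f;
}

static uint32_t as_uint(float f)
{
    uint32_t u;
    memcpy(&u, &f, sizeof u);
    return u;
}

/* Pack four [0,1] channels as bytes into one 32-bit pattern, c[0] in the top byte. */
static float pack4_bits(const float c[4])
{
    uint32_t u = 0;
    for (int i = 0; i < 4; ++i) {
        assert(c[i] >= 0.0f && c[i] <= 1.0f);
        u = (u << 8) | (uint32_t)(c[i] * 255.0f + 0.5f);
    }
    return as_float(u);
}

/* Unpack the pattern back into four [0,1] channels. */
static void unpack4_bits(float f, float out[4])
{
    uint32_t u = as_uint(f);
    for (int i = 3; i >= 0; --i) {
        out[i] = (float)(u & 0xFFu) / 255.0f;
        u >>= 8;
    }
}

/* Non-zero if the pattern is a NaN (exponent all ones) or a denormal
   (exponent all zeros), i.e. one that float hardware may not preserve. */
static int pattern_is_fragile(uint32_t u)
{
    uint32_t exponent = (u >> 23) & 0xFFu;
    uint32_t mantissa = u & 0x7FFFFFu;
    return mantissa != 0u && (exponent == 0xFFu || exponent == 0u);
}
```

For example, channels (1.0, 0.0, 0.5, 64/255) pack to the bit pattern 0xFF008040, a normal float that round-trips exactly, while a pattern such as 0x7FC00000 is a NaN and is the kind of value that may not survive the pipeline.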