This is probably a stupid question, but does anyone know of a way to make MonoGame/XNA draw polygons rather than triangles?

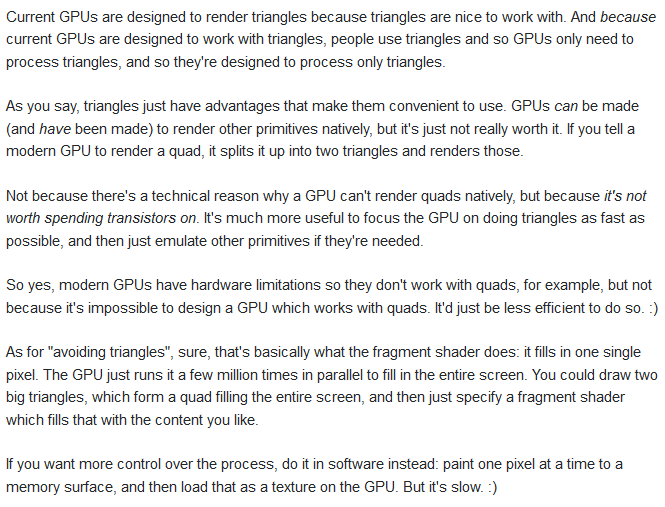

Right now the only way I have found to draw a square is as 2 triangles (a quad), which takes 4 vertices and 6 indices.

I would like to have just 4 vertices and 4 indices (a square).
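For reference, here is the indexed quad from the previous paragraph as a minimal sketch. It is written in Python purely to show the data layout; the variable names are hypothetical and are not MonoGame API. The two triangles share corners 0 and 2, so the vertex list stays at 4 entries and only the index list grows to 6:

```python
# Hypothetical illustration of an indexed quad's data layout (not MonoGame API).
# Corner positions (x, y, z) of a unit square, listed in order around the square.
vertices = [
    (0.0, 0.0, 0.0),  # 0: top-left
    (1.0, 0.0, 0.0),  # 1: top-right
    (1.0, 1.0, 0.0),  # 2: bottom-right
    (0.0, 1.0, 0.0),  # 3: bottom-left
]

# Two triangles, (0, 1, 2) and (0, 2, 3); corners 0 and 2 are reused by both.
indices = [0, 1, 2, 0, 2, 3]

# Group the flat index list into triangles to show what each triple refers to.
triangles = [tuple(indices[i:i + 3]) for i in range(0, len(indices), 3)]
print(len(vertices), len(indices), triangles)
```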

This probably doesn't seem like a big deal to most people, but with 6 indices per quad instead of 4 per square, the difference adds up: I could fit substantially more objects on screen, or get better performance with the same number of objects.

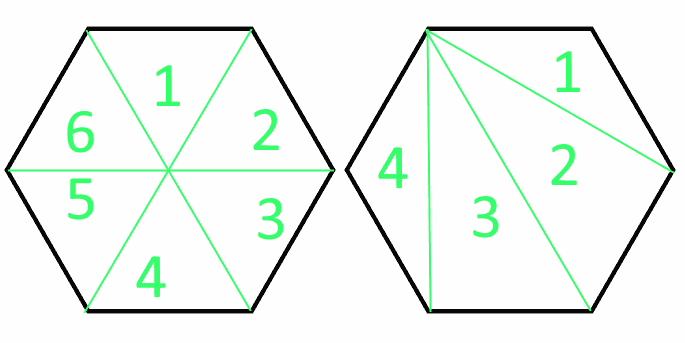

I know you can do this in other frameworks. For example, I have drawn hexagons as a single polygon rather than as several triangles.
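A minimal sketch of one common way to express a convex polygon such as a hexagon as an indexed triangle list: a fan from one corner, which gives n - 2 triangles for n corners. The helpers `regular_polygon` and `fan_indices` are hypothetical names, the code is Python for illustration only, and this is not necessarily what the other framework does internally:

```python
import math

def regular_polygon(n, radius=1.0):
    """Corner positions (x, y, z) of a regular n-gon centred on the origin."""
    return [(radius * math.cos(2 * math.pi * k / n),
             radius * math.sin(2 * math.pi * k / n),
             0.0)
            for k in range(n)]

def fan_indices(n):
    """Triangle-list indices that fan a convex n-gon out from corner 0.

    Produces n - 2 triangles, i.e. 3 * (n - 2) indices.
    """
    indices = []
    for i in range(1, n - 1):
        indices += [0, i, i + 1]
    return indices

hexagon = regular_polygon(6)
hex_indices = fan_indices(6)
print(len(hexagon), len(hex_indices))
print(hex_indices)
```

With n = 4, `fan_indices` yields `[0, 1, 2, 0, 2, 3]`, the same 6 indices as the quad above.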

There is a workaround: export a square as an .fbx with 4 edges instead of 5. However, I would like to be able to do this with procedural generation and with in-game model editing.
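For the procedural case, a minimal sketch (Python, with a hypothetical `build_grid` helper and position-only vertices assumed) of generating a flat grid of quads in which neighbouring quads share their corner vertices. The vertex count grows as (cols + 1) x (rows + 1), while the index count is always 6 per quad:

```python
def build_grid(cols, rows, size=1.0):
    """Return (vertices, indices) for a flat cols x rows grid of quads.

    Hypothetical helper: adjacent quads share corner vertices,
    and each quad contributes two triangles (6 indices).
    """
    vertices = [(x * size, y * size, 0.0)
                for y in range(rows + 1)
                for x in range(cols + 1)]
    stride = cols + 1  # number of vertices in one row
    indices = []
    for y in range(rows):
        for x in range(cols):
            tl = y * stride + x  # top-left corner of this quad
            tr = tl + 1          # top-right
            bl = tl + stride     # bottom-left
            br = bl + 1          # bottom-right
            indices += [tl, tr, br, tl, br, bl]
    return vertices, indices

verts, idx = build_grid(2, 2)
print(len(verts), len(idx))
```

For a 2 x 2 grid this gives 9 shared vertices and 24 indices.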