I'm seeing something I really don't understand with my shader. When I add a normal to my custom vertex data structure and send it to the .fx shader shown below (which doesn't really do anything yet), I get problems.

```hlsl
//_______________________________
// PNCMT PosNormalColorMultiTexture fx

#if OPENGL
#define SV_POSITION POSITION
#define VS_SHADERMODEL vs_3_0
#define PS_SHADERMODEL ps_3_0
#else
#define VS_SHADERMODEL vs_4_0_level_9_1
#define PS_SHADERMODEL ps_4_0_level_9_1
#endif

float4x4 gworldviewprojection;

Texture2D TextureA;
sampler2D TextureSamplerA = sampler_state
{
    Texture = <TextureA>;
};

Texture2D TextureB;
sampler2D TextureSamplerB = sampler_state
{
    Texture = <TextureB>;
};

struct VertexShaderInput
{
    float4 Position : POSITION0;
    float4 Normal : NORMAL0;
    float4 Color : COLOR0;
    float2 TexureCoordinateA : TEXCOORD0;
    float2 TexureCoordinateB : TEXCOORD1;
};

struct VertexShaderOutput
{
    float4 Position : SV_Position;
    float4 Normal : NORMAL0;
    float4 Color : COLOR0;
    float2 TexureCoordinateA : TEXCOORD0;
    float2 TexureCoordinateB : TEXCOORD1;
};

struct PixelShaderOutput
{
    float4 Color : COLOR0;
};

VertexShaderOutput VertexShaderFunction(VertexShaderInput input)
{
    VertexShaderOutput output;
    output.Position = mul(input.Position, gworldviewprojection);
    output.Normal = input.Normal;
    output.Color = input.Color;
    output.TexureCoordinateA = input.TexureCoordinateA;
    output.TexureCoordinateB = input.TexureCoordinateB;
    return output;
}

PixelShaderOutput PixelShaderFunction(VertexShaderOutput input)
{
    PixelShaderOutput output;

    // test
    float4 A = tex2D(TextureSamplerA, input.TexureCoordinateA) * input.Color;
    float4 B = tex2D(TextureSamplerB, input.TexureCoordinateA) * input.Color;
    A.a = B.a;
    float4 C = tex2D(TextureSamplerA, input.TexureCoordinateB) * input.Color;
    float4 D = tex2D(TextureSamplerB, input.TexureCoordinateB) * input.Color;
    if (C.a < D.a) A.b = .99;

    // normal use it to get the shader working
    float4 norm = input.Normal;
    //if (norm.x >0) A.b = .99;

    output.Color = A;
    //
    return output;
}

technique BlankTechniqueA
{
    pass
    {
        VertexShader = compile VS_SHADERMODEL VertexShaderFunction();
        PixelShader = compile PS_SHADERMODEL PixelShaderFunction();
    }
}
```

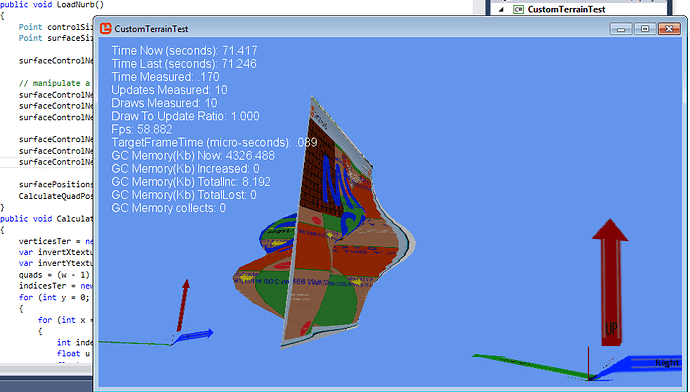

OK, to explain this problem clearly, I'll show a couple of screenshots.

Here is a vertex structure without the normal (position, color, and two sets of texture coordinates):

```csharp
public struct VertexPositionColorUvTexture : IVertexType
{
    public Vector3 Position;          // 12 bytes
    public Color Color;               // 4 bytes
    public Vector2 TextureCoordinate; // 8 bytes
    public Vector2 WhichTexture;      // 8 bytes

    public static int currentByteSize = 0;

    public static int Offset(float n) { var s = sizeof(float); currentByteSize += s; return currentByteSize - s; }
    public static int Offset(Vector2 n) { var s = sizeof(float) * 2; currentByteSize += s; return currentByteSize - s; }
    public static int Offset(Color n) { var s = sizeof(int); currentByteSize += s; return currentByteSize - s; }
    public static int Offset(Vector3 n) { var s = sizeof(float) * 3; currentByteSize += s; return currentByteSize - s; }
    public static int Offset(Vector4 n) { var s = sizeof(float) * 4; currentByteSize += s; return currentByteSize - s; }

    public static VertexDeclaration VertexDeclaration = new VertexDeclaration
    (
        new VertexElement(Offset(Vector3.Zero), VertexElementFormat.Vector3, VertexElementUsage.Position, 0),
        new VertexElement(Offset(Color.White), VertexElementFormat.Color, VertexElementUsage.Color, 0),
        new VertexElement(Offset(Vector2.Zero), VertexElementFormat.Vector2, VertexElementUsage.TextureCoordinate, 0),
        new VertexElement(Offset(Vector2.Zero), VertexElementFormat.Vector2, VertexElementUsage.TextureCoordinate, 1)
    );

    VertexDeclaration IVertexType.VertexDeclaration { get { return VertexDeclaration; } }
}
```

Passing that structure to the same .fx shader with the draw call below works fine:

```csharp
public void DrawCustomVertices()
{
    SimplePNCT.Parameters["gworldviewprojection"].SetValue(worldviewprojection);

    foreach (EffectPass pass in SimplePNCT.CurrentTechnique.Passes)
    {
        pass.Apply();
        GraphicsDevice.DrawUserIndexedPrimitives(
            PrimitiveType.TriangleList,
            verticesTer, 0,
            2,
            indicesTer, 0,
            quads * 2,
            VertexPositionColorUvTexture.VertexDeclaration
        );
    }
}
```

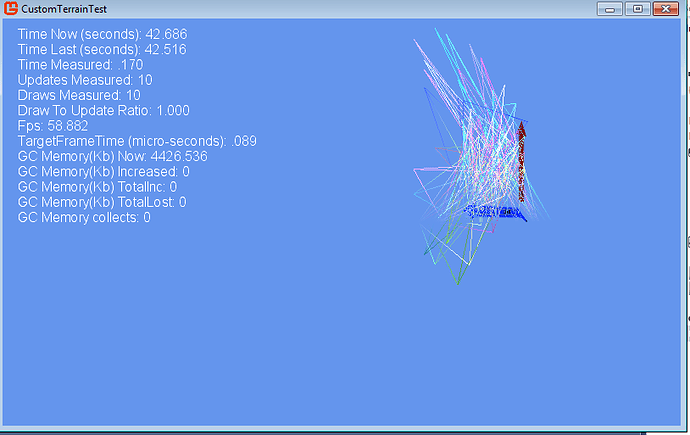

That produces the results shown here.

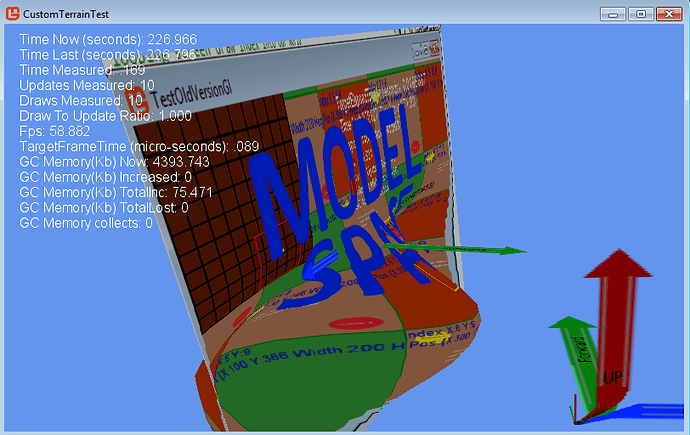

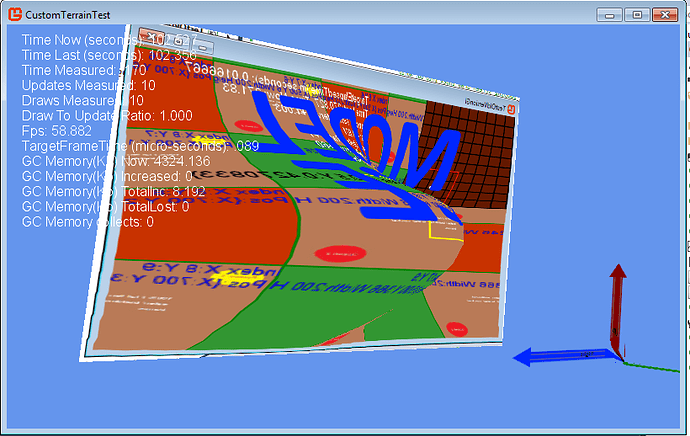

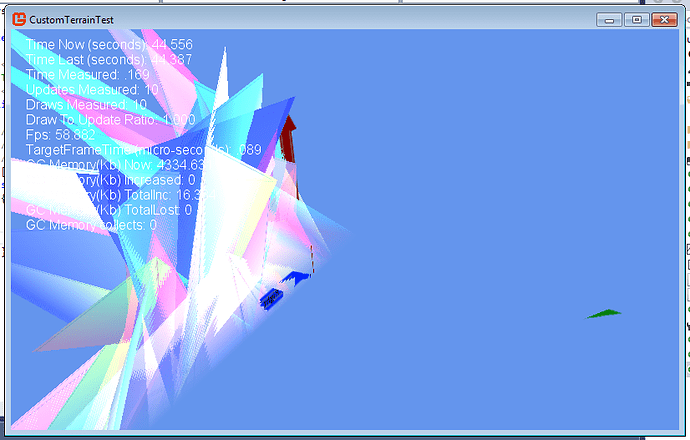

However, here is the strange part.

If I use the same shader and the same draw call, changing only the vertex format passed in to the one below, it goes haywire:

```csharp
public struct PositionNormalColorUvMultiTexture : IVertexType
{
    public Vector3 Position;
    public Vector3 Normal;
    public Color Color;
    public Vector2 TextureCoordinateA;
    public Vector2 TextureCoordinateB;

    public static int SizeInBytes = (3 + 3 + 1 + 2 + 2) * sizeof(float);

    public static VertexDeclaration VertexDeclaration = new VertexDeclaration
    (
        new VertexElement(0, VertexElementFormat.Vector3, VertexElementUsage.Position, 0),
        new VertexElement(sizeof(float) * 3, VertexElementFormat.Vector3, VertexElementUsage.Normal, 0),
        new VertexElement(sizeof(int) * 1, VertexElementFormat.Color, VertexElementUsage.Color, 0),
        new VertexElement(sizeof(float) * 2, VertexElementFormat.Vector2, VertexElementUsage.TextureCoordinate, 0),
        new VertexElement(sizeof(float) * 2, VertexElementFormat.Vector2, VertexElementUsage.TextureCoordinate, 1)
    );

    VertexDeclaration IVertexType.VertexDeclaration { get { return VertexDeclaration; } }
}
```
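Each `VertexElement` offset is meant to be the byte position of that field measured from the start of the vertex. One way to check what those positions are is to let the runtime report the struct layout. The sketch below is a console-only stand-in, not the MonoGame type: `LayoutProbe` and `LayoutReport` are hypothetical names, `System.Numerics` vectors replace the MonoGame ones, and a `uint` stands in for the 4-byte packed `Color`, keeping the same field order.

```csharp
using System;
using System.Numerics;
using System.Runtime.InteropServices;

// Hypothetical stand-in for PositionNormalColorUvMultiTexture.
// System.Numerics vectors replace MonoGame's, and a uint replaces the
// 4-byte packed Color (assumption); field order matches the original.
[StructLayout(LayoutKind.Sequential)]
public struct LayoutProbe
{
    public Vector3 Position;
    public Vector3 Normal;
    public uint Color;
    public Vector2 TextureCoordinateA;
    public Vector2 TextureCoordinateB;
}

public static class LayoutReport
{
    // Byte offset of a field from the start of the struct, as marshaled.
    public static int OffsetOf(string fieldName) =>
        Marshal.OffsetOf<LayoutProbe>(fieldName).ToInt32();

    // Total marshaled size of one vertex, i.e. the stride.
    public static int Size => Marshal.SizeOf<LayoutProbe>();

    // Writes each field's offset and the total size to the console.
    public static void Print()
    {
        string[] fields = { "Position", "Normal", "Color", "TextureCoordinateA", "TextureCoordinateB" };
        foreach (string name in fields)
            Console.WriteLine($"{name}: {OffsetOf(name)}");
        Console.WriteLine($"Size: {Size}");
    }
}
```

With every field 4-byte aligned there is no padding, so `LayoutReport.Print()` should list offsets 0, 12, 24, 28 and 36 with a size of 44; those are the numbers a declaration for this field order has to use.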

Even though I'm not really using the normal at all (I'm just pulling the normal data into the shader), the output suddenly looks like scrambled eggs.

Does anyone have any idea why I'd get that?
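Comparing the posted declaration with its field list, the offsets passed to `VertexElement` look like per-field sizes (`sizeof(int) * 1`, `sizeof(float) * 2`) rather than positions from the start of the vertex. That would place Color at byte 4 and both texture coordinates at byte 8, overlapping Position and Normal, which is a plausible cause of the scrambling. A sketch of the same declaration with running-total offsets follows; the name `PositionNormalColorUvMultiTextureFixed` is hypothetical, and it uses the same MonoGame `VertexElement(offset, format, usage, usageIndex)` constructor as the original.

```csharp
using Microsoft.Xna.Framework;
using Microsoft.Xna.Framework.Graphics;

// Hypothetical corrected copy of PositionNormalColorUvMultiTexture.
// Each offset is the sum of the sizes of all fields declared before it.
public struct PositionNormalColorUvMultiTextureFixed : IVertexType
{
    public Vector3 Position;           // offset  0, 12 bytes
    public Vector3 Normal;             // offset 12, 12 bytes
    public Color Color;                // offset 24,  4 bytes
    public Vector2 TextureCoordinateA; // offset 28,  8 bytes
    public Vector2 TextureCoordinateB; // offset 36,  8 bytes (stride 44)

    public static readonly VertexDeclaration VertexDeclaration = new VertexDeclaration(
        new VertexElement(0, VertexElementFormat.Vector3, VertexElementUsage.Position, 0),
        new VertexElement(12, VertexElementFormat.Vector3, VertexElementUsage.Normal, 0),
        new VertexElement(24, VertexElementFormat.Color, VertexElementUsage.Color, 0),
        new VertexElement(28, VertexElementFormat.Vector2, VertexElementUsage.TextureCoordinate, 0),
        new VertexElement(36, VertexElementFormat.Vector2, VertexElementUsage.TextureCoordinate, 1)
    );

    VertexDeclaration IVertexType.VertexDeclaration => VertexDeclaration;
}
```

Literal offsets keep the layout easy to audit; a running counter like the `Offset` helpers in `VertexPositionColorUvTexture` would also work, as long as each call advances by the size of the element it describes. The existing `SizeInBytes` value of 44 already matches this stride.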
