Tried to add SSAO to my deferred renderer. Should have been trivial, but nothing I do works

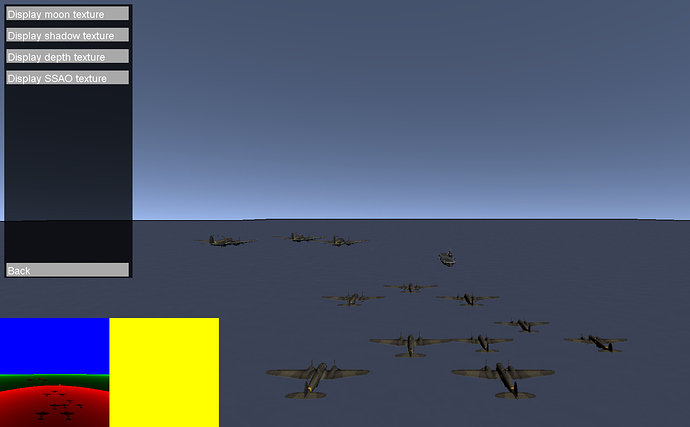

At first I thought it was a depth buffer precision issue, so I switched from a logarithmic depth buffer to a linear one. I checked that this worked by dumping the depth buffer values to the log, and they’re perfect.

I can see that the depth varies from pixel to pixel. Not by a lot, but by enough that SSAO should work.

So I ripped the shader apart and went down to a really trivial version that just samples 16 pixels around the target pixel and counts how many of those samples are in front of the target pixel in depth.

Doesn’t work.

I get nothing.

The shader is now as simple as it can be. It won’t generate good-looking SSAO, but it should produce SOMETHING:

```hlsl
///////////////////////////////////////////////////////////////////////////////////////
// Pixel shaders
///////////////////////////////////////////////////////////////////////////////////////

// depthSampler, sample_sphere and halfPixel are declared elsewhere in the effect (not shown).
float4 MainPS(VertexShaderOutput input) : COLOR
{
    //float3 random = normalize(tex2D(RandomTextureSampler, input.UV * 4.0).rgb);
    float depth = tex2D(depthSampler, input.UV).r;
    //float3 position = float3(input.UV, depth);
    //float3 normal = normal_from_depth(input.UV);
    //float radius_depth = radius / depth;
    //float occlusion = 0.0;

    // Start at 16 (fully unoccluded) and subtract one for every sample
    // whose depth is smaller than this pixel's depth.
    float diff = 16.0;

    [unroll]
    for (int i = 0; i < 16; i++)
    {
        // Offset the UV by sample_sphere[i].xy scaled by 8 half-pixels.
        float2 sp = sample_sphere[i].xy * 8 * halfPixel;
        float occ_depth = tex2D(depthSampler, input.UV + sp).r;

        //float3 ray = radius_depth * reflect(sample_sphere[i], random);
        //float3 hemi_ray = position + sign(dot(ray,normal)) * ray;
        //
        //float occ_depth = tex2D(depthSampler, saturate(hemi_ray.xy)).r;
        //float difference = (depth - occ_depth);

        if (depth > occ_depth)
            diff = diff - 1;

        //occlusion += step(falloff, difference) * (1.0 - smoothstep(falloff, area, difference));
    }

    //float ao = 1.0 - total_strength * occlusion * (1.0 / samples);
    //float res = saturate(ao + base);

    // Fraction of samples that were NOT in front of this pixel.
    float res = saturate(diff / 16.0f);
    return float4(res, res, res, 1.0);
    //return float4(debug_depth(depth), 1.0);
}
```
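For comparison with a CPU dump, here is a minimal Python/NumPy mirror of the trivial shader loop above. It assumes linear depth that grows with distance, point sampling with clamp addressing, and `halfPixel = 0.5 / (width, height)`; `SAMPLE_XY` is a hypothetical stand-in for `sample_sphere[i].xy` (16 unit directions on a circle), not the actual kernel from the effect.

```python
import numpy as np

# Hypothetical stand-in for sample_sphere[i].xy: 16 unit directions on a circle.
_angles = np.linspace(0.0, 2.0 * np.pi, 16, endpoint=False)
SAMPLE_XY = np.stack([np.cos(_angles), np.sin(_angles)], axis=1)


def trivial_ao(depth, sample_xy, half_pixel):
    """CPU mirror of the 16-sample shader.

    depth:      (H, W) array of linear depth (assumed: larger = farther).
    sample_xy:  (N, 2) array of offsets, like sample_sphere[i].xy.
    half_pixel: (hx, hy) in UV units, assumed to be 0.5 / (W, H).
    Returns an (H, W) array: fraction of samples NOT in front of each pixel.
    """
    h, w = depth.shape
    n = len(sample_xy)
    # UV coordinate of each texel centre.
    ys, xs = np.mgrid[0:h, 0:w]
    u = (xs + 0.5) / w
    v = (ys + 0.5) / h
    count = np.full(depth.shape, float(n))  # same role as `diff` in the shader
    for sx, sy in sample_xy:
        # Same offset as the shader: sample.xy * 8 * halfPixel.
        su = u + sx * 8 * half_pixel[0]
        sv = v + sy * 8 * half_pixel[1]
        # Point sampling with clamp addressing (assumption).
        px = np.clip(np.floor(su * w).astype(int), 0, w - 1)
        py = np.clip(np.floor(sv * h).astype(int), 0, h - 1)
        occ_depth = depth[py, px]
        # Subtract one wherever the sample is closer than the centre pixel.
        count -= (depth > occ_depth).astype(float)
    return np.clip(count / n, 0.0, 1.0)
```

Running this on the same depth dump used for the C# test gives a per-pixel reference to compare against the shader output. It also makes one property of the loop easy to see: if the offsets come out as zero (for example `half_pixel` of `(0, 0)` or all-zero sample vectors), every sample reads the centre texel, `depth > occ_depth` is never true, and the result is uniformly 1.0 (plain white).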

I can’t get the content builder to generate debug shaders (it just crashes), so I can’t debug the shader itself.

I have checked many times that the depth buffer is correct, and I even did a pass where I dumped the depth buffer and ran the SSAO in C# instead of in the shader, which produced the expected awful results.

The difference between adjacent pixels in the depth buffer is in the centimetre range (0.01 to 0.09), but that should be enough for the shader to work.
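Whether a 0.01 to 0.09 difference survives on the GPU depends on the storage format of the depth render target, which the post doesn’t state. As a hedged sanity check, assuming the target is either a 32-bit or a 16-bit float format, the sketch below uses NumPy to show the gap between representable values at a given depth. The depths used (5 and 100 units) are purely illustrative.

```python
import numpy as np


def smallest_step(depth_value, dtype):
    """Gap between depth_value and the next representable value in dtype."""
    return float(np.spacing(dtype(depth_value)))


def difference_survives(depth_a, depth_b, dtype):
    """True if two depths still differ after being stored as dtype."""
    return bool(dtype(depth_a) != dtype(depth_b))


for fmt in (np.float32, np.float16):
    for d in (5.0, 100.0):
        print(fmt.__name__, d, smallest_step(d, fmt),
              difference_survives(d, d + 0.01, fmt))
```

At a depth of 100, a 16-bit float can only represent steps of 0.0625, so a 0.01 difference rounds away, while a 32-bit float keeps it easily. At a depth of 5 the 16-bit step is about 0.0039 and the difference survives.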

Has anyone got any ideas on how I can continue?

It’s doing my head in