I’m a bit confused by this. I’ve created a simple landscape using a simplex noise function and a triangle list of VertexPositionNormalTexture vertices. I use a BasicEffect with default lighting, just to give me a basic shader.

Here is the body of my draw method:

    foreach (var pass in effect.CurrentTechnique.Passes)
    {
        pass.Apply();
        graphics.GraphicsDevice.DrawUserPrimitives(PrimitiveType.TriangleList, verts, 0, verts.Length/3);
    }

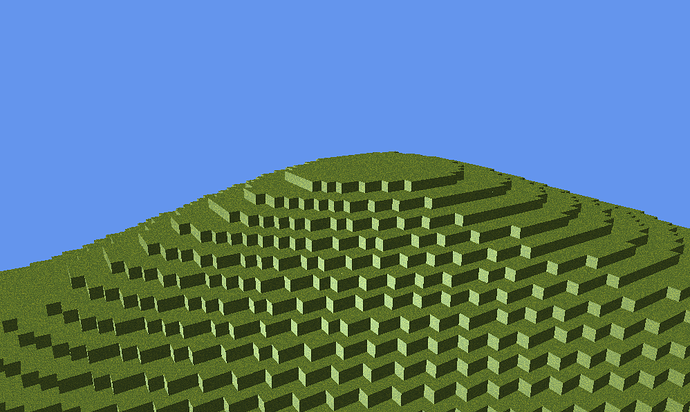

So far, everything looks good.

I want to add a crosshair, and assumed I could simply draw a texture using a SpriteBatch, so now my draw method is:

    foreach (var pass in effect.CurrentTechnique.Passes)
    {
        pass.Apply();
        graphics.GraphicsDevice.DrawUserPrimitives(PrimitiveType.TriangleList, verts, 0, verts.Length/3);
    }

    spriteBatch.Begin();
    spriteBatch.Draw(crosshair, new Vector2(graphics.PreferredBackBufferWidth/2 - crosshair.Width/2,
        graphics.PreferredBackBufferHeight/2 - crosshair.Height/2), Color.White);
    spriteBatch.End();

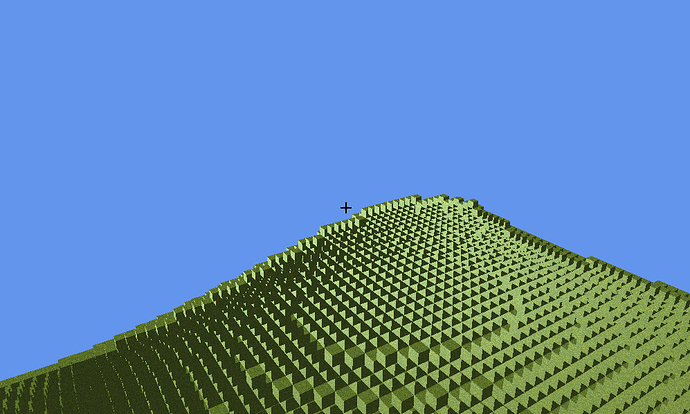

For some reason, this causes my vertices not to render properly - some of the faces of my blocks are missing:

I’m sure there’s a logical reason for this. My understanding is that SpriteBatch actually renders the texture in 3D, which I guess is interfering with my blocks somehow, but I can’t figure out why only some surfaces are disappearing. It seems to be affecting the normals, which determine how the shader lights the blocks, because if you rotate the view the blocks display correctly from some sides.

Does anyone know what I’m misunderstanding here?