Hello,

I need help with my shader. For some reason it doesn't work on Intel and AMD integrated GPUs.

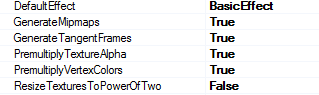

To make my shader I use the default MonoGame template.

Vertex Shader:

    VSOutputPixelLightingTx VSBasicPixelLightingTx(VSInputNmTx vin) {
        VSOutputPixelLightingTx vout;
        CommonVSOutputPixelLighting cout = ComputeCommonVSOutputPixelLighting(vin.Position, vin.Normal, vin.Tangent, vin.Binormal);
        SetCommonVSOutputParamsPixelLighting;
        vout.Diffuse = float4(1, 1, 1, DiffuseColor.a);
        vout.TexCoord = vin.TexCoord;
        return vout;
    }

Pixel Shader:

    float4 PSBasicPixelLightingTx(
        float4 PositionPS : SV_Position,
        float2 TexCoord : TEXCOORD0,
        float4 PositionWS : TEXCOORD1,
        float3 NormalWS : TEXCOORD2,
        float3 TangentWS : TEXCOORD3,
        float3 BinormalWS : TEXCOORD4,
        float4 Diffuse : COLOR0
    ) : SV_Target0 {
        float4 color = SAMPLE_TEXTURE(Texture, TexCoord) * Diffuse;
        float3 eyeVector = normalize(EyePosition - PositionWS.xyz);
        clip(color.a - .001);
        float4 bump = SAMPLE_TEXTURE(NormalMap, TexCoord) - float4(0.5, 0.5, 0.5, 0);
        float3 bumpNormal = NormalWS + (bump.x * TangentWS + bump.y * BinormalWS);
        bumpNormal = normalize(bumpNormal);
        ColorPair lightResult = ComputeLights(eyeVector, bumpNormal, 3);
        color.rgb *= lightResult.Diffuse;
        AddSpecular(color, lightResult.Specular);
        ApplyFog(color, PositionWS.w);
        return color;
    }

The VSOutputPixelLightingTx struct:

    struct VSOutputPixelLightingTx {
        float4 PositionPS : SV_Position;
        float2 TexCoord : TEXCOORD0;
        float4 PositionWS : TEXCOORD1;
        float3 NormalWS : TEXCOORD2;
        float3 TangentWS : TEXCOORD3;
        float3 BinormalWS : TEXCOORD4;
        float4 Diffuse : COLOR0;
    };

I use vs_3_0 and ps_3_0 to compile them.
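
For context, this is roughly how those two profiles get wired into a technique. It is only a minimal sketch with placeholder shaders, not the actual code: `SketchVS`, `SketchPS`, `SketchTechnique` and `WorldViewProj` are hypothetical names chosen for illustration.

```hlsl
// Hypothetical parameter: combined world * view * projection matrix.
float4x4 WorldViewProj;

// Data passed from the placeholder vertex shader to the rasterizer.
struct SketchVSOutput
{
    float4 Position : POSITION0;
    float2 TexCoord : TEXCOORD0;
};

// Placeholder vertex shader: transforms the position, forwards the UVs.
SketchVSOutput SketchVS(float4 position : POSITION0, float2 texCoord : TEXCOORD0)
{
    SketchVSOutput output;
    output.Position = mul(position, WorldViewProj);
    output.TexCoord = texCoord;
    return output;
}

// Placeholder pixel shader: writes the UVs out as a colour.
float4 SketchPS(float2 texCoord : TEXCOORD0) : COLOR0
{
    return float4(texCoord, 0, 1);
}

// The "compile <profile>" statements are where vs_3_0 and ps_3_0 are selected.
technique SketchTechnique
{
    pass P0
    {
        VertexShader = compile vs_3_0 SketchVS();
        PixelShader = compile ps_3_0 SketchPS();
    }
}
```

In the real effect, the two entry points would be `VSBasicPixelLightingTx` and `PSBasicPixelLightingTx` from the listings above.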

Result on NVIDIA and AMD dedicated cards:

Result on Intel and AMD integrated chips:

Over the last two days I have tried every solution I could find online, without success.

I think I am doing something wrong…

You can download my complete shader here: https://drive.google.com/file/d/0BzptdjKIFeflV0g4SVBNY3JJRVk/view?usp=sharing