Happy New Year, everyone! I’ve been bashing my head against the wall for the past few days trying to optimize my 2D top-down game.

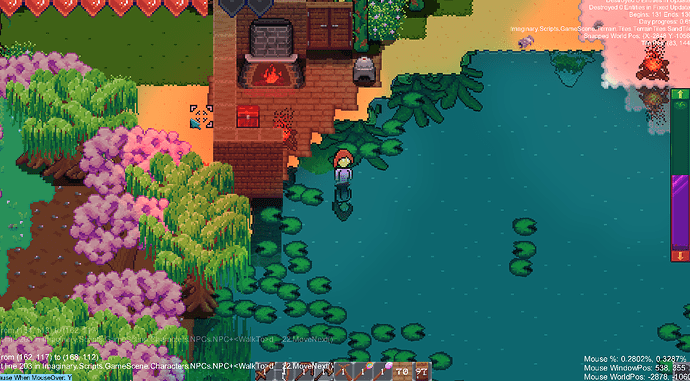

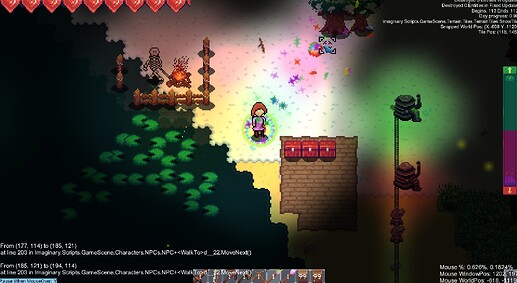

The game is quite complex and has 12 different effects/shaders, such as reflection, wave, and masking.

I know I shouldn’t optimize unless I have to, but the game’s performance has been quite bad for a while now (when there’s more on screen, it drops to ~20-30 fps). So I did some research and learned about reducing batches.

I already spent some time reworking my framework/engine a few days ago. In particular, I created an automatic Texture Atlas packer that packs all my sprites when the game initializes, and so far I haven’t run into any problems with it. But as you may be able to see from the debug thingy in the top-right corner, I call SpriteBatch.Begin() 130 times per frame when I’m next to lots of water, because I need to maintain the draw order of tiles and render reflections and waves for water tiles. 130+ batches per frame is obviously VERY VERY bad, even though most of them are Deferred.
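To illustrate why keeping draw order multiplies the number of Begin()/End() pairs, here is a small Python model (not MonoGame code). It assumes that a new batch starts whenever two sprites that are adjacent in draw order need different render state; the `Sprite` type and the state strings are hypothetical stand-ins for "which effect and parameters this sprite needs".

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Sprite:
    name: str
    state: str  # hypothetical render state: which effect/parameters the sprite needs


def count_batches(sprites: List[Sprite]) -> int:
    """Count batches for a draw-ordered list: a batch breaks on every state change."""
    batches = 0
    previous = None
    for sprite in sprites:
        if sprite.state != previous:
            batches += 1
            previous = sprite.state
    return batches


# A row of tiles where plain ground alternates with water (reflection, then wave).
row = []
for i in range(10):
    row.append(Sprite(f"ground{i}", "default"))
    row.append(Sprite(f"water{i}-reflection", "reflection"))
    row.append(Sprite(f"water{i}-wave", "wave"))

print(count_batches(row))  # 30: every sprite changes state

# If a single 'master' effect picks the effect per sprite from vertex data,
# all sprites share one batch state and draw order no longer forces breaks.
merged = [Sprite(s.name, "master") for s in row]
print(count_batches(merged))  # 1
```

Under this model, the batch count depends on how often the state changes along the draw order, not on how many sprites there are, which is why moving the per-sprite choice into the vertices is what collapses the count.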

So I did more digging. I am currently creating a ‘master’ effect. It will contain all 12 effects (including the default, no-effect shader) and have a float parameter that selects which specific effect (reflection, wave, masking, etc.) to use.

That part is easy, but I realized that for it to work, I must call SpriteBatch.Begin() with the Immediate sort mode instead of the much faster Deferred, which means even more batches than the current 130. Even though there would only ever be one effect, I would need to apply different parameters for each Draw() call, and I believe Deferred mode only uses whatever parameters are applied when I call SpriteBatch.End(). After more digging, I found a few posts like this one saying that the Tint parameter passed into each Draw() call is ‘remembered/stored’ for each vertex, which is exactly what I need.
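The Deferred vs Immediate behaviour described above can be modelled roughly as follows. This is a toy Python model, not the MonoGame API: `ToySpriteBatch`, `effect_param`, and `run` are hypothetical names. It assumes that Deferred mode records only per-sprite vertex data (such as the tint) at Draw() time and submits everything at End(), while Immediate mode submits each sprite as it is drawn.

```python
from typing import List, Tuple

Tint = Tuple[int, int, int, int]


class ToySpriteBatch:
    """Toy model of Deferred vs Immediate sprite batching (not the MonoGame API)."""

    def __init__(self, immediate: bool) -> None:
        self.immediate = immediate
        self.effect_param = 0.0  # shared effect parameter, like a shader uniform
        self.queue: List[Tuple[str, Tint]] = []
        self.submitted: List[Tuple[str, Tint, float]] = []

    def draw(self, name: str, tint: Tint) -> None:
        if self.immediate:
            # Immediate: submitted right away, with the parameter's current value.
            self.submitted.append((name, tint, self.effect_param))
        else:
            # Deferred: only per-sprite data (which ends up in the vertices) is recorded.
            self.queue.append((name, tint))

    def end(self) -> None:
        # Deferred: everything is submitted here, so every queued sprite sees
        # the parameter's final value, while each still keeps its own tint.
        for name, tint in self.queue:
            self.submitted.append((name, tint, self.effect_param))
        self.queue.clear()


def run(immediate: bool) -> List[Tuple[str, Tint, float]]:
    batch = ToySpriteBatch(immediate)
    batch.effect_param = 1.0  # e.g. 'reflection'
    batch.draw("water", (255, 0, 0, 255))
    batch.effect_param = 2.0  # e.g. 'wave'
    batch.draw("ground", (0, 255, 0, 255))
    batch.end()
    return batch.submitted


print(run(immediate=False))  # both sprites use 2.0, but keep their own tints
print(run(immediate=True))   # 'water' uses 1.0, 'ground' uses 2.0
```

In this model, per-sprite information survives Deferred mode only if it travels with the vertices, which is why the tint is the natural place to put it.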

So my question is, is there a way for me to store more information than just a 4-byte Tint per Draw() (per vertex)? And if so, how can I receive it in the shader?
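Within the 4-byte limit, one option is to treat the tint as data rather than as a color. The Python sketch below stands in for the C#/HLSL side: it packs a hypothetical effect index into the alpha byte and three 8-bit parameters into R, G, B, then decodes them the way a pixel shader would receive an 8-bit normalized vertex color (floats in [0, 1]). The byte layout, the function names, and the `strength`/`speed` labels are assumptions for illustration. With this scheme, the shader can no longer use the tint for real tinting or transparency unless some bits are reserved for that. If 4 bytes are not enough, my understanding is that MonoGame’s SpriteBatch only builds VertexPositionColorTexture vertices, so more per-vertex data would likely need a custom batcher with its own vertex type.

```python
from typing import Tuple


def pack_tint(effect_index: int, p0: int, p1: int, p2: int) -> Tuple[int, int, int, int]:
    """Pack an effect index and three 8-bit parameters into the 4 tint bytes.

    Hypothetical layout: R, G, B carry effect parameters and A carries the index.
    """
    for value in (effect_index, p0, p1, p2):
        if not 0 <= value <= 255:
            raise ValueError("each field must fit in one byte (0-255)")
    return (p0, p1, p2, effect_index)


def as_shader_sees_it(rgba: Tuple[int, int, int, int]) -> Tuple[float, float, float, float]:
    """An 8-bit normalized vertex color arrives in the shader as floats in [0, 1]."""
    r, g, b, a = (c / 255.0 for c in rgba)
    return (r, g, b, a)


def unpack_in_shader(color: Tuple[float, float, float, float]) -> Tuple[int, float, float, float]:
    """Mirror of the pixel-shader decode.

    In HLSL this would be roughly:
        int effectIndex = (int)round(input.Color.a * 255.0);
    Rounding absorbs small precision error; the four corners of a sprite share
    one tint, so interpolation across the quad should not otherwise change it.
    """
    r, g, b, a = color
    effect_index = int(round(a * 255.0))
    return effect_index, r, g, b


# Hypothetical usage: effect 3 with parameters 'strength' = 128 and 'speed' = 255.
tint = pack_tint(effect_index=3, p0=128, p1=0, p2=255)
index, strength, _, speed = unpack_in_shader(as_shader_sees_it(tint))
print(tint)   # (128, 0, 255, 3)
print(index)  # 3
```

On the C# side, the packed bytes would simply be passed as the Color argument of each Draw() call, so the sprites can stay in a single Deferred batch.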

(If anything I said about SpriteBatch is incorrect, please let me know!)

)