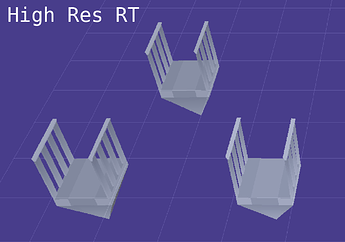

I’m using a `RenderTarget2D` to draw my 3D scene onto a low-resolution texture, and then I draw that RT to the window, scaled up by the largest whole-number multiple that still fits inside the window and positioned within it. This all works great, but there is one issue: the RT seems to be… lower quality? I’ve made sure my RT uses the same format as `GraphicsDevice.PresentationParameters.BackBufferFormat`, but for some reason, whenever I render to the RT, the result seems to have a lower color depth.
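The scaling step described above comes down to a bit of integer arithmetic. Here is a minimal, language-agnostic sketch in Python (not MonoGame code); the function name `integer_scale_rect` is hypothetical, and centering the image in the window is an assumption, since the post only says the RT is "positioned".

```python
def integer_scale_rect(rt_w, rt_h, win_w, win_h):
    """Return (scale, (x, y, w, h)): the largest whole-number scale at which
    an rt_w x rt_h image fits in a win_w x win_h window, and a destination
    rectangle for it.

    Assumption: the scaled image is centered in the window.
    """
    # Floor division gives the biggest whole factor per axis; taking the
    # smaller of the two keeps the entire image on screen.
    # max(1, ...) keeps at least 1x even if the window is smaller than the RT
    # (in that case the image overflows and x/y go negative).
    scale = max(1, min(win_w // rt_w, win_h // rt_h))
    dest_w, dest_h = rt_w * scale, rt_h * scale
    # Center the scaled image; leftover space becomes letterbox/pillarbox bars.
    x = (win_w - dest_w) // 2
    y = (win_h - dest_h) // 2
    return scale, (x, y, dest_w, dest_h)


# A 320x180 RT in a 1366x768 window scales 4x to 1280x720 and is centered.
print(integer_scale_rect(320, 180, 1366, 768))
```

The returned `(x, y, w, h)` tuple corresponds to the destination `Rectangle` you would pass to `SpriteBatch.Draw` when drawing the RT to the back buffer.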

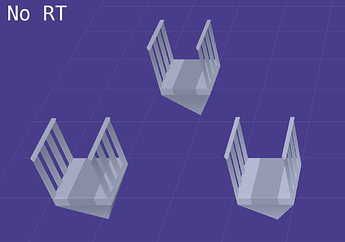

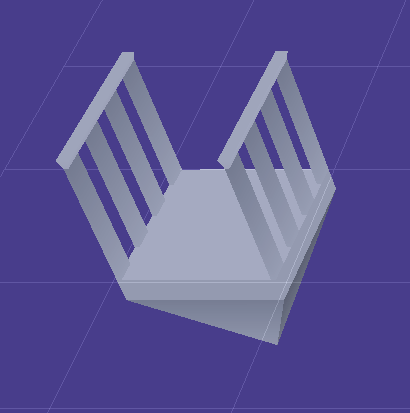

Before:

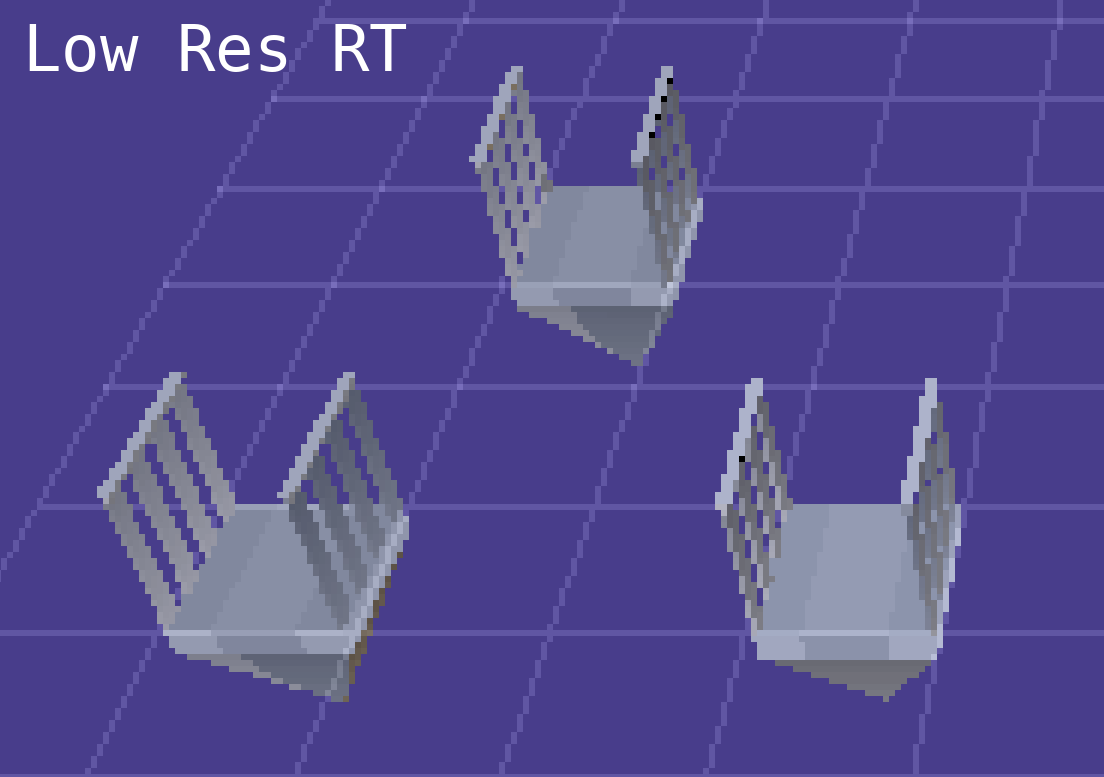

After:

I’m kinda racking my brain wondering if there’s something I’m just not understanding about RenderTargets. I figured FEWER pixels would leave more room for all the colors in the color depth, but no matter how large or small my RT is, it still shows the same banding and low color depth. If I simply DON’T draw to the RT and draw my models directly to the screen instead, it immediately looks as intended, in full color.
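On the idea that fewer pixels leave more room for colors: color depth is a per-pixel property, so the number of shades a channel can hold depends only on its bit count, not on the texture's size. The small Python sketch below assumes an 8-bit-per-channel format for both the RT and the back buffer; the helper names `quantize` and `distinct_levels` are hypothetical. It quantizes a smooth horizontal gradient and counts how many distinct levels survive.

```python
def quantize(value, bits):
    """Map a float in [0.0, 1.0] to the nearest integer level of an
    unsigned-normalized channel with the given bit depth."""
    max_level = (1 << bits) - 1
    # Round half up, like a typical float-to-unorm conversion.
    return int(value * max_level + 0.5)


def distinct_levels(width, bits):
    """Count the distinct channel levels in a 0.0 -> 1.0 gradient that is
    `width` pixels wide and stored at `bits` bits per channel."""
    gradient = [x / (width - 1) for x in range(width)]
    return len({quantize(v, bits) for v in gradient})


# Assumption: 8 bits per channel for both a small RT and a full-size buffer.
print(distinct_levels(320, 8), distinct_levels(1920, 8))
```

Both the 320-pixel-wide and the 1920-pixel-wide gradient end up with exactly 256 levels per channel at 8 bits; shrinking the RT changes how many pixels each shade covers, not how many shades exist.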

If anyone has any idea how to avoid this kind of issue, I’d love to hear it. Also, in case it makes any difference, I’m using an OpenGL project.