Hi all,

I’m ironing out the final problem with my shaders for an Android app. It only appears in OpenGL, and I’m having trouble understanding it.

I want to apply a detail map to my terrain depending on the camera’s distance from each pixel: the closer the camera is to a pixel, the more that pixel should blend with the detail map. I’m calculating the camera distance in the vertex shader, like this:

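    // Transform the vertex into world space
    float3 positionWorld = mul(input.Position, WorldMatrix).xyz;
    // Distance from the camera; interpolated across the triangle before it reaches the pixel shader
    output.CameraDistance = length(CameraPosition - positionWorld);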
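
One way to sanity-check what the vertex shader should be producing is to reproduce the same math on the CPU. The Python sketch below is a minimal illustration, assuming the row-vector convention that `mul(input.Position, WorldMatrix)` implies (as in XNA/MonoGame, where the translation sits in the last row of the world matrix); `mul_row` and `camera_distance` are hypothetical helper names, not part of any library.

```python
import math


def mul_row(v, m):
    """Mirror HLSL mul(rowVector, matrix): result[j] = sum_i v[i] * m[i][j]."""
    return [sum(v[i] * m[i][j] for i in range(4)) for j in range(4)]


def camera_distance(local_pos, world, camera_pos):
    """CPU version of the vertex-shader code:
    positionWorld = mul(input.Position, WorldMatrix).xyz
    CameraDistance = length(CameraPosition - positionWorld)
    """
    x, y, z = local_pos
    world_pos = mul_row([x, y, z, 1.0], world)[:3]  # .xyz, using w = 1
    return math.dist(camera_pos, world_pos)


# Assumed row-vector layout: the translation lives in the last row.
world = [
    [1, 0, 0, 0],
    [0, 1, 0, 0],
    [0, 0, 1, 0],
    [100, 0, 0, 1],  # translate by (100, 0, 0)
]
print(camera_distance((0, 0, 0), world, (100, 300, 400)))  # 500.0
```

Feeding in the same world matrix and camera position that are passed to the effect via `SetValue()` gives reference distances to compare against what appears on screen.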

…then, in the pixel shader, I do a check like this:

    if (input.CameraDistance < 500)
    {
        // Sample detail map and blend it with regular texture
    }
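
If the goal is for pixels to blend more with the detail map the closer the camera gets, the hard `< 500` cut-off could be replaced by a weight that fades out with distance. A minimal Python sketch of that math, assuming a linear fade (the original only specifies the 500-unit threshold); `DETAIL_RANGE`, `detail_weight` and `shade` are hypothetical names, while `saturate` and `lerp` mirror the HLSL intrinsics of the same name.

```python
DETAIL_RANGE = 500.0  # same cut-off as the `CameraDistance < 500` check


def saturate(x):
    """HLSL saturate(): clamp to [0, 1]."""
    return max(0.0, min(1.0, x))


def lerp(a, b, t):
    """HLSL lerp() for scalars: a + t * (b - a)."""
    return a + t * (b - a)


def detail_weight(camera_distance):
    """1 at the camera, falling linearly to 0 at DETAIL_RANGE and beyond."""
    return saturate(1.0 - camera_distance / DETAIL_RANGE)


def shade(base, detail, camera_distance):
    """Blend one colour channel of the base texture toward the detail map."""
    return lerp(base, detail, detail_weight(camera_distance))


for d in (0.0, 250.0, 500.0, 800.0):
    print(d, detail_weight(d))
# 0.0 1.0
# 250.0 0.5
# 500.0 0.0
# 800.0 0.0
```

In the pixel shader this would correspond to something like `lerp(baseColor, detailColor, saturate(1 - input.CameraDistance / 500))`, where `baseColor` and `detailColor` are placeholder names for the two sampled colours.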

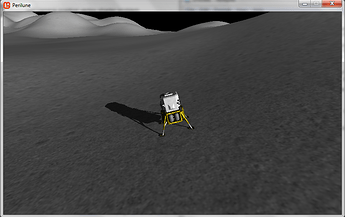

The blending works fine, but only for certain camera positions! For example, from this direction it works fine:

… but if I move the camera slightly, it doesn’t work at all:

I think it’s something to do with the CameraPosition variable, which represents the coordinates of the camera in world space and is sent into my shader from the main program using SetValue(). If I remove any dependency on positionWorld and just do something like this in the vertex shader:

    float cameraDistance = length(CameraPosition);

…I still get unexpected results which respond very strangely to small movements of the camera.

I tried it on Android and desktop GL, and the problem appears on both. Can anyone who knows more about GLSL see an obvious reason why passing a value that depends on a global variable like CameraPosition from the vertex shader to the pixel shader could cause problems?

I should also say that if I use the same logic but calculate the cameraDistance in the pixel shader, it works without any bugs.

Cheers!

This can be solved faster (and more easily) by just using the view-space/screen-space coordinate of the vertex, where z is basically the depth value (0-1) as seen from the camera, scaled to the near/far plane of your camera (it may need a division by w … not exactly sure at the moment). You don’t need any distance calculations in that case.
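
To see how this suggestion differs from the original calculation: the view-space z of a vertex measures depth along the camera's viewing direction, whereas `length(CameraPosition - positionWorld)` measures straight-line distance. A minimal Python sketch, assuming a camera looking down -Z (as with a right-handed look-at view matrix, where visible points have negative view-space z) and a unit-length forward vector; the helper names are hypothetical.

```python
import math


def sub(a, b):
    """Component-wise a - b for 3D points."""
    return [a[i] - b[i] for i in range(3)]


def dot(a, b):
    """3D dot product."""
    return sum(a[i] * b[i] for i in range(3))


def view_depth(world_pos, camera_pos, camera_forward):
    """Planar depth: distance along the unit forward axis (|view-space z|)."""
    return dot(sub(world_pos, camera_pos), camera_forward)


def radial_distance(world_pos, camera_pos):
    """What the original shader computes: length(CameraPosition - positionWorld)."""
    return math.dist(world_pos, camera_pos)


camera = (0.0, 0.0, 0.0)
forward = (0.0, 0.0, -1.0)  # assumed convention: looking down -Z

for p in [(0.0, 0.0, -400.0), (300.0, 0.0, -400.0)]:
    print(p, view_depth(p, camera, forward), radial_distance(p, camera))
```

For the point straight ahead both values are 400; for the off-axis point the depth stays 400 while the distance is 500. So a threshold on view-space depth gives a flat cut-off plane facing the camera, rather than a sphere around it.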

This is all a bit over my head and winds me up when there’s no easy way to debug.