I have a strange problem that I still can't solve, even after spending about 14 hours straight on it.

I am trying to write a few pixel shader functions, and the results always come out looking wrong for some reason. After a lot of testing, I found that the range of the TEXCOORD0 values changes with the size of the original texture. I've read that the TEXCOORD0 semantic is deprecated, that we should use SV_POSITION instead of POSITION, and so on; anyhow…

This is the shader code I am using:

```hlsl
#if OPENGL
    #define SV_POSITION POSITION
    #define VS_SHADERMODEL vs_3_0
    #define PS_SHADERMODEL ps_3_0
#else
    #define VS_SHADERMODEL vs_4_0_level_9_1
    #define PS_SHADERMODEL ps_4_0_level_9_1
#endif

Texture2D SpriteTexture;

float TimeE;
float H;
float CD; // threshold compared against uv.y below (used but not declared in the original snippet; assumed to be an effect parameter)

sampler2D SpriteTextureSampler = sampler_state
{
    Texture = <SpriteTexture>;
};

struct VertexShaderOutput
{
    float4 Position : Position;
    float4 Color : COLOR0;
    float2 uv : TEXCOORD0;
};

float4 ColdDown1(VertexShaderOutput input) : COLOR
{
    // Sample the sprite and apply the SpriteBatch tint color.
    float4 color = tex2D(SpriteTextureSampler, input.uv) * input.Color;

    // Add 0.4 to the blue channel wherever uv.y is at or past the CD threshold.
    if (input.uv.y >= CD) color.b = color.b + 0.4f;

    return color;
}

technique ColorShiftTec
{
    pass Pass0 { PixelShader = compile PS_SHADERMODEL ColdDown1(); }
};
```
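For comparison, here is MonoGame's stock SpriteEffect template (the file its content tools generate for a new sprite effect), which the shader above closely resembles. Details may differ slightly between MonoGame versions, and the comments are added here, not part of the template. The one structural difference in the input struct is the semantic on `Position`: the template declares it as `SV_POSITION` (mapped to `POSITION` only in OpenGL builds), while the shader above declares it as `Position`. Whether that alone explains the uv range is not established here, but it is an easy thing to compare.

```hlsl
#if OPENGL
    // SV_POSITION is a Direct3D 10+ system-value semantic, so the
    // OpenGL path (shader model 3.0) maps it to the older POSITION.
    #define SV_POSITION POSITION
    #define VS_SHADERMODEL vs_3_0
    #define PS_SHADERMODEL ps_3_0
#else
    #define VS_SHADERMODEL vs_4_0_level_9_1
    #define PS_SHADERMODEL ps_4_0_level_9_1
#endif

Texture2D SpriteTexture;

sampler2D SpriteTextureSampler = sampler_state
{
    Texture = <SpriteTexture>;
};

// Pixel shader input as declared in the template:
// SV_POSITION, then COLOR0, then TEXCOORD0.
struct VertexShaderOutput
{
    float4 Position : SV_POSITION;
    float4 Color : COLOR0;
    float2 TextureCoordinates : TEXCOORD0;
};

// Samples the sprite texture and applies the SpriteBatch tint color.
float4 MainPS(VertexShaderOutput input) : COLOR
{
    return tex2D(SpriteTextureSampler, input.TextureCoordinates) * input.Color;
}

technique SpriteDrawing
{
    pass P0
    {
        PixelShader = compile PS_SHADERMODEL MainPS();
    }
};
```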

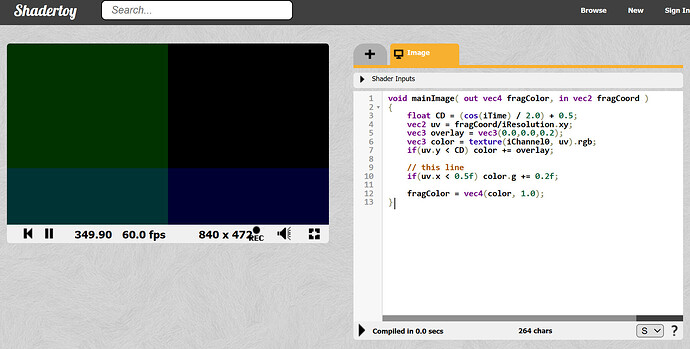

No matter what I do in the function, the result is different from what online simulators like Shadertoy show (after I manually translate the GLSL to HLSL).

And even when I use fixed values, like

```hlsl
if (input.uv.x < 0.5) color.g = color.g + 0.2f;
```

I get a different result than the simulated one.
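A quick way to see what TEXCOORD0 actually contains, independent of any effect logic, is to write uv straight out as a color. This is a minimal debugging sketch that keeps the same input struct as the shader above so it sees exactly what `ColdDown1` sees; `ShowUV` and `ShowUVTec` are hypothetical names. If uv really spans 0 to 1 in SpriteBatch's usual top-left-origin texture space, the red channel grows from left to right and green from top to bottom, ending yellow in the bottom-right corner; a sprite that stays nearly black means uv only covers a small part of that range.

```hlsl
#if OPENGL
    #define SV_POSITION POSITION
    #define VS_SHADERMODEL vs_3_0
    #define PS_SHADERMODEL ps_3_0
#else
    #define VS_SHADERMODEL vs_4_0_level_9_1
    #define PS_SHADERMODEL ps_4_0_level_9_1
#endif

// Same input struct as the shader being debugged.
struct VertexShaderOutput
{
    float4 Position : Position;
    float4 Color : COLOR0;
    float2 uv : TEXCOORD0;
};

// Ignores the texture and outputs uv as a color:
// red = uv.x, green = uv.y, each clamped to [0, 1] by saturate.
float4 ShowUV(VertexShaderOutput input) : COLOR
{
    return float4(saturate(input.uv), 0.0f, 1.0f);
}

technique ShowUVTec
{
    pass Pass0 { PixelShader = compile PS_SHADERMODEL ShowUV(); }
};
```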

After a lot of attempts and tinkering, I found that the values differ depending on the texture I use: when I edit the image and change its size, I get different results. So I ran a few tests, and here they are:

- For a texture with a width and height of 4000, uv (both u and v) ranges from 0 to ~0.14438.
- For a texture of size 2000, uv ranges from 0 to ~0.2896, which is double the value.
- For a size of 1 pixel, uv goes from 0 to 579.2…
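Multiplying each measured maximum by the texture's side length $S$ (in pixels) shows that the product stays nearly constant, so the maximum uv value scales as $1/S$ instead of staying fixed at 1:

$$
\begin{aligned}
0.14438 \times 4000 &\approx 577.5 \\
0.2896 \times 2000 &= 579.2 \\
579.2 \times 1 &= 579.2
\end{aligned}
\qquad\Longrightarrow\qquad u_{\max} \approx \frac{579}{S}
$$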

I copied the project and tested it on a different computer, and the results were the same. That's kind of good news, because I could just use magic numbers, pray to God that they never break and that I never change atlas sizes, and rework my effects manager to do the calculation once I find the exact magic number. But no, screw all of that <_<; shouldn't TEXCOORD0 have values from 0 to 1?

If anyone has an idea of what I am doing wrong, I would appreciate it. I tried changing the pixel shader but didn't notice any difference.