Is it OK to manipulate only the pixels, without touching the actual shape of the texture?

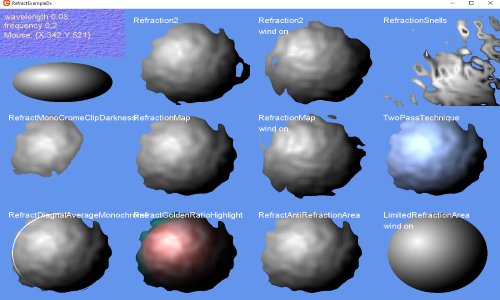

Yep, the pixel shader can displace where texels are taken from on an image.

Put conceptually, the pixel shader draws each and every destination pixel and lets you control where the corresponding texel is grabbed from. Normally that mapping is proportionally even, but you can distort the grab, or even blend it, for all positions via some math function you come up with. In other words, you distort the texel grab positions, aka the uv sampling.
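To make that concrete, here is a rough CPU-side sketch in Python (not actual shader code) of what a pixel shader does conceptually: visit every destination pixel, work out its uv, optionally distort it, then grab a texel. The names `sample_point`, `wave_uv` and `run_pixel_shader` are made up for illustration, and the sine wave is just one example of a distortion function.

```python
import math

def sample_point(image, u, v):
    """Nearest-texel lookup; out-of-range uv is clamped to the image edge."""
    height = len(image)
    width = len(image[0])
    x = min(max(int(u * width), 0), width - 1)
    y = min(max(int(v * height), 0), height - 1)
    return image[y][x]

def wave_uv(u, v, amplitude=0.1, frequency=2.0):
    """Hypothetical distortion: shift u sideways by a sine of v."""
    return u + amplitude * math.sin(2.0 * math.pi * frequency * v), v

def run_pixel_shader(image, dest_width, dest_height, distort=None):
    """Visit every destination pixel and fetch the texel it maps to."""
    output = []
    for py in range(dest_height):
        row = []
        for px in range(dest_width):
            # Proportionally even mapping: pixel centre expressed in 0..1 uv space.
            u = (px + 0.5) / dest_width
            v = (py + 0.5) / dest_height
            if distort is not None:
                # This is the only place the "grab position" gets changed.
                u, v = distort(u, v)
            row.append(sample_point(image, u, v))
        output.append(row)
    return output
```

Calling `run_pixel_shader(image, w, h, distort=wave_uv)` gives a wavy version of the image while the destination rectangle itself stays exactly the same shape.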

The vertex shader, on the other hand, works by displacing the positions of the vertices that define where the drawing area will be. The texture is then stretched proportionally between the texture positions assigned to each vertex.

In general a square image has 4 vertices in total, the corners of the destination drawing rectangle. The uv texel positions are straightforward: 0,0 is the top left and 1,1 is the bottom right. However, you can assign more points to draw a square image, say 9 points (top left, top middle, top right and so forth down), with matching uv positions where the middle points have 0.5 values. Later these can be distorted in the vertex shader.
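As a rough sketch of the 9-point idea, this Python snippet builds a grid of vertices with matching uv values plus the triangle indices to draw it. `make_grid` is a hypothetical helper, not part of any framework, and the triangle winding order here is an arbitrary choice you may need to flip for your culling settings.

```python
def make_grid(columns, rows):
    """Return ((x, y), (u, v)) vertices and triangle indices for a vertex grid."""
    vertices = []
    for r in range(rows):
        for c in range(columns):
            u = c / (columns - 1)
            v = r / (rows - 1)
            # Position starts equal to uv (a flat unit quad); displace it later.
            vertices.append(((u, v), (u, v)))
    indices = []
    for r in range(rows - 1):
        for c in range(columns - 1):
            top_left = r * columns + c
            top_right = top_left + 1
            bottom_left = top_left + columns
            bottom_right = bottom_left + 1
            # Two triangles per grid cell.
            indices += [top_left, top_right, bottom_left,
                        top_right, bottom_right, bottom_left]
    return vertices, indices
```

`make_grid(2, 2)` is the plain 4-corner quad, and `make_grid(3, 3)` is the 9-point version where the centre vertex has uv 0.5, 0.5.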

Either is possible.

But in your example you have MagFilter, which is absent from the documentation. Is this related to the DX9 and DX11 differences?

Dunno why it would be missing from the docs; it should be applicable to all of them.

Anyway, min and mag filter are short for minification and magnification sampling filters.

These filters tell the card what to do if the destination is smaller or larger than the source texels.

Say I draw to destination screen pixels 0 to 100 from an image whose source texels range from 0 to 10.

The magnification filter can sample and blend points (between integer texels) by a fractional amount with linear, or blend many texels with anisotropic.
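Here is a minimal 1D sketch of the linear case in Python, assuming texel centres sit at i + 0.5 and the edges are clamped (both are assumptions for illustration; `sample_linear_1d` is a made-up name). It blends the two texels either side of the grab position by the fractional amount.

```python
def sample_linear_1d(texels, u):
    """Blend the two texels either side of u (0..1) by the fractional distance."""
    # Move into texel space; centres are assumed to sit at i + 0.5.
    position = u * len(texels) - 0.5
    # Clamp so positions past the first or last centre just return the edge texel.
    position = min(max(position, 0.0), len(texels) - 1.0)
    left = int(position)
    right = min(left + 1, len(texels) - 1)
    fraction = position - left
    return texels[left] * (1.0 - fraction) + texels[right] * fraction
```

With two texels of value 0.0 and 10.0, sampling halfway between their centres (u = 0.5) gives an even blend of the two.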

For example, with point sampling, say I grab a pixel from u = 0.5. It can just go 10 * 0.5 = 5 and grab source texel 5 for a range of destination positions within the 0 to 100 pixels, namely all positions from 50 to 59. For position 59 we calculate 59 / 100 = 0.59, then 0.59 * 10 = 5.9 (in this case point filter means get rid of the fraction), so 5 is the source texel grabbed from the image that ranges from 0 to 10. It ends up at destination 50 to 59 of the 0 to 100 area on screen: a big blocky draw.
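The same arithmetic as a tiny Python sketch. `point_sample_index` is a hypothetical helper, and it uses integer division so the "drop the fraction" step is exact rather than relying on float rounding.

```python
def point_sample_index(dest_pixel, dest_size, source_size):
    """Source texel index for a destination pixel under point filtering."""
    # Same as floor((dest_pixel / dest_size) * source_size), done in integers.
    index = (dest_pixel * source_size) // dest_size
    # Keep the result inside the texture if dest_pixel == dest_size.
    return min(index, source_size - 1)

# Destination pixels 50..59 all grab source texel 5: the blocky look.
block = [point_sample_index(p, 100, 10) for p in range(50, 60)]
```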

The minification filter tells the card how to handle downsampling when the source texels range from, say, 0 to 100 and the destination pixels on screen are 0 to 10. There, u = 0.5 actually represents many texels that would have to be sampled (aka looked up and RGB averaged) to shrink the image to one tenth the size.
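A minimal Python sketch of that averaging, on a single channel in 1D. This is a plain box average and assumes the source size is an exact multiple of the destination size; real hardware typically leans on precomputed mipmaps rather than averaging every texel at draw time. `minify_box_1d` is a made-up name.

```python
def minify_box_1d(texels, dest_size):
    """Average each run of source texels that lands on one destination pixel."""
    ratio = len(texels) // dest_size  # assumes an exact integer ratio
    return [
        sum(texels[i * ratio:(i + 1) * ratio]) / ratio
        for i in range(dest_size)
    ]
```

Shrinking 100 texels to 10 pixels means each destination pixel is the average of 10 source texels.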

AddressU and AddressV describe the texel grab positions x and y on the texture, named uv instead for the sake of clarity. These settings mainly control what happens if the texel grab position is below 0 or above 1, basically out of bounds of the image …

Do we WRAP around to the other side of the image?

Do we MIRROR back into the texture from that edge?

Do we CLAMP at the edge and just return the nearest valid edge pixel?
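The three options can be sketched as small Python functions that take an out-of-bounds u and bring it back into 0 to 1. The function names are just illustrative; in a real sampler you pick the mode, you don't write this math yourself.

```python
import math

def wrap(u):
    """WRAP: keep only the fractional part, so 1.25 comes back in at 0.25."""
    return u - math.floor(u)

def mirror(u):
    """MIRROR: reflect back into the texture, so 1.25 becomes 0.75."""
    t = u % 2.0  # the mirrored pattern repeats every 2 units
    return 2.0 - t if t > 1.0 else t

def clamp(u):
    """CLAMP: pin to the nearest valid edge."""
    return min(max(u, 0.0), 1.0)
```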

These 5 are the primary filter concepts to take note of; the W is important for anisotropic primarily.

Anti-aliasing is a separate topic, but you need to be aware of the above before you start to worry about it. In general, anti-aliasing can be thought of as a 1 to 1 texel-to-pixel ratio domain problem where depth and the real physical properties of a monitor’s LED lighting also factor into it…

If you want to go the vertex route you also need to create a vertex shader, set up the world, view and projection matrices, send in the textures, etc. You can’t really use SpriteBatch at that point.

Here is a mesh class that will generate a grid mesh from an array of Vectors. Unless Charles has something ready-made; I don’t at the moment.

Mesh class

Thanks

Thanks