Hello

I know that this topic has been discussed many times already. I did a lot of research and found some potential solutions for my issue, but I’m not really happy with any of them. Maybe someone has a better idea?

The problem statement:

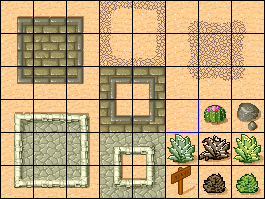

I render a tile-map, tile by tile to the screen. Of course, I use SamplerState.PointClamp as texture filter. The tile-set is like a texture atlas. It is this example tile-set from tiled:

As you can see, every tile has a black border in the tile-set, so there is some kind of padding. Note the blue border on one of the tiles, which I added intentionally to debug this issue.

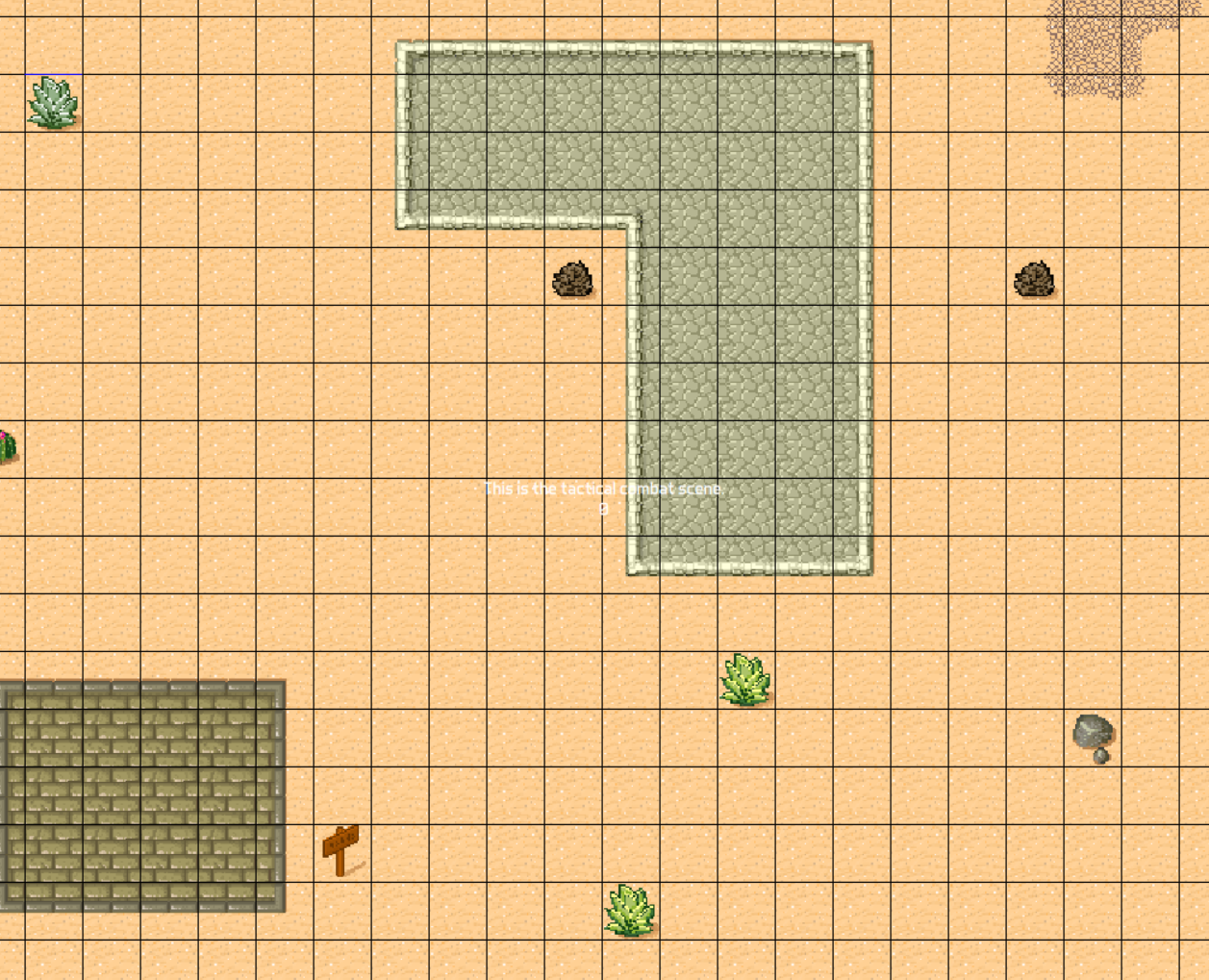

I have a camera with zoom. The camera position and the zoom is applied as transformMatrix in the Begin(...) method of the sprite-batch. If I have certain zoom levels, I suddenly see black gaps between the tiles like this:

At first I thought that these were really gaps between the tiles, but they aren’t. I changed the background color from black to pink, and the gaps were still black. Then I found out that this issue is called “texture bleeding”.

This happens when textures are stored in a texture atlas, like a tile-set atlas. The texture coordinates are given as floating point numbers from (0.0, 0.0) to (1.0, 1.0), which correspond to the top-left and bottom-right corners of the atlas. Now it can happen that the texture coordinates for the left edge of a tile are subject to floating point imprecision, so that the sampler does not pick the first pixel of that tile inside the texture, but the pixel before it (which is the black padding pixel). That is why I get those strange gaps at certain zoom levels.
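To make this concrete, here is a minimal sketch of how I understand point sampling in one dimension. The atlas width, the tile position and the size of the error are made-up values for illustration, and real GPUs work with 32-bit floats and their own rounding rules, so this only shows the principle.

```python
import math

ATLAS_WIDTH = 256   # hypothetical atlas width in pixels
TILE_LEFT = 33      # hypothetical first pixel column of a tile (column 32 is black padding)
ERROR = 1e-9        # assumed tiny error in the normalized coordinate

def texel_index(u: float, atlas_width: int) -> int:
    """Point sampling: return the pixel column whose span contains coordinate u."""
    return math.floor(u * atlas_width)

u_exact = TILE_LEFT / ATLAS_WIDTH   # exactly on the left edge of the tile
u_low = u_exact - ERROR             # the same coordinate, nudged slightly to the left

print(texel_index(u_exact, ATLAS_WIDTH))  # 33, the first pixel of the tile
print(texel_index(u_low, ATLAS_WIDTH))    # 32, the padding pixel
```

Even an error far smaller than one pixel is enough to land in the padding column, because the coordinate sits exactly on the boundary between two pixels.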

I found two very common workarounds for this, and a third one that is recommended less often.

-

The first very obvious workaround is to not have any zoom levels at all, applied to the

transformationMatrix. Often it is recommended to render the tiles to an empty texture of the size of the screen and then apply the zoom level on that texture, when rendering it to the screen. This sound like a nice workaround, since often you anyway have to apply post-processings, so having this system inside the game, would make those post-processing easier. However it only works nice for zooming in. If you also want to allow zooming out, you need an texture render target, which is 1 / zoom-level times bigger than the screen itself. For huge screens like 4k and zoom levels of around 0.1, this leads to a very huge render target. Furthermore, I’m not 100% sure, if this really fixes this issue in all cases and for all possible camera positions. Maybe, when the size of the atlas changes (for example getting much bigger) then the floating point imprecision starts again, even on zoom levels of 1, but then just on certain camera positions. -

The second very smart workaround is, to just add a padding pixel around every tile, which copies the color from the adjacent tile-pixel. If the floating point imprecision starts, it is just selecting the prepared padding pixel instead of the black border, thus we don’t see black gaps. I already tried this out, by just reducing the tile size by 2 pixel and it works fine. However, I now get on some zoom-levels and camera positions artifacts, where the pixels of some tiles, sometimes look twice as big as all other pixels. Normally this is not really noticeable, except you move the camera a lot and then you get on some flickering around those tiles. Especially on tiles with high color diversity (like the ones with the bricks), this looks ugly. Furthermore it means, that every atlas must be preprocessed by any kind of script to add the padding automatically. It really seems to be just a workaround and not a solution to me.

-

And now there is the 3rd solution I found here: Gaps Between Tiles - #16 by CSharpCoder In this thread the author is suggesting to add a small offset to the texture coordinates inside the pixel shader, to prevent a sampling of the wrong pixel. Honestly the thread is some kind of chaotic, the so called “solution” changes from post to post (sometimes, the author is giving a half pixel offset, sometimes just a very small offset like

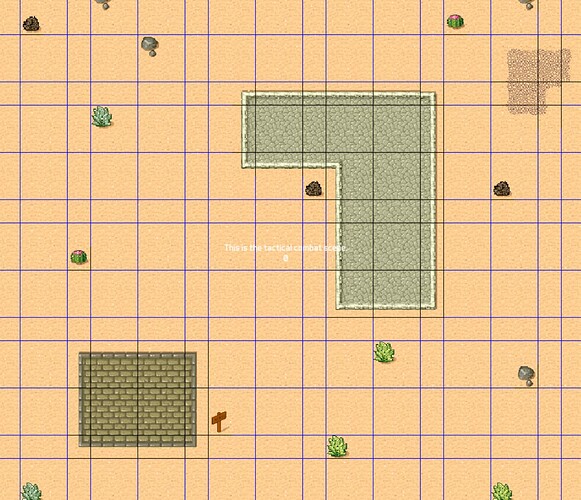

pixelSize * 0.001. Also here, I have the feeling, that is is not proven, that this is a generic solution for all kind of textures, camera positions and zoom-levels. I tried it out with the half pixel offset and get the following result:

Now I get blue gaps, so it is not under-sampling but over-sampling the tile in the atlas (I don’t know if these are the right words for that). When I just apply an offset of pixelSize * 0.01, it works fine. But here too, I have the feeling that at some zoom levels I get flickering when moving the camera (like with the second solution). Furthermore, I have to implement my own pixel shader and pass the texture size to it, which might be a performance issue if I want to mix tiles from different tile-sets in the same map (then I have to change the texture size every time I render a tile).
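My rough understanding of why the half pixel offset trades one kind of gap for another is sketched below. It is a one-dimensional model with made-up numbers, and it assumes that the offset is either added to both edges of the tile (a shift) or used to pull both edges inward (an inset). I don’t know which of the two the shaders in the linked thread actually do, so treat this as an assumption.

```python
import math

ATLAS_WIDTH = 256               # hypothetical atlas width in pixels
TILE_FIRST, TILE_LAST = 33, 64  # hypothetical tile columns; 32 and 65 are padding
ERR = 1e-6                      # assumed worst-case coordinate error, in UV units

def texel(u):
    """Point sampling: pixel column that contains the normalized coordinate u."""
    return math.floor(u * ATLAS_WIDTH)

def edge_texels(u0, u1):
    """Columns hit at both extremes if the error pushes each edge outward."""
    return texel(u0 - ERR), texel(u1 + ERR)

u0 = TILE_FIRST / ATLAS_WIDTH        # left edge of the tile
u1 = (TILE_LAST + 1) / ATLAS_WIDTH   # right edge of the tile

def shift(texels):
    """Move both edges to the right by the given number of pixels."""
    d = texels / ATLAS_WIDTH
    return u0 + d, u1 + d

def inset(texels):
    """Pull both edges toward the tile center by the given number of pixels."""
    d = texels / ATLAS_WIDTH
    return u0 + d, u1 - d

plain = edge_texels(u0, u1)
shifted_half = edge_texels(*shift(0.5))
inset_small = edge_texels(*inset(0.01))

print(plain)         # (32, 65): padding on both sides
print(shifted_half)  # (33, 65): left edge fixed, right edge still in the padding
print(inset_small)   # (33, 64): both edges stay inside the tile
```

In this model the small inset only helps as long as it is larger than the actual coordinate error, which is exactly the part I cannot prove for all zoom levels and atlas sizes.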

So as you can see, I’m not really happy with any of those solutions. I would say that the first solution seems to be the most reasonable, if there is a guarantee that it really solves the issue. But why should the floating point imprecision only occur at certain zoom levels and not also at certain camera positions with zoom level 1? Does anyone have an explanation for that?
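To explore this question, I wrote a small one-dimensional model. It assumes that one texture pixel equals one world unit, that screen pixels are sampled at their centers, and that the camera is a plain translation plus a zoom factor. All of these are simplifications of my own and not how SpriteBatch or the GPU are actually specified.

```python
def min_edge_distance(zoom: float, camera_x: float, screen_width: int = 64) -> float:
    """Smallest distance (in texture pixels) between any sample point and a pixel boundary."""
    best = 0.5
    for x in range(screen_width):
        # Texture-space position of the center of screen pixel x.
        world = (x + 0.5) / zoom + camera_x
        frac = world % 1.0
        best = min(best, frac, 1.0 - frac)
    return best

print(min_edge_distance(1.0, 0.0))  # 0.5: every sample hits the middle of a pixel
print(min_edge_distance(1.0, 0.5))  # 0.0: zoom 1, but a half-pixel camera position
print(min_edge_distance(1.5, 0.0))  # 0.0: some samples fall exactly on a boundary
print(min_edge_distance(2.0, 0.0))  # 0.25: still a safe distance from every boundary
```

If this model is roughly right, zoom level 1 is only safe as long as the camera sits on whole pixel positions. A distance of 0.0 means the sample lies exactly on the border between two pixels, where the tiniest rounding error decides which one is picked.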

Furthermore, I wonder how the “half pixel correction” of DirectX plays into that. Maybe there is just a flag I have to switch on to get rid of the issue? However, setting GraphicsDeviceManager.PreferHalfPixelOffset to true does not change anything.

Are there more detailed (theoretical) explanations of this issue? How do you deal with it?