So I’m working on a little engine using MonoGame and BEPU physics, and I am trying to figure out the best way to organize my scenes to minimize graphics calls while keeping the scene sorted so that it renders correctly. I have yet to find a nice, concise article that describes best practices for XNA or MonoGame.

I’ve done some thinking and I have come up with 3 solutions, mostly revolving around using “flags” in my rendering stack to handle state changes:

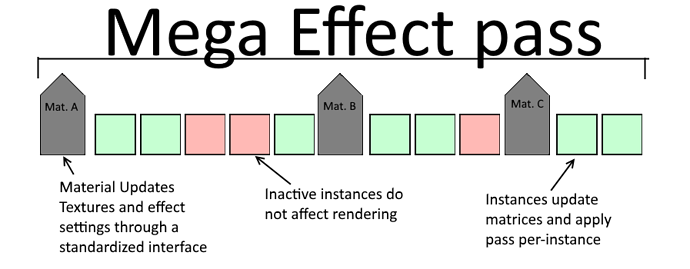

Solution 1 - Mega Effects

The basic idea here is that my scene would have a Render method to which I pass a "Mega Effect" interface. For each material, I would update the textures and settings such as blend modes and depth culling, then render all the instances until the next material change flag. Each instance still needs to update its matrices, so I still need to apply the pass for each instance (is there any other way to do this?).
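A language-neutral sketch of Solution 1 follows, written in Python for brevity (a MonoGame version would be C#). `Material`, `Instance`, `MegaEffect` and `render` are hypothetical names. The effect only records a snapshot of its parameters at the point where real code would call `pass.Apply()`. The sketch shows that texture and blend state change once per material run, while the pass is still applied once per instance.

```python
from dataclasses import dataclass, field


@dataclass
class Material:
    name: str
    texture: str
    blend: str  # hypothetical label such as "opaque" or "alpha"


@dataclass
class Instance:
    material: Material
    world: tuple  # stand-in for a world matrix


@dataclass
class MegaEffect:
    """Hypothetical stand-in for the single shared effect; it only records what is applied."""
    params: dict = field(default_factory=dict)
    applied: list = field(default_factory=list)

    def apply_pass(self):
        # Where MonoGame code would call pass.Apply(), this sketch snapshots the parameters.
        self.applied.append(dict(self.params))


def render(instances, effect):
    """Draw instances pre-grouped by material; return how many material changes occurred."""
    current = None
    material_changes = 0
    for inst in instances:
        if inst.material is not current:  # the "material change flag"
            effect.params["texture"] = inst.material.texture
            effect.params["blend"] = inst.material.blend
            current = inst.material
            material_changes += 1
        effect.params["world"] = inst.world  # per-instance matrices
        effect.apply_pass()                  # still one apply per instance
    return material_changes


brick = Material("brick", "brick.png", "opaque")
glass = Material("glass", "glass.png", "alpha")
fx = MegaEffect()
changes = render([Instance(brick, (0,)), Instance(brick, (1,)), Instance(glass, (2,))], fx)
print(changes, len(fx.applied))  # prints: 2 3
```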

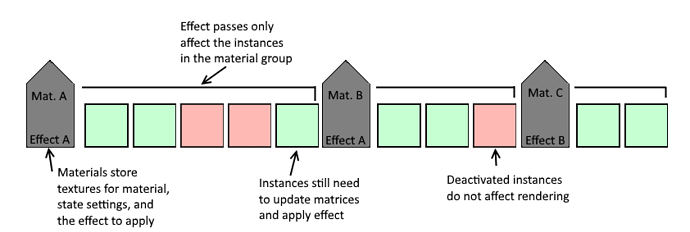

Solution 2 - Per-Material Effects

The idea here is that each material keeps an instance of its effect with the textures and settings already applied, and renders all of the instances until the next material flag using that effect. This is closer to what I'm leaning towards, but I see a few problems, mainly having to load an entirely new effect and its parameters multiple times per draw call, burning up some processor time.
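The cost of Solution 2 depends on how often the active effect switches. In the Python sketch below, each hypothetical `Material` owns a preconfigured `Effect`. `render` counts one switch per contiguous run of instances that share a material, so sorting the list by material reduces switches to one per material. All names are illustrative assumptions.

```python
from dataclasses import dataclass
from itertools import groupby


@dataclass(eq=False)
class Effect:
    """Hypothetical effect with its textures and settings baked in when the material loads."""
    technique: str
    settings: dict


@dataclass(eq=False)
class Material:
    effect: Effect


@dataclass(eq=False)
class Instance:
    material: Material
    world: tuple  # stand-in for a world matrix


def render(instances):
    """Switch to each material's preconfigured effect once per contiguous run.

    Returns (effect_switches, draws).
    """
    switches = draws = 0
    for _, run in groupby(instances, key=lambda inst: id(inst.material)):
        switches += 1  # bind this material's effect (the cost Solution 2 worries about)
        for _inst in run:
            draws += 1  # set _inst.world on the bound effect, apply, draw
    return switches, draws


a = Material(Effect("lit", {"texture": "a.png"}))
b = Material(Effect("lit", {"texture": "b.png"}))
scene = [Instance(a, (0,)), Instance(b, (1,)), Instance(a, (2,)), Instance(b, (3,))]
print(render(scene))                                              # (4, 4)
print(render(sorted(scene, key=lambda inst: id(inst.material))))  # (2, 4)
```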

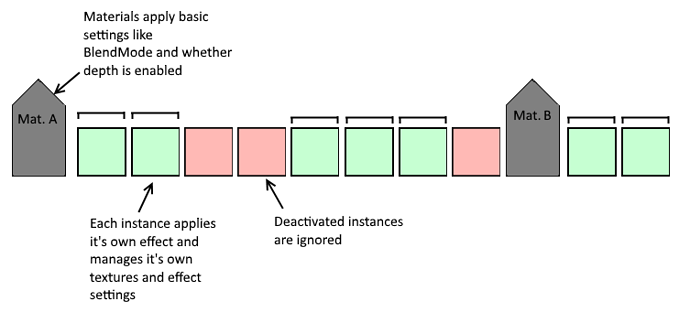

Solution 3 - Brute force

This is my least favorite idea. Basically, the materials would only manage low-level state changes like blend mode, depth culling, the rasterizer, etc. Each instance would be responsible for rendering itself and applying its own effect, textures and whatnot. I'm worried this would be the slowest, as you would constantly be switching between effects.
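One common way to soften Solution 3's cost is to filter out redundant state changes. This is not something proposed in this thread. The Python sketch below uses a hypothetical `StateCache` that forwards a change only when the value differs from the one already set. Each instance still "applies" its own effect and texture, but consecutive instances that share state cost nothing extra.

```python
class StateCache:
    """Hypothetical filter that forwards a state change only if it differs from the current one."""

    def __init__(self):
        self.current = {}
        self.requested = 0
        self.forwarded = 0

    def set(self, slot, value):
        self.requested += 1
        if self.current.get(slot) != value:
            self.current[slot] = value
            self.forwarded += 1  # a real engine would touch the graphics device here


def draw_brute_force(instances, cache):
    """Each instance applies its own effect and texture, as in Solution 3."""
    for effect, texture in instances:
        cache.set("effect", effect)
        cache.set("texture", texture)
        # ... set matrices and issue the draw call here


cache = StateCache()
draw_brute_force([("lit", "a.png"), ("lit", "a.png"), ("lit", "b.png"), ("unlit", "b.png")], cache)
print(cache.requested, cache.forwarded)  # 8 4
```

The filter only helps when instances with shared state end up next to each other, so it works best alongside sorting rather than as a replacement for it.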

Any tips or suggestions? I’m kind of at a crossroads here and I’m not sure what path to take. How do larger scale game engines like Unity or Unreal Engine handle this?

I have seen someone make a PrimitiveBatch, which operates in a similar manner to SpriteBatch but works on 3D objects. It put each rendered item into a list and then sorted that list before submitting the items to the GPU.
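Below is a minimal Python sketch of that collect/sort/submit pattern. `RenderQueue` and `DrawItem` are hypothetical names, not the API of the PrimitiveBatch mentioned here or of MonoGame.Extended. `draw` only queues an item. `end` sorts the queue by `sort_key` and then hands each item to a callback, which in a real engine would issue the draw call.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class DrawItem:
    sort_key: tuple  # e.g. (material, texture); any tuple that orders the way you want
    payload: str     # stand-in for mesh/material/transform data


class RenderQueue:
    """Hypothetical SpriteBatch-style batcher for 3D items: collect, sort, then submit."""

    def __init__(self, submit: Callable[[DrawItem], None]):
        self._submit = submit  # callback that would issue the real draw call
        self._items: List[DrawItem] = []
        self._active = False

    def begin(self):
        if self._active:
            raise RuntimeError("begin() called twice without end()")
        self._items.clear()
        self._active = True

    def draw(self, item: DrawItem):
        if not self._active:
            raise RuntimeError("draw() called outside begin()/end()")
        self._items.append(item)  # deferred until end(), nothing is drawn yet

    def end(self):
        if not self._active:
            raise RuntimeError("end() called without begin()")
        self._items.sort(key=lambda it: it.sort_key)
        for item in self._items:
            self._submit(item)
        self._active = False


submitted = []
queue = RenderQueue(submitted.append)
queue.begin()
queue.draw(DrawItem(("stone", "rock.png"), "boulder"))
queue.draw(DrawItem(("bark", "bark.png"), "tree"))
queue.end()
print([item.payload for item in submitted])  # ['tree', 'boulder']
```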

Ideally, the sorting would happen in two main groups: opaque and translucent. Different sorting rules apply to each group. In the opaque group, you want to sort by material. Within material, you want to sort by texture, states, etc. It may take some experimentation to see whether you get better results sorting by state first or by texture first. In the translucent group, you want to sort by depth (furthest first) for proper rendering of overlaid objects. In console titles I’ve done before, we had another group before opaque that was a depth prime. This was a copy of the opaque group, but sorted from closest to furthest and rendered to the depth buffer only. This allowed the depth tests during the opaque pass to skip shading pixels that would be occluded. That is a bit more advanced, though.
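The grouping described above can be sketched as three sorted lists, drawn in the order depth prime, opaque, translucent. The sketch is in Python, and `Item`, `depth` and `build_passes` are hypothetical names. "Depth" here is the straight-line distance to the camera. Using view-space z instead is an equally reasonable choice.

```python
import math
from dataclasses import dataclass


@dataclass
class Item:
    material: str
    texture: str
    state: str          # hypothetical label for blend/rasterizer state
    translucent: bool
    position: tuple     # world-space (x, y, z)


def depth(item, camera):
    # Straight-line distance to the camera; view-space z is a common alternative.
    return math.dist(item.position, camera)


def build_passes(items, camera):
    """Return (depth_prime, opaque_pass, translucent_pass), to be drawn in that order."""
    opaque = [i for i in items if not i.translucent]
    translucent = [i for i in items if i.translucent]
    # Depth prime: opaque items only, closest first, drawn to the depth buffer only.
    depth_prime = sorted(opaque, key=lambda i: depth(i, camera))
    # Opaque: material, then texture, then state (swap the last two to experiment).
    opaque_pass = sorted(opaque, key=lambda i: (i.material, i.texture, i.state))
    # Translucent: furthest first so overlapping surfaces blend correctly.
    translucent_pass = sorted(translucent, key=lambda i: depth(i, camera), reverse=True)
    return depth_prime, opaque_pass, translucent_pass


items = [
    Item("stone", "rock.png", "solid", False, (0, 0, 10)),
    Item("stone", "brick.png", "solid", False, (0, 0, 2)),
    Item("bark", "bark.png", "solid", False, (0, 0, 5)),
    Item("glass", "glass.png", "alpha", True, (0, 0, 1)),
    Item("glass", "glass.png", "alpha", True, (0, 0, 8)),
]
prime, opaque, translucent = build_passes(items, camera=(0, 0, 0))
print([i.texture for i in prime])          # ['brick.png', 'bark.png', 'rock.png']
print([i.texture for i in opaque])         # ['bark.png', 'brick.png', 'rock.png']
print([i.position for i in translucent])   # [(0, 0, 8), (0, 0, 1)]
```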

So I guess the thing that has me kind of stumped is the definition of material. From your description, it sounds like a material is basically an effect, and the instances have different variations of the material (textures and whatnot). So basically it would be closer to the second layout, but with translucent materials being put in a higher priority (rendered first), and instances applying their own textures and whatnot to the material (effect).

As for actually sorting on depth, what is your recommendation? I assume the list would need to be rebuilt every frame (the scene is very dynamic), which would chew up a lot of processor time. The opaque stuff is easy, as it is only sorted when it is actually added to the scene, and is activated/deactivated as needed.
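The translucent list doesn't need to be rebuilt every frame. One option is to keep a persistent list, registered once when objects spawn, and re-sort it in place each frame. The Python sketch below does this with hypothetical `Translucent` and `TranslucentList` classes. Python's `list.sort` is Timsort, which adapts to input that is already nearly sorted. Object order usually changes little between consecutive frames, so this case is cheap. The worst case is still O(n log n).

```python
import math


class Translucent:
    def __init__(self, name, position):
        self.name = name
        self.position = position  # updated by gameplay/physics every frame


class TranslucentList:
    """Hypothetical persistent list that is re-sorted in place each frame, not rebuilt."""

    def __init__(self):
        self.items = []

    def add(self, obj):
        self.items.append(obj)  # register once, e.g. when the object spawns

    def sort_for_frame(self, camera):
        # list.sort evaluates the key once per item; Timsort handles the usual
        # "almost sorted since last frame" case efficiently.
        self.items.sort(key=lambda o: math.dist(o.position, camera), reverse=True)
        return [o.name for o in self.items]  # furthest first


scene = TranslucentList()
scene.add(Translucent("smoke", (0, 0, 3)))
scene.add(Translucent("window", (0, 0, 10)))
print(scene.sort_for_frame((0, 0, 0)))   # ['window', 'smoke']
print(scene.sort_for_frame((0, 0, 12)))  # ['smoke', 'window']
```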

Finally, I was spelunking around the source for EffectPass and it looks like there is really no way to just update constant buffers without re-applying the entire pass. Maybe a future feature? A lil’ UpdateParameters method? ;D

I basically use your first idea: one shader for everything, and I change the material etc. for each group of objects.

I am not super familiar with the pipeline - how does graphics memory work?

Let’s say I had 10 materials:

If I have one .fx shader file and I change it per material, the game slows down a bit due to the bandwidth used when transferring that material, right?

If I have 10 .fx shader files and I load the materials to them on game startup - do they stay in GPU memory for good and consume no runtime bandwidth? How costly is it to change the current shader used?

Plus another question, @KonajuGames:

How exactly did you write to the depth buffer only? A RenderTarget with no Format.None?

Is the depth-stencil test applied before or after the fragment shader runs? I think I’ve read something about it happening afterwards, so what’s the benefit?

Thanks in advance

It can depend on the GPU and many factors, but generally changing any render state, including shaders, can incur some costs.

This was in a C++ game on PS3 and Xbox 360; I haven’t tried a depth prime in MonoGame yet. The benefit is mostly seen in scenes with costly pixel shaders. The depth prime is done with very simple shaders. The following pass then uses the full, expensive pixel shader, but it shades each screen pixel at most once, because occluded fragments fail the depth test and never reach the pixel shader.
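To make the benefit concrete, here is a toy one-scanline software simulation in Python. It is not GPU code. Surfaces are hypothetical `(start, end, z)` spans, and the functions count how often the "expensive" pixel shader would run with and without a depth-only first pass. On real hardware this saving depends on the GPU testing depth before running the pixel shader. GPUs typically do this when the shader neither writes depth nor discards pixels.

For MonoGame, the pieces to check would presumably be a `BlendState` whose `ColorWriteChannels` is `ColorWriteChannels.None` for the depth-only pass. The second pass would use a `DepthStencilState` with `DepthBufferFunction` set to `CompareFunction.Equal` (or `LessEqual`). That is an untested suggestion, not a recipe from the thread.

```python
def shaded_without_prepass(surfaces, width):
    """Draw (start, end, z) spans in the given order with a less-than depth test."""
    depth = [float("inf")] * width
    shaded = 0
    for start, end, z in surfaces:
        for x in range(start, end):
            if z < depth[x]:
                depth[x] = z
                shaded += 1  # the expensive pixel shader runs here
    return shaded


def shaded_with_prepass(surfaces, width):
    """Pass 1 writes depth only; pass 2 shades only fragments whose depth matches."""
    depth = [float("inf")] * width
    # Front to back: on real hardware this lets the prepass reject early too.
    for start, end, z in sorted(surfaces, key=lambda s: s[2]):
        for x in range(start, end):
            depth[x] = min(depth[x], z)  # cheap: no colour output
    shaded = 0
    for start, end, z in surfaces:
        for x in range(start, end):
            if z == depth[x]:  # an "equal" depth comparison
                shaded += 1
    return shaded


# Worst case for a single pass: three full-width layers drawn back to front.
layers = [(0, 8, 3.0), (0, 8, 2.0), (0, 8, 1.0)]
print(shaded_without_prepass(layers, 8))  # 24
print(shaded_with_prepass(layers, 8))     # 8
```

"At most once" assumes no two surfaces have exactly the same depth at a pixel. With an equal test, ties would be shaded twice.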

@ShawnM427 I’m working on a PrimitiveBatch over at MonoGame.Extended. It’s based on, with permission, the incomplete implementation of PrimitiveBatch over at Nuclex Framework.

You can see my current progress in this pull request.

One of the insights I used for figuring out how to minimize state changes was from this article.

I moved away from the default Draw function for models and now split models by their submeshes and textures. Then I render them sorted by texture and mesh, so I have the fewest possible state switches (I set the texture and material properties once for all models with that texture, and set the vertex buffer/index buffer once for all models with the same texture).
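Here is a rough Python sketch of that sort-then-bind scheme, assuming each draw is a hypothetical `(texture, mesh)` pair. After sorting by texture and then mesh, texture/material state is set once per texture group. Vertex/index buffers are set once per mesh within each texture group. Fewer binds is not automatically faster, though. If the bottleneck is elsewhere, the saving may not show up in frame times.

```python
from itertools import groupby


def count_binds(draws):
    """draws: (texture, mesh) pairs, one per submesh instance.

    Sorts by texture, then mesh, and counts state changes when each piece of
    state is only set at the start of its group.
    """
    texture_binds = buffer_binds = 0
    for _texture, by_texture in groupby(sorted(draws), key=lambda d: d[0]):
        texture_binds += 1  # texture + material properties set once
        for _mesh, _instances in groupby(by_texture, key=lambda d: d[1]):
            buffer_binds += 1  # vertex/index buffers set once per mesh in this group
    return texture_binds, buffer_binds


draws = [("rock.png", "boulder"), ("grass.png", "tuft"), ("rock.png", "pebble"),
         ("rock.png", "boulder"), ("grass.png", "tuft")]
print(len(draws), count_binds(draws))  # 5 (2, 3)
```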

Obviously the sorting and related code is highly susceptible to bugs now, since I need to register my models when they spawn and deregister them when they despawn, but it currently works without problems.

It works right now, but there is basically no performance benefit over "just draw the models in whatever sequence", which is a tad disappointing considering all the sorting and model registration happening in the background. At least it’s more flexible now in terms of having different materials set for different submeshes. Oh well.

I guess I am bottlenecked somewhere else. What tools do you guys use to determine GPU bottlenecks?