I have a very weird problem and I can’t find any mention of something similar anywhere. I have a test level that consists of just a bunch of static, visually identical geometry.

The problem is that the draw time for a single object sometimes becomes enormous. Almost all draws take 0.01 to 0.02 ms, but a few of them take up to 0.5 ms.

This problem appears in both OpenGL and DirectX.

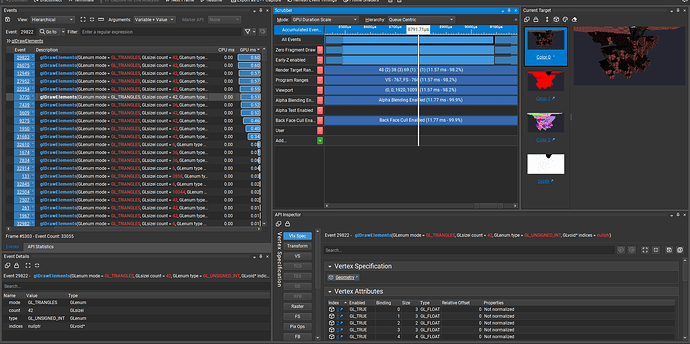

Here’s a screenshot from Nsight:

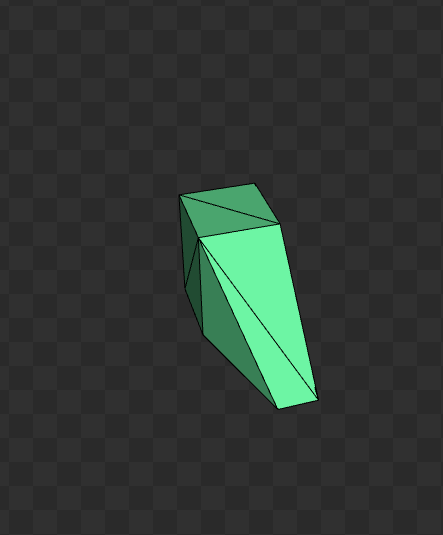

And here’s the geometry that is being rendered:

I also noticed that, despite everything, the framerate is relatively stable, but very low.

In case anybody is interested, here’s a link to the Nsight C++ capture:

https://mega.nz/file/emJC3T5Y#ycmSoH2yktZAtQoKHaHJ8uhrudS82cox8Ewzg1wYcVQ

Can you post a DX capture, please?

If possible, include the source for the vertex/pixel shaders used to render these.

Here’s a link to the DirectX version

After performing some tests, I’ve noticed that the number and size of the draw call performance drops increase with the amount and variety of operations performed on the GPU. For example, drawing a sprite causes smaller and less frequent drops, while drawing a cube makes them worse.

About the shaders: here’s the minimal shader that I used:

    #if OPENGL
    #define SV_POSITION POSITION
    #define VS_SHADERMODEL vs_3_0
    #define PS_SHADERMODEL ps_3_0
    #else
    #define VS_SHADERMODEL vs_5_0
    #define PS_SHADERMODEL ps_5_0
    #endif

    matrix World;
    matrix View;
    matrix Projection;

    struct VertexShaderInput
    {
        float4 Position : POSITION0;
        float4 BlendIndices : BLENDINDICES0;
        float4 BlendWeights : BLENDWEIGHT0;
    };

    struct VertexShaderOutput
    {
        float4 Position : SV_POSITION;
        float4 myPosition : TEXCOORD1;
    };

    VertexShaderOutput MainVS(in VertexShaderInput input)
    {
        VertexShaderOutput output;

        float4x4 boneTrans = GetBoneTransforms(input);

        // Transform the vertex position to world space
        output.Position = mul(input.Position, World);
        output.Position = mul(output.Position, View);
        output.Position = mul(output.Position, Projection);

        output.myPosition = output.Position;

        //output.Position.z *= DepthScale;

        return output;
    }

    float4 MainPS(VertexShaderOutput input) : SV_TARGET
    {
        // Retrieve the depth value from the depth buffer
        float depthValue = input.myPosition.z;

        return float4(depthValue,0,0,1);
    }

    technique NormalColorDrawing
    {
        pass P0
        {
            VertexShader = compile VS_SHADERMODEL MainVS();
            PixelShader = compile PS_SHADERMODEL MainPS();
        }
    };

Shader complexity doesn’t affect this issue at all.

`GetBoneTransforms(input);`

Could you post the content of this function, please?

OK, this is weird. The last time I saw something this weird (and fairly similar), it was GC pressure, and for whatever reason it affected the GPU time measurements (keep in mind Nsight wasn’t made for managed languages). Once the client fixed it, it was all good. As a sanity check, could you profile that in your VS?

I guess this is just a test scene, but to make sure: since you are nearing 4k draw calls, start considering instancing. MG has INSANE overhead for draw calls.
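To illustrate the instancing suggestion, here is a rough sketch of what an instanced variant of the minimal shader could look like. It assumes the per-instance world matrix arrives as four `float4` rows from a second vertex buffer; the `TEXCOORD1` to `TEXCOORD4` semantics and the names `InstancedVS`, `InstancedPS`, `WorldRow0` etc. are my own choices for illustration, not something taken from the project. Whether the OpenGL path accepts this depends on the MonoGame version, so treat it as a starting point rather than a drop-in replacement.

```hlsl
#if OPENGL
#define SV_POSITION POSITION
#define VS_SHADERMODEL vs_3_0
#define PS_SHADERMODEL ps_3_0
#else
#define VS_SHADERMODEL vs_5_0
#define PS_SHADERMODEL ps_5_0
#endif

// World is no longer a shader constant; it comes in per instance.
matrix View;
matrix Projection;

struct InstancedVSInput
{
    // Per-vertex data from the mesh vertex buffer
    float4 Position : POSITION0;

    // Per-instance data from a second vertex buffer (assumed layout:
    // the four rows of the instance's world matrix)
    float4 WorldRow0 : TEXCOORD1;
    float4 WorldRow1 : TEXCOORD2;
    float4 WorldRow2 : TEXCOORD3;
    float4 WorldRow3 : TEXCOORD4;
};

struct InstancedVSOutput
{
    float4 Position : SV_POSITION;
    float4 myPosition : TEXCOORD1;
};

InstancedVSOutput InstancedVS(in InstancedVSInput input)
{
    InstancedVSOutput output;

    // Rebuild the world matrix from its rows so mul(position, world)
    // follows the same row-vector convention as the original shader.
    float4x4 world = float4x4(input.WorldRow0, input.WorldRow1,
                              input.WorldRow2, input.WorldRow3);

    output.Position = mul(input.Position, world);
    output.Position = mul(output.Position, View);
    output.Position = mul(output.Position, Projection);

    output.myPosition = output.Position;

    return output;
}

float4 InstancedPS(InstancedVSOutput input) : SV_TARGET
{
    // Same debug output as the original pixel shader
    return float4(input.myPosition.z, 0, 0, 1);
}

technique InstancedDrawing
{
    pass P0
    {
        VertexShader = compile VS_SHADERMODEL InstancedVS();
        PixelShader = compile PS_SHADERMODEL InstancedPS();
    }
};
```

On the C# side this would be paired with an instance vertex buffer bound with an instance frequency of 1 and a single `GraphicsDevice.DrawInstancedPrimitives` call for all the identical objects, instead of one draw call per object.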