I’m trying to make a shader that supports specular highlights, but I can’t seem to get the normal passed into my fragment shader.

My shader code:

    struct VertexData
    {
        float4 position : POSITION0;
        float4 color : COLOR0;
        float4 specularColor : COLOR1;
        float3 normal : NORMAL0;
    };

    struct VertexToPixel
    {
        float4 position : POSITION0;
        float4 litColor : COLOR0;
        float4 specularColor : COLOR1;
        float3 normal : NORMAL0; //It refuses to recognize this
    };

    VertexToPixel CubeVertexShader(VertexData input)
    {
        VertexToPixel output;

        float4 worldPosition = mul(input.position, World);
        float4 viewPosition = mul(worldPosition, View);
        output.position = mul(viewPosition, Projection);

        float4 lightColor = ambientColor * ambientColor.a;

        //normally we'd need to switch the normal to be in world-space
        //but, it conveniently already is
        //so we cool.
        //as long as we don't do any world space rotation.
        float diffuseIntensity = dot(input.normal, -sunDir) * sunColor.a;
        if (diffuseIntensity > 0)
            lightColor = lightColor + sunColor * diffuseIntensity;

        output.litColor = float4(0, 0, 0, 0);
        output.litColor.rgb = input.color.rgb * lightColor.rgb;
        output.litColor.a = input.color.a;
        output.litColor = saturate(output.litColor);

        output.specularColor = input.specularColor;
        output.normal = input.normal;

        return output;
    }

    float4 CubePixelShader(VertexToPixel input) : COLOR0
    {
        float3 eyePosition = float3(0, 0, 1.0);

        float3 worldNormal = mul(float4(input.normal, 0), World).xyz;
        float3 reflection = normalize(sunDir - (2 * worldNormal * dot(sunDir, worldNormal)));
        reflection = mul(float4(reflection, 0), View).xyz;

        float3 specularResult = input.specularColor.rgb * sunColor.rgb * pow(dot(reflection, eyePosition), input.specularColor.a);

        //This next is an experimental function to
        float alphaFactor = max(max(specularResult.r, specularResult.g), specularResult.b);
        float3 premultSurfaceColor = input.litColor.rgb * input.litColor.a;

        float4 finalColor = float4(0, 0, 0, 0);
        finalColor.rgb = specularResult.rgb + (premultSurfaceColor.rgb * (1 - alphaFactor));
        finalColor.a = alphaFactor + input.litColor.a * (1 - alphaFactor);

        return finalColor;
    }

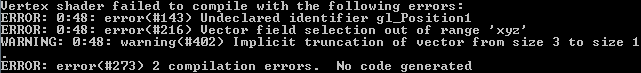

But it gives me this error:

The main error is the first one; the others are just caused by that variable being missing.

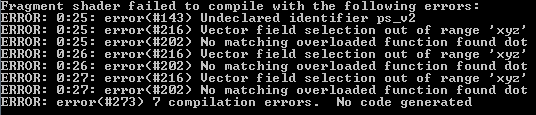

This is the GLSL code my fragment shader (and header) got translated to:

    uniform vec4 ps_uniforms_vec4[6];
    const vec4 ps_c8 = vec4(1.0, 0.0, 0.0, 0.0);
    vec4 ps_r0;
    vec4 ps_r1;
    vec4 ps_r2;
    #define ps_c0 ps_uniforms_vec4[0]
    #define ps_c1 ps_uniforms_vec4[1]
    #define ps_c2 ps_uniforms_vec4[2]
    #define ps_c5 ps_uniforms_vec4[3]
    #define ps_c6 ps_uniforms_vec4[4]
    #define ps_c7 ps_uniforms_vec4[5]
    varying vec4 vFrontColor;
    #define ps_v0 vFrontColor
    #define ps_oC0 gl_FragColor
    varying vec4 vFrontSecondaryColor;
    #define ps_v1 vFrontSecondaryColor

    void main()
    {
        ps_r0.x = dot(ps_v2.xyz, ps_c0.xyz);
        ps_r0.y = dot(ps_v2.xyz, ps_c1.xyz);
        ps_r0.z = dot(ps_v2.xyz, ps_c2.xyz);
        ps_r1.xyz = ps_r0.xyz + ps_r0.xyz;
        ps_r0.x = dot(ps_c6.xyz, ps_r0.xyz);
        ps_r0.xyz = (ps_r1.xyz * -ps_r0.xxx) + ps_c6.xyz;
        ps_r1.xyz = normalize(ps_r0.xyz);
        ps_r0.x = dot(ps_r1.xyz, ps_c5.xyz);
        ps_r1.x = pow(abs(ps_r0.x), ps_v1.w);
        ps_r0.xyz = ps_c7.xyz * ps_v1.xyz;
        ps_r0.xyz = ps_r1.xxx * ps_r0.xyz;
        ps_r1.x = max(ps_r0.x, ps_r0.y);
        ps_r2.x = max(ps_r1.x, ps_r0.z);
        ps_r0.w = -ps_r2.x + ps_c8.x;
        ps_oC0.w = (ps_v0.w * ps_r0.w) + ps_r2.x;
        ps_r1.xyz = ps_v0.www * ps_v0.xyz;
        ps_oC0.xyz = (ps_r1.xyz * ps_r0.www) + ps_r0.xyz;
    }

Did I do something wrong in the syntax, or is the code translator just arbitrarily dropping variables?