Dear all,

I am currently trying to perform some basic thermal analysis on a satellite. Basically, I need to calculate the projected surface areas (view factors) as seen from the sun and the earth. A common way to do so is via an orthographic projection of the surface of interest as seen from, e.g., the sun.

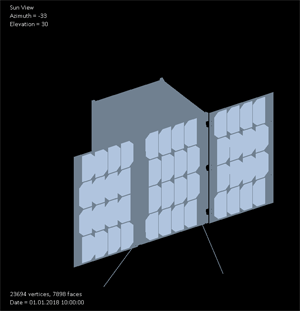

In this plot, you can, for example, see the solar cells (light steel blue) as visible from the sun.

Knowing the size of a single pixel in this representation, I only need to count the pixels of a given color to get the surface area. So far, I have done this by rendering to a RenderTarget2D, copying it into a Color[] array via GetData<Color>(), and looping over that array. This works well, but is very slow.
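For reference, the CPU version boils down to something like the sketch below. The names `PixelAreaCounter`, `CountMatching`, `ProjectedArea` and the `pixelArea` parameter are made up for illustration and are not part of MonoGame. It also assumes that the render target uses SurfaceFormat.Color and that the surface is drawn in a flat, unlit color without anti-aliasing, so that an exact color comparison is valid.

```csharp
using Microsoft.Xna.Framework;
using Microsoft.Xna.Framework.Graphics;

// Hypothetical helper for illustration only, not part of MonoGame.
public static class PixelAreaCounter
{
    // Counts the pixels whose color exactly equals 'key'.
    public static int CountMatching(Color[] pixels, Color key)
    {
        int count = 0;
        for (int i = 0; i < pixels.Length; i++)
        {
            if (pixels[i] == key)
                count++;
        }
        return count;
    }

    // Copies the render target back to the CPU and converts the pixel
    // count into an area. 'pixelArea' is the area that one pixel covers
    // in the orthographic projection (assumed to be known, e.g. in m^2).
    public static double ProjectedArea(RenderTarget2D target, Color key, double pixelArea)
    {
        var pixels = new Color[target.Width * target.Height];
        target.GetData(pixels); // GPU -> CPU readback of the whole target
        return CountMatching(pixels, key) * pixelArea;
    }
}
```

It would be called once per view direction, e.g. `PixelAreaCounter.ProjectedArea(renderTarget, Color.LightSteelBlue, pixelArea)`. I suspect that the readback in GetData and the per-pixel loop are the slow parts, which is why I would like to do the counting on the GPU instead.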

However, I believe that this task can also be solved on the GPU. I have read about atomic counters employed to get an image histogram, which is exactly what I need. In this tutorial, an atomic counter (or InterlockedAdd) is used to correct an image for luminosity.

However, I have failed to make it work so far. I have checked the HLSL reference and seen that the function I probably need is only available for shader model 5 and newer (https://msdn.microsoft.com/en-us/library/windows/desktop/ff471406(v=vs.85).aspx), so I have changed the profile accordingly. Unfortunately, this is currently not possible with OpenGL 3.0 (it needs at least 4.2, I believe), so I am using DirectX.

Since I am very new to shaders, my approach is probably very naive. This is the main routine of the pixel shader, using the standard notation predefined in the MonoGame SpriteEffect template:

    groupshared uint histogram[32];

    float4 MainPS(VertexShaderOutput input) : COLOR
    {
        // get bin to write in
        int bin = input.Color.r * 32;

        // atomic add
        InterlockedAdd(histogram[bin], 1);

        // for now, display image
        return tex2D(SpriteTextureSampler,input.TextureCoordinates) * input.Color;
    }

I get two error messages during compilation:

a) groupshared is not supported in ps_5_0 -> which shader level should I use?

b) Resource being indexed cannot come from conditional expressions … -> probably using histogram the wrong way?

Does anybody have experience in how to use this command properly? Or am I missing the point entirely and should use another way to solve this problem?

Thanks a lot in advance