EDIT: This question was originally about hemisphere-oriented SSAO, but I was having too much trouble with that approach and read that it’s more susceptible to artifacts, so I switched to spherical sampling. I’m still having problems, although it’s much closer!

I’ve loosely been following a number of tutorials, but I’m having a few issues with my SSAO.

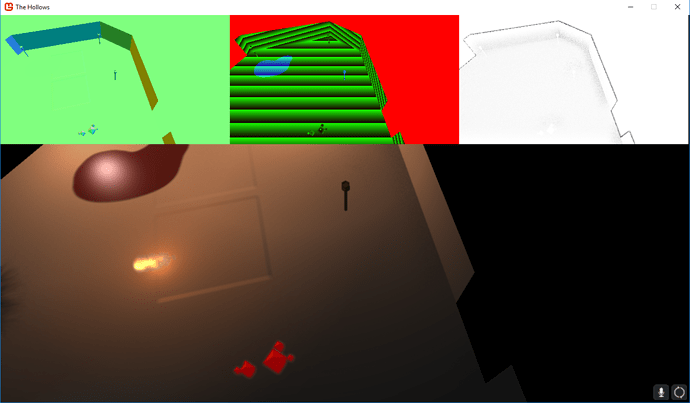

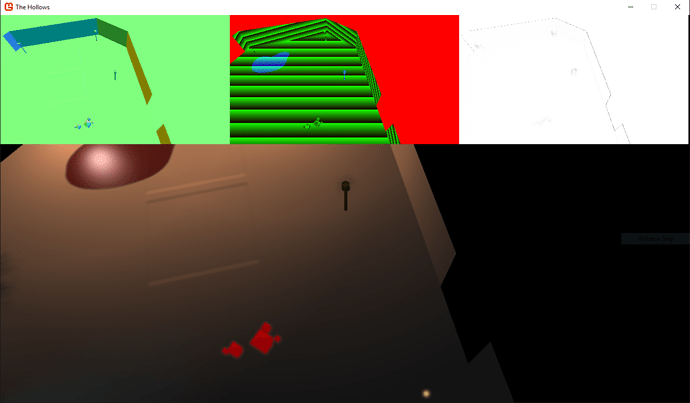

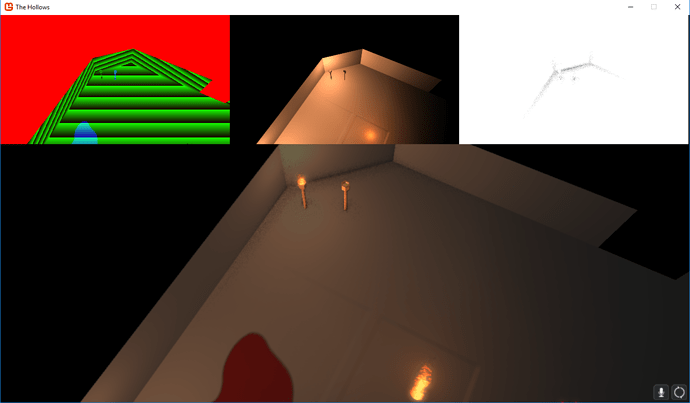

This screenshot shows world-space normals, encoded depth, and the ambient occlusion map, with the composition in the background. There’s darkness on the floor by the right wall, but not at the base of the wall itself. There’s also occasionally a strange cloud of darkness around the head of the torch, but nothing around the base. I also suspect that one of my transformations is incorrect, because the ambient occlusion changes drastically whenever the camera moves.

Here are the main bits of my shader:

    // Vertex Input Structure
    struct VSI
    {
        float3 Position : POSITION0;
        float2 UV : TEXCOORD0;
    };

    // Vertex Output Structure
    struct VSO
    {
        float4 Position : POSITION0;
        float2 UV : TEXCOORD0;
        float3 ViewRay : TEXCOORD1;
    };

    // Vertex Shader
    VSO VS(VSI input)
    {
        // Initialize Output
        VSO output;

        // Pass Position
        output.Position = float4(input.Position, 1);

        // Pass texcoords
        output.UV = input.UV;

        // Build the view ray for this vertex (interpolated per pixel)
        output.ViewRay = float3(
            -input.Position.x * TanHalfFov * GBufferTextureSize.x / GBufferTextureSize.y,
            input.Position.y * TanHalfFov,
            1);

        // Return
        return output;
    }

    float3 randomNormal(float2 uv)
    {
        float noiseX = (frac(sin(dot(uv, float2(15.8989f, 76.132f) * 1.0f)) * 46336.23745f));
        float noiseY = (frac(sin(dot(uv, float2(11.9899f, 62.223f) * 2.0f)) * 34748.34744f));
        float noiseZ = (frac(sin(dot(uv, float2(13.3238f, 63.122f) * 3.0f)) * 59998.47362f));

        return normalize(float3(noiseX, noiseY, noiseZ));
    }

    // Pixel Shader
    float4 PS(VSO input) : COLOR0
    {
        // Sample Vectors
        float3 kernel[8] =
        {
            float3(0.355512, -0.709318, -0.102371),
            float3(0.534186, 0.71511, -0.115167),
            float3(-0.87866, 0.157139, -0.115167),
            float3(0.140679, -0.475516, -0.0639818),
            float3(-0.207641, 0.414286, 0.187755),
            float3(-0.277332, -0.371262, 0.187755),
            float3(0.63864, -0.114214, 0.262857),
            float3(-0.184051, 0.622119, 0.262857)
        };

        // Get Normal from GBuffer
        half3 normal = DecodeNormalRGB(tex2D(GBuffer1, input.UV).rgb);
        normal = normalize(mul(normal, ViewIT));

        // Get Depth from GBuffer
        float2 encodedDepth = tex2D(GBuffer2, input.UV).rg;
        float depth = DecodeFloatRG(encodedDepth) * -FarClip;

        // Scale ViewRay by Depth
        float3 position = normalize(input.ViewRay) * depth;

        // Construct random transformation for the kernel
        float3 rand = randomNormal(input.UV);

        // Sample
        float numSamplesUsed = 0;
        float occlusion = 0.0;

        for (int i = 0; i < NUM_SAMPLES; ++i)
        {
            // Reflect the sample across a random normal to avoid artifacts
            float3 sampleVec = reflect(kernel[i], rand);

            // Negate the sample if it points into the surface
            sampleVec *= sign(dot(sampleVec, normal));

            // Ignore the sample if it's almost parallel with the surface to avoid depth-imprecision artifacts
            //if (dot(sampleVec, normal) > 0.15) // TODO: is this necessary for our purposes?
            //{

            // Offset the sample by the current pixel's position
            float4 samplePos = float4(position + sampleVec * SampleRadius, 1);

            // Project the sample to screen space
            float2 samplePosUV = float2(dot(samplePos, ProjectionCol1), dot(samplePos, ProjectionCol2));
            samplePosUV /= dot(samplePos, ProjectionCol4);

            // Convert to UV space
            samplePosUV = (samplePosUV + 1) / 2;

            // Get sample depth
            float2 encodedSampleDepth = tex2D(GBuffer2, samplePosUV).rg;
            float sampleDepth = DecodeFloatRG(encodedSampleDepth) * -FarClip;

            // Calculate and accumulate occlusion
            float diff = 1 * max(sampleDepth - depth, 0.0f);
            occlusion += 1.0f / (1.0f + diff * diff * 0.1);
            ++numSamplesUsed;

            //}
        }

        occlusion /= numSamplesUsed;

        return float4(occlusion, occlusion, occlusion, 1.0);
    }
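
Written out as equations, this is what I believe the pixel shader above computes. It's a sketch read directly off the code, not a verified derivation, and it assumes the vertex shader is fed a full-screen quad whose position $(x, y)$ is already in clip space. The symbols are shorthand I'm introducing here: $t$ is `TanHalfFov`, $a$ is `GBufferTextureSize.x / GBufferTextureSize.y`, $F$ is `FarClip`, $R$ is `SampleRadius`, $N$ is `NUM_SAMPLES`, $\mathbf{c}_1, \mathbf{c}_2, \mathbf{c}_4$ are `ProjectionCol1`, `ProjectionCol2`, `ProjectionCol4`, $D(\cdot)$ is `DecodeFloatRG` applied to the `GBuffer2` sample at the given UV, and $\mathbf{v}_i$ is the kernel vector after the `reflect` and the sign flip.

$$
\begin{aligned}
\mathbf{r} &= (-x\,t\,a,\; y\,t,\; 1) \\
d &= -F\,D(\mathbf{uv}) \\
\mathbf{p} &= d\,\frac{\mathbf{r}}{\lVert\mathbf{r}\rVert} \\
\mathbf{s}_i &= \mathbf{p} + R\,\mathbf{v}_i, \qquad \tilde{\mathbf{s}}_i = (\mathbf{s}_i,\, 1) \\
\mathbf{uv}_i &= \frac{1}{2}\left(\frac{(\tilde{\mathbf{s}}_i\cdot\mathbf{c}_1,\; \tilde{\mathbf{s}}_i\cdot\mathbf{c}_2)}{\tilde{\mathbf{s}}_i\cdot\mathbf{c}_4} + (1,\,1)\right) \\
d_i &= -F\,D(\mathbf{uv}_i) \\
\text{occlusion} &= \frac{1}{N}\sum_{i=1}^{N}\frac{1}{1 + 0.1\,\max(d_i - d,\; 0)^2}
\end{aligned}
$$

Two things stand out to me when it's written this way, though I can't tell whether either is the actual problem. Because of the normalization, $p_z = d / \lVert\mathbf{r}\rVert$, which equals $d$ only at the center of the screen, so this step is right only if the G-buffer stores distance from the camera rather than view-space depth (that pass isn't shown here). Also, the $\mathbf{uv}_i$ mapping keeps $y$ pointing the same way as in clip space, whereas Direct3D texture coordinates have $v$ increasing downward.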

I’d really appreciate it if anyone could give me a hand; I’ve been stuck on this for a few days, scouring the web and these forums. Thanks!