Hey all,

This is my first ever post in the great land of the MonoGame forum. Hi! I’ve been a super long time XNA/MonoGame lurker though.

I have recently tried to follow this crazy old tutorial written for XNA 2 about deferred lighting.

Catalinzima Deferred Rendering

I have been able to render out the G-buffer, with the color, normal, and depth render targets. I’m onto part 2, directional lighting, and I’ve hit a snag. The basic idea is that you send the three targets making up the G-buffer to the DirectionalLight .fx shader, along with the details of some directional light. Then you use tex2D to sample the color, normal, and depth targets to figure out what each pixel should look like when lit. (I’m also pretty new to shaders in general.) Anyway, the shader from the tutorial still compiles fine, but it doesn’t do anything. I just get a sad empty screen.
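
As a rough illustration of what that lighting pass is meant to compute per pixel, here is a CPU-side sketch in plain C# of the diffuse part only. It is not the tutorial's shader: the class and method names are made up, and it assumes the G-buffer stores normals remapped from [-1, 1] to [0, 1] and that the light direction points from the light toward the scene.

```csharp
using System;
using System.Numerics;

// Hypothetical helper, for illustration only (not part of the tutorial or MonoGame).
public static class DirectionalLightSketch
{
    // Assumption: normals were written to the G-buffer as 0.5 * n + 0.5,
    // so they are expanded back to [-1, 1] here and renormalized.
    public static Vector3 DecodeNormal(Vector3 encoded)
    {
        return Vector3.Normalize(encoded * 2f - Vector3.One);
    }

    // Lambert diffuse term for one pixel: albedo * lightColor * max(0, N . L).
    // Assumption: lightDirection points from the light toward the scene,
    // so it is negated to get the surface-to-light vector.
    public static Vector3 Shade(Vector3 albedo, Vector3 encodedNormal,
                                Vector3 lightDirection, Vector3 lightColor)
    {
        Vector3 normal = DecodeNormal(encodedNormal);
        Vector3 toLight = -Vector3.Normalize(lightDirection);
        float nDotL = Math.Max(0f, Vector3.Dot(normal, toLight));
        return albedo * lightColor * nDotL;
    }
}
```

With an encoded normal of (0.5, 1, 0.5), which decodes to straight up, and a light pointing straight down, `Shade` returns the albedo multiplied by the light color. A surface facing away from the light comes out black.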

I took a look and simplified the pixel shader to run some sanity checks. I figured I would just make the DirectionalLight effect act as a pass-through for the color target, to at least confirm that the texture data was coming through correctly. Here is where the snag happened: tex2D(colorSampler, input.TexCoord) always returns float4(1,1,1,1).

And I don’t know why.

Okay, here is some code to get my point across.

Here is the shader (with my silly sanity check modification):

    // direction of the light
    float3 lightDirection;

    // color of the light
    float3 Color;

    // position of the camera, for specular light
    float3 cameraPosition;

    // this is used to compute the world-position
    float4x4 InvertViewProjection;

    // diffuse color, and specularIntensity in the alpha channel
    texture colorMap;

    // normals, and specularPower in the alpha channel
    texture normalMap;

    // depth
    texture depthMap;

    sampler colorSampler = sampler_state
    {
        Texture = (colorMap);
        MAGFILTER = LINEAR;
        MINFILTER = LINEAR;
        MIPFILTER = LINEAR;
        AddressU = Wrap;
        AddressV = Wrap;
    };

    sampler depthSampler = sampler_state
    {
        Texture = (depthMap);
        AddressU = CLAMP;
        AddressV = CLAMP;
        MagFilter = POINT;
        MinFilter = POINT;
        Mipfilter = POINT;
    };

    sampler normalSampler = sampler_state
    {
        Texture = (normalMap);
        AddressU = CLAMP;
        AddressV = CLAMP;
        MagFilter = POINT;
        MinFilter = POINT;
        Mipfilter = POINT;
    };

    struct VertexShaderInput
    {
        float3 Position : POSITION0;
        float4 Color : COLOR0;
        float2 TexCoord : TEXCOORD0;
    };

    struct VertexShaderOutput
    {
        float4 Position : SV_POSITION;
        float2 TexCoord : TEXCOORD0;
        float4 Color : COLOR0;
    };

    float2 halfPixel;

    VertexShaderOutput VertexShaderFunction(VertexShaderInput input)
    {
        VertexShaderOutput output;
        output.Position = float4(input.Position, 1);
        // align texture coordinates
        output.TexCoord = input.TexCoord - halfPixel;
        output.Color = float4(1, 0, 0, 1);
        return output;
    }

    float4 PixelShaderFunction(VertexShaderOutput input) : COLOR0
    {
        float2 tex = input.TexCoord;

        // here, I am just showing that the tex.xy is actually correct, and trying to set
        // the blue channel to match something from the sampled colorSampler.
        float4 color = float4(tex.xy, 0, 1);
        color.b = tex2D(colorSampler, tex).r;
        return float4(color.rgb, 1);
    }

    technique Technique1
    {
        pass Pass1
        {
    #if SM4
            VertexShader = compile vs_4_0_level_9_1 VertexShaderFunction();
            PixelShader = compile ps_4_0_level_9_1 PixelShaderFunction();
    #elif SM3
            VertexShader = compile vs_3_0 VertexShaderFunction();
            PixelShader = compile ps_3_0 PixelShaderFunction();
    #else
            VertexShader = compile vs_2_0 VertexShaderFunction();
            PixelShader = compile ps_2_0 PixelShaderFunction();
    #endif
        }
    }

And here is where I’m calling it in C#:

    DirectionalLightEffect.Parameters["colorMap"].SetValue(_colorRT);
    //_device.Textures[1] = _colorRT;
    //DirectionalLightEffect.Parameters["normalMap"].SetValue(_normalRT);
    //DirectionalLightEffect.Parameters["depthMap"].SetValue(_depthRT);
    //DirectionalLightEffect.Parameters["lightDirection"].SetValue(lightDirection);
    //DirectionalLightEffect.Parameters["Color"].SetValue(color.ToVector3());
    //DirectionalLightEffect.Parameters["cameraPosition"].SetValue(camPosition);
    //DirectionalLightEffect.Parameters["InvertViewProjection"].SetValue(Matrix.Invert(view * projection));
    DirectionalLightEffect.Parameters["halfPixel"].SetValue(_halfPixel);

    _sb.Begin(SpriteSortMode.Immediate,
        BlendState.NonPremultiplied,
        SamplerState.LinearWrap,
        DepthStencilState.Default,
        RasterizerState.CullNone,
        DirectionalLightEffect);

    _sb.Draw(_pixel,
        new Rectangle(0, -1, 1, 1), Color.Blue);

    _sb.End();

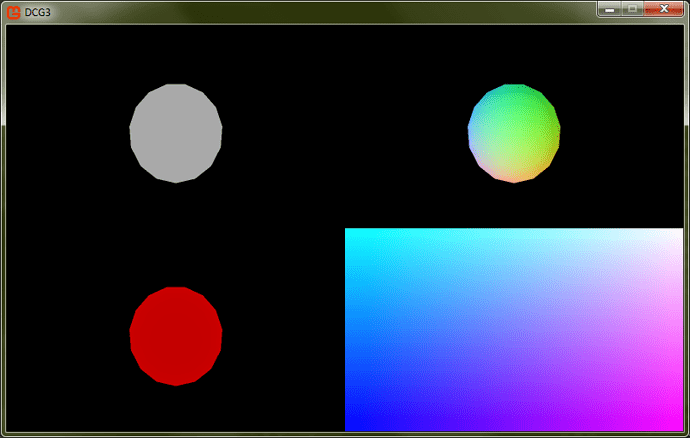

and finally, here is a screen capture of what I see.

Here are the quadrant labels:

top left: color | top right: normal

bottom left: depth | bottom right: output of the directional light shader

Any help would be greatly appreciated. Thanks in advance.

Also, hello forums!

Edit: here is the code for initializing the render targets:

    // initialize the deferred RTs
    var bbWidth = device.PresentationParameters.BackBufferWidth;
    var bbHeight = device.PresentationParameters.BackBufferHeight;

    _colorRT = new RenderTarget2D(device, bbWidth, bbHeight, false, SurfaceFormat.Color, DepthFormat.Depth24Stencil8); // TODO watch out for depth format, it's shifty...
    _normalRT = new RenderTarget2D(device, bbWidth, bbHeight, false, SurfaceFormat.Color, DepthFormat.None); // TODO watch out for depth format, it's shifty...
    _depthRT = new RenderTarget2D(device, bbWidth, bbHeight, false, SurfaceFormat.HalfSingle, DepthFormat.None); // TODO watch out for depth format, it's shifty...

    // half a texel in texture-coordinate units, passed to the shader as halfPixel
    _halfPixel = new Vector2(.5f / bbWidth, .5f / bbHeight);
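
For reference, the `_halfPixel` value above is simply half a texel expressed in texture-coordinate units, and the vertex shader subtracts it from each texture coordinate. Below is a minimal sketch of that arithmetic in plain C#. The helper names are hypothetical, and the 800x480 size in the usage note is only an illustration.

```csharp
using System;
using System.Numerics;

// Hypothetical helper, for illustration only.
public static class HalfPixelSketch
{
    // Half a texel in normalized texture coordinates (same formula as _halfPixel).
    public static Vector2 Compute(int width, int height)
    {
        return new Vector2(0.5f / width, 0.5f / height);
    }

    // Mirrors the vertex shader line: output.TexCoord = input.TexCoord - halfPixel.
    public static Vector2 Align(Vector2 texCoord, Vector2 halfPixel)
    {
        return texCoord - halfPixel;
    }
}
```

For an 800x480 target, `HalfPixelSketch.Compute(800, 480)` is roughly (0.000625, 0.00104). The offset comes from the Direct3D 9 era the tutorial was written in, where pixel centers and texel centers sit half a unit apart. Whether it is still needed depends on the graphics backend, which is not verified here.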